Question: We consider Q-Learning with -Greedy algorithm for the above MDP problem. The above tables show the latest values of N(s,a) (top) and Qls,a) (bottom). Don't

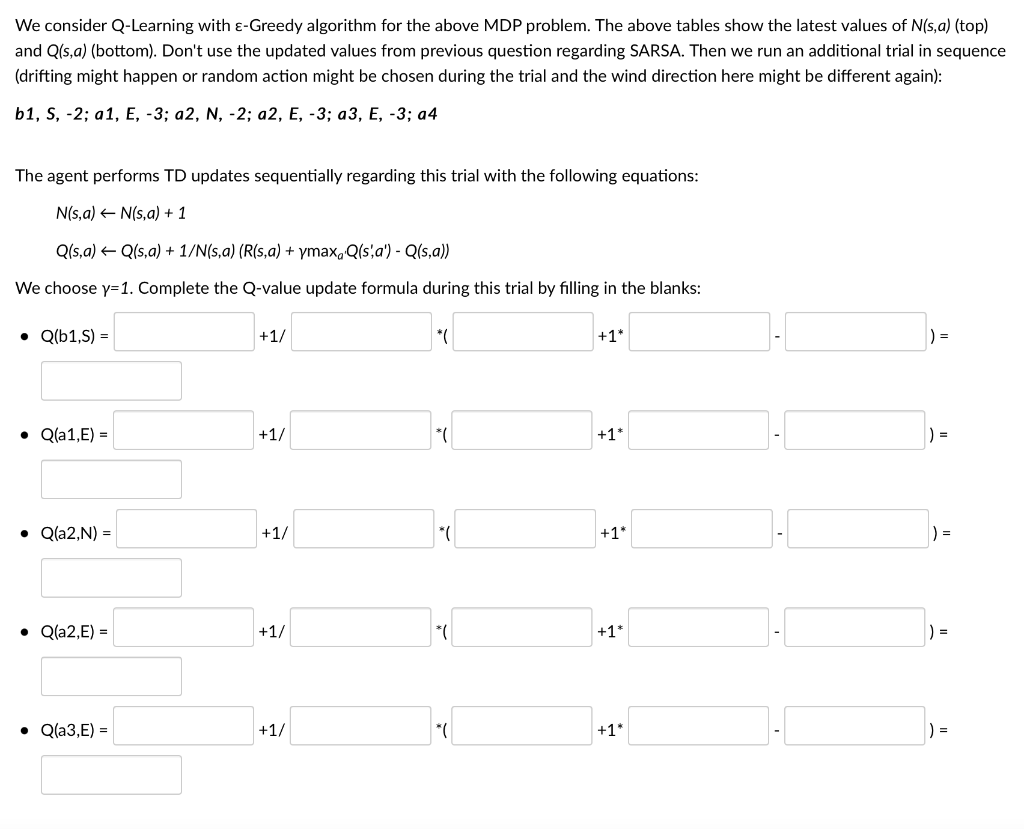

We consider Q-Learning with -Greedy algorithm for the above MDP problem. The above tables show the latest values of N(s,a) (top) and Qls,a) (bottom). Don't use the updated values from previous question regarding SARSA. Then we run an additional trial in sequence (drifting might happen or random action might be chosen during the trial and the wind direction here might be different again): b1, S, -2; a1, E, -3; a2, N, -2; a2, E, -3; a3, E, -3; a4 The agent performs TD updates sequentially regarding this trial with the following equations: Ns, a) + N(s,a) + 1 Qls,a) + Qls,a) + 1/N(s,a) (R(s,a) + ymax, Qls,a') - Q(s,a)) We choose y=1. Complete the Q-value update formula during this trial by filling in the blanks: Q(b1,5) = +1/ * +1" ) = Q(a1,E) = +1/ * +1* Qla2,N) = +1/ +1* 11 Qla2,E) = +1/ 11 * +1* Qla3,E) = +1/ ( +1* ) = We consider Q-Learning with -Greedy algorithm for the above MDP problem. The above tables show the latest values of N(s,a) (top) and Qls,a) (bottom). Don't use the updated values from previous question regarding SARSA. Then we run an additional trial in sequence (drifting might happen or random action might be chosen during the trial and the wind direction here might be different again): b1, S, -2; a1, E, -3; a2, N, -2; a2, E, -3; a3, E, -3; a4 The agent performs TD updates sequentially regarding this trial with the following equations: Ns, a) + N(s,a) + 1 Qls,a) + Qls,a) + 1/N(s,a) (R(s,a) + ymax, Qls,a') - Q(s,a)) We choose y=1. Complete the Q-value update formula during this trial by filling in the blanks: Q(b1,5) = +1/ * +1" ) = Q(a1,E) = +1/ * +1* Qla2,N) = +1/ +1* 11 Qla2,E) = +1/ 11 * +1* Qla3,E) = +1/ ( +1* ) =

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts