Question: We learned about boosting in lecture, and the topic is covered in Murphy 16.4. On page 555 Murphy claims that it was proved that one

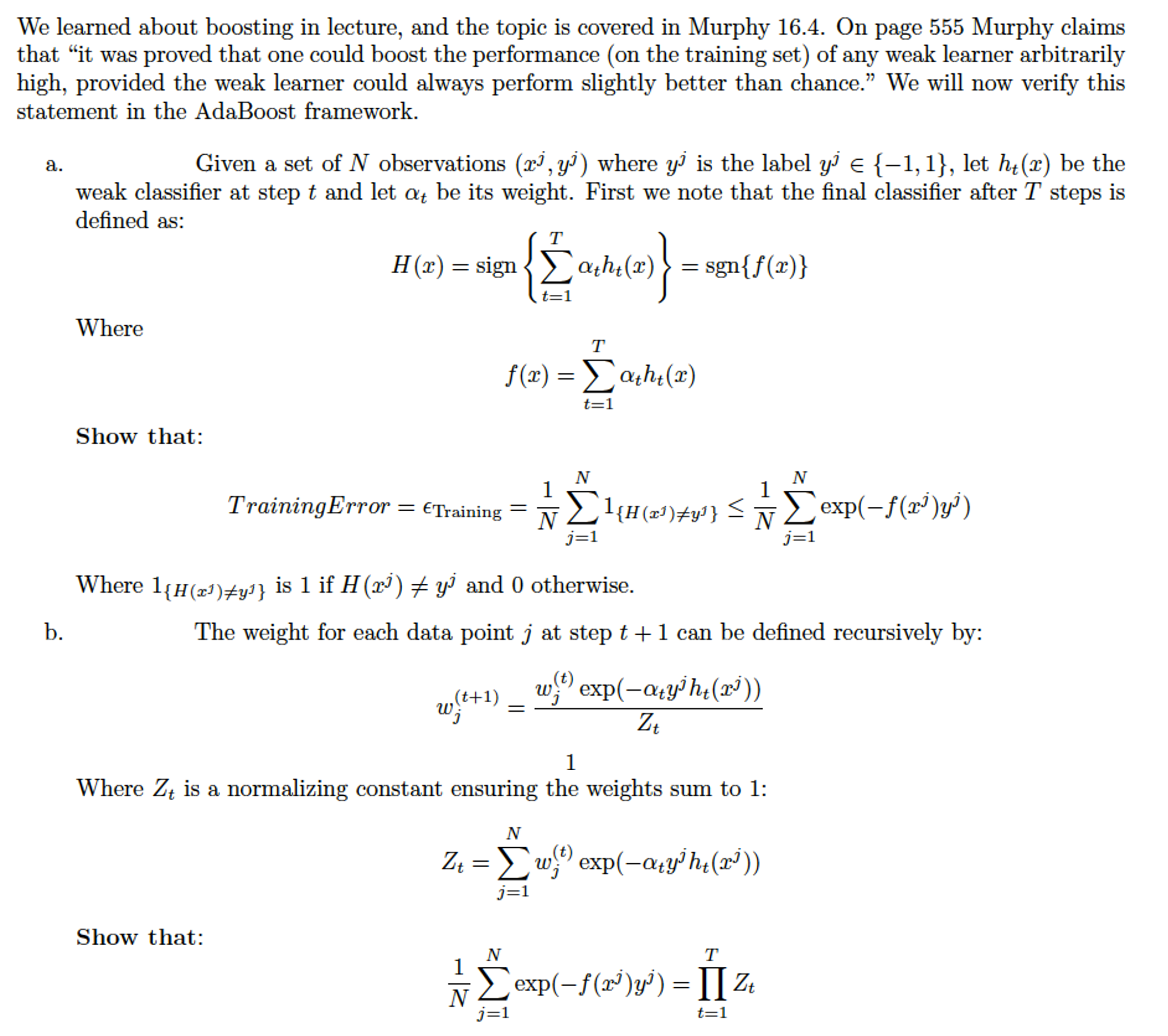

We learned about boosting in lecture, and the topic is covered in Murphy 16.4. On page 555 Murphy claims that "it was proved that one could boost the performance (on the training set) of any weak learner arbitrarily high, provided the weak learner could always perform slightly better than chance." We will now verify this statement in the AdaBoost framework. a. Given a set of N observations (x), y. ) where y' is the label y. E {-1, 1}, let he(x) be the weak classifier at step t and let at be its weight. First we note that the final classifier after T steps is defined as: Where f (z) = [athe(1) t= 1 Show that: Training Error = Training = N 1 N exp ( - f (x) ) y' ) 1= 1 Where 1( H(x]) yl) is 1 if H(x) # y' and 0 otherwise. b. The weight for each data point j at step t + 1 can be defined recursively by: w(t+1) _w; exp(-atyiht(xi)) Zt Where Zt is a normalizing constant ensuring the weights sum to 1: Zt = w, exp(-aty' hi(2))) j= 1 Show that: N T 2 - Lexp(-f (2) y') = [IZ j=1 t= 1

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts