Question:

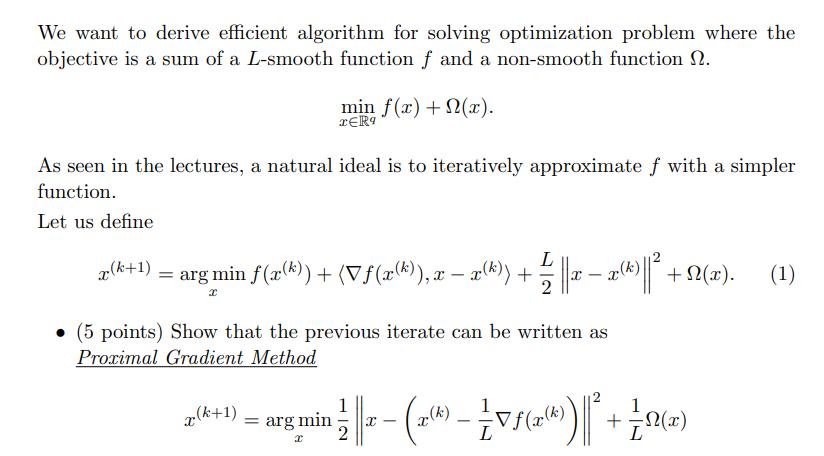

We want to derive an efficient algorithm for solving an optimization problem whose objective is the sum of an $L$-smooth function $f$ and a non-smooth function $\psi$:

$$\min_{x \in \mathbb{R}^d} \; f(x) + \psi(x).$$

As seen in the lectures, a natural idea is to iteratively approximate $f$ with a simpler function. Let us define

$$x^{(k+1)} = \arg\min_{x} \; f(x^{(k)}) + \langle \nabla f(x^{(k)}), x - x^{(k)} \rangle + \frac{L}{2}\|x - x^{(k)}\|^2 + \psi(x).$$

(5 points) Show that the previous iterate can be written as the Proximal Gradient Method:

$$x^{(k+1)} = \arg\min_{x} \; \frac{L}{2}\left\|x - \left(x^{(k)} - \frac{1}{L}\nabla f(x^{(k)})\right)\right\|^2 + \psi(x). \tag{1}$$
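To make iteration (1) concrete, here is a minimal pure-Python sketch on an assumed instance that is not part of the question: the lasso, with smooth part $f(x) = \frac12\|Ax - b\|^2$ and non-smooth part $\psi(x) = \lambda\|x\|_1$. For this choice the minimization in (1) has a closed form, coordinate-wise soft-thresholding with threshold $\lambda/L$. All helper names (`grad_f`, `soft_threshold`, `proximal_gradient`, ...) are hypothetical, and $L$ is taken as the squared Frobenius norm of $A$, which is a valid but possibly loose upper bound on the true constant $\|A\|_2^2$.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(A: Matrix, x: Vector) -> Vector:
    """Return A x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def rmatvec(A: Matrix, r: Vector) -> Vector:
    """Return A^T r without forming the transpose explicitly."""
    n_cols = len(A[0])
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(n_cols)]


def grad_f(A: Matrix, b: Vector, x: Vector) -> Vector:
    """Gradient of the assumed smooth part f(x) = 0.5 * ||A x - b||^2."""
    residual = [ax - bi for ax, bi in zip(matvec(A, x), b)]
    return rmatvec(A, residual)


def soft_threshold(v: Vector, t: float) -> Vector:
    """Prox of t * ||.||_1: shrink every coordinate toward zero by t."""
    return [math.copysign(max(abs(vi) - t, 0.0), vi) for vi in v]


def lipschitz_bound(A: Matrix) -> float:
    """||A||_F^2 >= ||A||_2^2, so this is a valid (possibly loose) L for grad f."""
    return sum(a * a for row in A for a in row)


def proximal_gradient(A: Matrix, b: Vector, lam: float, iters: int = 500) -> Vector:
    """Iterate (1): gradient step on f, then prox step on psi = lam * ||.||_1."""
    L = lipschitz_bound(A)
    x = [0.0] * len(A[0])
    for _ in range(iters):
        g = grad_f(A, b, x)
        # Gradient step: v = x^(k) - (1/L) * grad f(x^(k))
        v = [xi - gi / L for xi, gi in zip(x, g)]
        # Prox step: argmin_x (L/2)||x - v||^2 + lam*||x||_1 = soft_threshold(v, lam/L)
        x = soft_threshold(v, lam / L)
    return x


if __name__ == "__main__":
    print(proximal_gradient([[1.0, 0.0], [0.0, 1.0]], [3.0, 0.5], lam=1.0))
```

With $A = I$, $b = (3, 0.5)$ and $\lambda = 1$, the exact minimizer is the soft-thresholded $b$, namely $(2, 0)$, so the printed result should be close to that. Any $L' \ge L$ still satisfies the descent lemma, which is why the Frobenius bound is safe; it only shortens the step. The fixed iteration count keeps the sketch short; a practical version would stop once successive iterates stop changing.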

Step by Step Solution


Let's assume that the smooth function $f$ is $L$-smooth, meaning its gradient is Lipschitz ...
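One standard way to carry out the derivation is sketched here, writing $\psi$ for the non-smooth term and $g_k = \nabla f(x^{(k)})$ (both names are our own notation). $L$-smoothness gives the descent lemma, so the model inside the definition of $x^{(k+1)}$ is an upper bound on $f$. Completing the square in the linear and quadratic terms then yields (1), because $f(x^{(k)})$ and $-\frac{1}{2L}\|g_k\|^2$ do not depend on $x$ and therefore do not change the $\arg\min$:

$$
\begin{aligned}
&f(x) \le f(x^{(k)}) + \langle g_k, x - x^{(k)} \rangle + \tfrac{L}{2}\|x - x^{(k)}\|^2 \quad \text{for all } x,\\[4pt]
&\langle g_k, x - x^{(k)} \rangle + \tfrac{L}{2}\|x - x^{(k)}\|^2
 = \tfrac{L}{2}\Big\|x - \big(x^{(k)} - \tfrac{1}{L} g_k\big)\Big\|^2 - \tfrac{1}{2L}\|g_k\|^2,\\[4pt]
&x^{(k+1)} = \arg\min_x \; \tfrac{L}{2}\Big\|x - \big(x^{(k)} - \tfrac{1}{L} g_k\big)\Big\|^2 + \psi(x)
 = \operatorname{prox}_{\psi/L}\big(x^{(k)} - \tfrac{1}{L} g_k\big).
\end{aligned}
$$

The middle identity follows from expanding $\frac{L}{2}\|u + \frac{1}{L}g_k\|^2 = \frac{L}{2}\|u\|^2 + \langle g_k, u\rangle + \frac{1}{2L}\|g_k\|^2$ with $u = x - x^{(k)}$. The last equality uses $\operatorname{prox}_h(v) = \arg\min_x \frac12\|x - v\|^2 + h(x)$ and the fact that dividing the objective by $L > 0$ leaves the minimizer unchanged.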
