Question: Write a C program that converts a 32-bit sequence of 1's and 0's to integers or to floating point IEEE single precision . A few

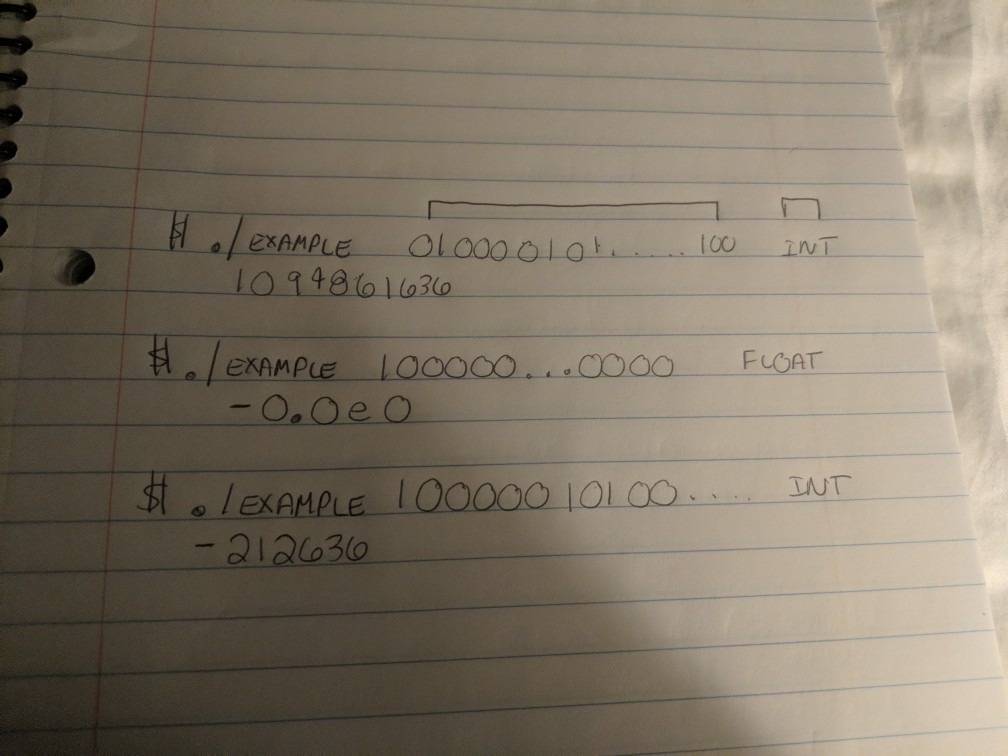

Write a C program that converts a 32-bit sequence of 1's and 0's to integers or to floating point IEEE single precision . A few example outputs in command line are

where the first parameter is the bit sequence, and the second is either float or int. My source code is below but my program isnt working.
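For reference, `Ieee754SingleDigits2DoubleNoCheat` in the code below decodes the bits with the standard IEEE 754 single-precision formulas. Here $s$ is bit 0, $e$ is the 8-bit biased exponent (bits 1 to 8), and $M$ is the 23-bit fraction (bits 9 to 31) read as an unsigned integer. The worked example uses an arbitrarily chosen bit pattern.

$$
x =
\begin{cases}
(-1)^s \times \left(1 + \dfrac{M}{2^{23}}\right) \times 2^{e-127} = (-1)^s \, (2^{23} + M)\, 2^{e-150}, & 1 \le e \le 254 \ \text{(normal)} \\[2ex]
(-1)^s \times \dfrac{M}{2^{23}} \times 2^{-126} = (-1)^s \, M \, 2^{-149}, & e = 0 \ \text{(subnormal)}
\end{cases}
$$

($e = 255$ encodes infinity when $M = 0$ and NaN otherwise.) The right-hand forms match the code: `mant` starts at 1 (normal) or 0 (subnormal), the 23 fraction bits are shifted in, and `exp` becomes $e - 127 - 23$ or $-126 - 23$. For example, the pattern `1 10000001 01000000000000000000000` gives

$$
s = 1,\quad e = 10000001_2 = 129,\quad M = 2^{21},\quad
x = -\left(1 + \frac{2^{21}}{2^{23}}\right) \times 2^{129-127} = -1.25 \times 4 = -5.
$$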

```c
#include <stdio.h>
#include <limits.h>

#if UINT_MAX >= 0xFFFFFFFF
typedef unsigned uint32;
#else
typedef unsigned long uint32;
#endif

#define C_ASSERT(expr) extern char CAssertExtern[(expr)?1:-1]

// Ensure uint32 is exactly 32-bit
C_ASSERT(sizeof(uint32) * CHAR_BIT == 32);

// Ensure float has the same number of bits as uint32, 32
C_ASSERT(sizeof(uint32) == sizeof(float));

double Ieee754SingleDigits2DoubleCheat(const char s[32])
{
  uint32 v;
  float f;
  unsigned i;
  char *p1 = (char*)&v, *p2 = (char*)&f;

  // Collect binary digits into an integer variable (most significant bit first)
  v = 0;
  for (i = 0; i < 32; i++)
    v = (v << 1) + (s[i] - '0');

  // Copy the bits from the integer variable to a float variable
  // (assumes integers and floats use the same byte order)
  for (i = 0; i < sizeof(f); i++)
    *p2++ = *p1++;

  return f;
}

double Ieee754SingleDigits2DoubleNoCheat(const char s[32])
{
  double f;
  int sign, exp;
  uint32 mant;
  int i;

  // Bit 0 is the sign
  sign = s[0] - '0';

  // Do you really need strto*() or pow() here?
  // Bits 1..8 are the biased exponent
  exp = 0;
  for (i = 1; i <= 8; i++)
    exp = exp * 2 + (s[i] - '0');

  // Remove the exponent bias
  exp -= 127;

  if (exp > -127)
  {
    // Normal(ized) numbers
    mant = 1; // The implicit "1."
    // Account for "1." being in bit position 23 instead of bit position 0
    exp -= 23;
  }
  else
  {
    // Subnormal numbers
    mant = 0; // No implicit "1."
    exp = -126; // See the IEEE 754 formulas
    // Account for ".1" being in bit position 22 instead of bit position -1
    exp -= 23;
  }
  // Note: exponent bits 11111111 (infinity/NaN) are not special-cased here.

  // Or do you really need strto*() or pow() here?
  // Bits 9..31 are the 23-bit fraction
  for (i = 9; i < 32; i++)
    mant = mant * 2 + (s[i] - '0');

  // Scale by 2^exp; multiplying or dividing by 2 is exact here
  f = mant;
  while (exp > 0)
    f *= 2, exp--;

  // Or here?
  while (exp < 0)
    f /= 2, exp++;

  if (sign)
    f = -f;

  return f;
}
```
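The posted code handles only the `float` interpretation and has no `main`, so it cannot be run with the two command-line arguments as written. Below is a minimal sketch covering both modes, assuming C99 `<stdint.h>` and that `float` is IEEE 754 binary32 with the same byte order as `uint32_t`. It swaps the byte-by-byte copy for `memcpy`, the usual portable way to reinterpret bits in C. The function names (`bits_to_u32`, `u32_to_int32`, `u32_to_float`, `convert_bits`) are hypothetical, and the `%g` output format is an assumption, since the expected outputs appear only in the image.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Parse exactly 32 '0'/'1' characters (most significant bit first).
   Returns 0 on success, -1 if the string is not a valid 32-bit pattern. */
static int bits_to_u32(const char *bits, uint32_t *out)
{
    uint32_t v = 0;
    size_t i;

    if (strlen(bits) != 32)
        return -1;
    for (i = 0; i < 32; i++) {
        if (bits[i] != '0' && bits[i] != '1')
            return -1;
        v = (v << 1) | (uint32_t)(bits[i] - '0');
    }
    *out = v;
    return 0;
}

/* "int" mode: interpret the pattern as a two's-complement 32-bit integer.
   Computed arithmetically to avoid implementation-defined conversion of
   out-of-range unsigned values to a signed type. */
static int32_t u32_to_int32(uint32_t v)
{
    if (v <= (uint32_t)INT32_MAX)
        return (int32_t)v;
    return (int32_t)(v - 0x80000000u) - INT32_MAX - 1;
}

/* "float" mode: reinterpret the same 32 bits as an IEEE 754 single. */
static float u32_to_float(uint32_t v)
{
    float f;
    assert(sizeof f == sizeof v); /* memcpy below needs equal sizes */
    memcpy(&f, &v, sizeof f);
    return f;
}

/* Hypothetical driver for the two command-line arguments.
   Prints the result to stdout; returns 0 on success, 1 on bad input. */
static int convert_bits(const char *bits, const char *mode)
{
    uint32_t v;

    if (bits_to_u32(bits, &v) != 0) {
        fprintf(stderr, "expected exactly 32 characters of '0' or '1'\n");
        return 1;
    }
    if (strcmp(mode, "int") == 0) {
        printf("%ld\n", (long)u32_to_int32(v));
    } else if (strcmp(mode, "float") == 0) {
        printf("%g\n", u32_to_float(v)); /* float is promoted to double */
    } else {
        fprintf(stderr, "mode must be \"int\" or \"float\"\n");
        return 1;
    }
    return 0;
}
```

With these helpers, `main` only has to check that `argc == 3` and return `convert_bits(argv[1], argv[2])`. Validating the input up front also avoids silently producing garbage when the argument is shorter than 32 characters, which the posted functions do not check.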
