Question: You will learn to build Hidden Markov Model using the Viterbi algorithm and apply it to the task of POS tagging. Complete each of the

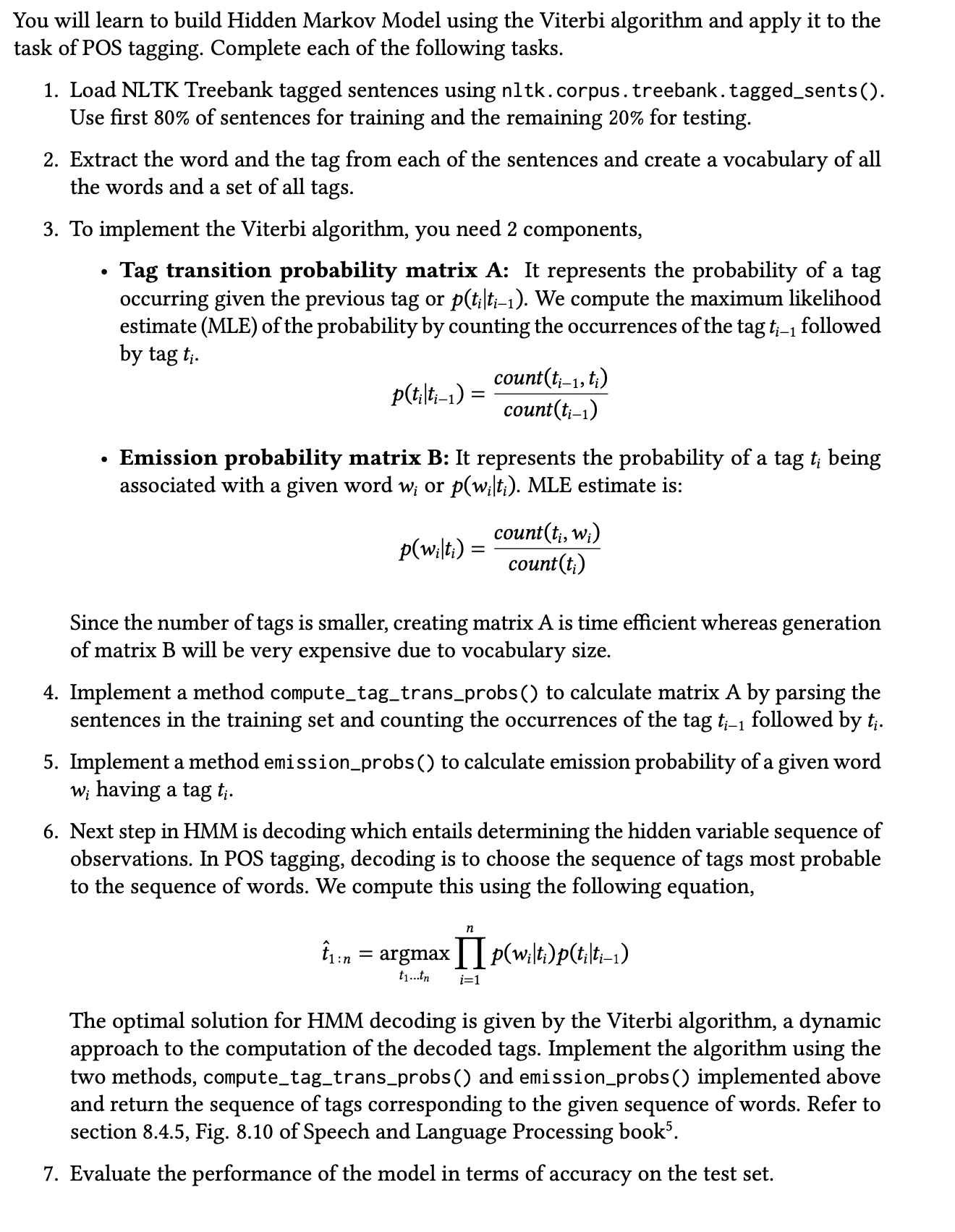

You will learn to build Hidden Markov Model using the Viterbi algorithm and apply it to the

task of POS tagging. Complete each of the following tasks.

Load NLTK Treebank tagged sentences using nltkcorpus.treebank.taggedsents

Use first of sentences for training and the remaining for testing.

Extract the word and the tag from each of the sentences and create a vocabulary of all

the words and a set of all tags.

To implement the Viterbi algorithm, you need components,

Tag transition probability matrix A: It represents the probability of a tag

occurring given the previous tag or We compute the maximum likelihood

estimate MLE of the probability by counting the occurrences of the tag followed

by

Emission probability matrix B: It represents the probability of a tag being

associated with a given word or MLE estimate is:

Since the number of tags is smaller, creating matrix is time efficient whereas generation

of matrix B will be very expensive due to vocabulary size.

Implement a method computetagtransprobs to calculate matrix by parsing the

sentences in the training set and counting the occurrences of the tag followed by

Implement a method emissionprobs to calculate emission probability of a given word

having a tag

Next step in HMM is decoding which entails determining the hidden variable sequence of

observations. In POS tagging, decoding is to choose the sequence of tags most probable

to the sequence of words. We compute this using the following equation,

hat

The optimal solution for HMM decoding is given by the Viterbi algorithm, a dynamic

approach to the computation of the decoded tags. Implement the algorithm using the

two methods, computetagtransprobs and emissionprobs implemented above

and return the sequence of tags corresponding to the given sequence of words. Refer to

section Fig. of Speech and Language Processing book

Evaluate the performance of the model in terms of accuracy on the test set.

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock