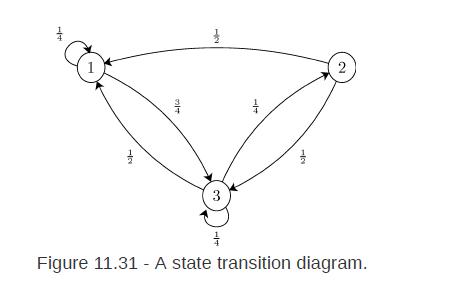

Question:

Consider the Markov chain with three states, S = {1, 2, 3}, whose state transition diagram is shown in Figure 11.31.

Suppose P(X1 = 1) = 1/2 and P(X1 = 2) = 1/4.

a. Find the state transition matrix for this chain.

b. Find P(X1 = 3, X2 = 2, X3 = 1).

c. Find P(X1 = 3, X3 = 1).

Figure 11.31 - A state transition diagram.

Step by Step Answer:

Let's go through each part of the question step by step. a. Find the state transition matrix for this chain. The state transition matrix, often denoted as ...
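The edge probabilities of Figure 11.31 are not reproduced in this text, so the following is only a sketch of how parts (b) and (c) could be computed once the matrix from part (a) has been read off the diagram. From the question, P(X1 = 3) = 1 - 1/2 - 1/4 = 1/4. By the Markov property, P(X1 = 3, X2 = 2, X3 = 1) = P(X1 = 3) p32 p21, and P(X1 = 3, X3 = 1) is the sum over k of P(X1 = 3) p3k pk1. The matrix `P_placeholder` and the helper names below are hypothetical and are not taken from the figure; substitute the real matrix before relying on any number.

```python
from fractions import Fraction as F


def path_probability(initial, P, path):
    """Return P(X1 = path[0], X2 = path[1], ...) using 1-based state labels."""
    prob = initial[path[0] - 1]
    # Multiply the one-step transition probabilities along the path.
    for a, b in zip(path, path[1:]):
        prob *= P[a - 1][b - 1]
    return prob


def two_step_joint(initial, P, start, end):
    """Return P(X1 = start, X3 = end) by summing over every value of X2."""
    n = len(P)
    return sum(path_probability(initial, P, [start, k, end])
               for k in range(1, n + 1))


# Initial distribution from the question: P(X1=1)=1/2, P(X1=2)=1/4,
# hence P(X1=3)=1/4.
initial = [F(1, 2), F(1, 4), F(1, 4)]

# ASSUMPTION: placeholder matrix, NOT read from Figure 11.31.
# Each row must sum to 1; replace with the matrix found in part (a).
P_placeholder = [
    [F(1, 4), F(3, 4), F(0)],
    [F(1, 2), F(0), F(1, 2)],
    [F(1, 4), F(1, 2), F(1, 4)],
]

if __name__ == "__main__":
    # These values describe the placeholder only, not the textbook answer.
    print(path_probability(initial, P_placeholder, [3, 2, 1]))  # 1/16
    print(two_step_joint(initial, P_placeholder, 3, 1))         # 3/32
```

Exact fractions are used instead of floats so the results can be compared directly with a hand calculation.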

Answered By

Lamya S

Related Book For

Introduction to Probability, Statistics, and Random Processes

ISBN: 9780990637202

1st Edition

Authors: Hossein Pishro-Nik
