Question: 1. (50 points) Stochastic gradient descent for MLP. Given the following training set D = {z(), y)} for a three-class classification task: 2(1) = (-0.7411,

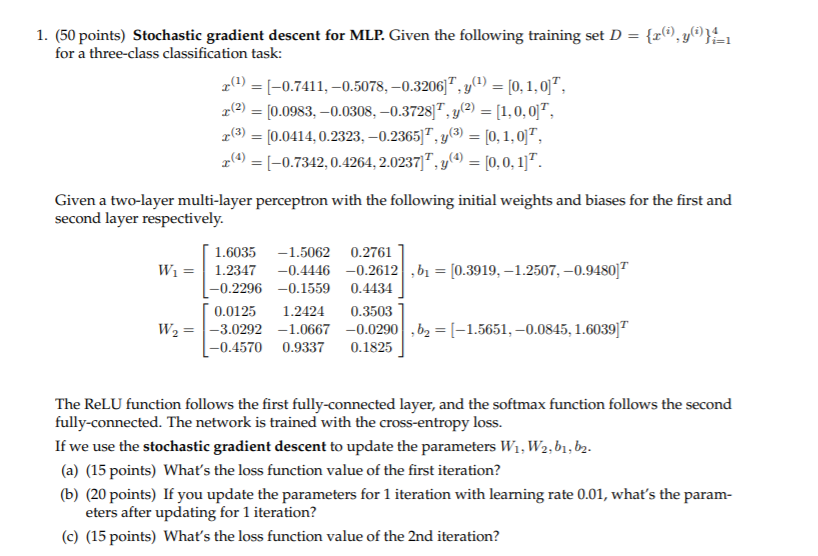

1. (50 points) Stochastic gradient descent for MLP. Given the following training set D = {z(), y)} for a three-class classification task: 2(1) = (-0.7411, -0.5078,-0.3206)",y(1) = [0,1,0, 2 (2) = [0.0983, -0.0308, -0.3728]+, y(2) = [1,0,0", 2(3) = [0.0414, 0.2323, -0.2365]", y(3) = [0,1,0, 24 = (-0.7342, 0.4264, 2.0237), y(4) = [0,0,1). Given a two-layer multi-layer perceptron with the following initial weights and biases for the first and second layer respectively. 1.6035 -1.5062 0.2761 Wi = 1.2347 -0.4446 -0.2612, 61 = [0.3919, -1.2507,-0.9480] -0.2296 -0.1559 0.4434 0.0125 1.2424 0.3503 W, = -3.0292 -1.0667 -0.0290, b2 = [-1.5651, -0.0845, 1.6039) -0.4570 0.9337 0.1825 The ReLU function follows the first fully-connected layer, and the softmax function follows the second fully-connected. The network is trained with the cross-entropy loss. If we use the stochastic gradient descent to update the parameters W1, W2,61, 62. (a) (15 points) What's the loss function value of the first iteration? (b) (20 points) If you update the parameters for 1 iteration with learning rate 0.01, what's the param- eters after updating for 1 iteration? (C) (15 points) What's the loss function value of the 2nd iteration? 1. (50 points) Stochastic gradient descent for MLP. Given the following training set D = {z(), y)} for a three-class classification task: 2(1) = (-0.7411, -0.5078,-0.3206)",y(1) = [0,1,0, 2 (2) = [0.0983, -0.0308, -0.3728]+, y(2) = [1,0,0", 2(3) = [0.0414, 0.2323, -0.2365]", y(3) = [0,1,0, 24 = (-0.7342, 0.4264, 2.0237), y(4) = [0,0,1). Given a two-layer multi-layer perceptron with the following initial weights and biases for the first and second layer respectively. 1.6035 -1.5062 0.2761 Wi = 1.2347 -0.4446 -0.2612, 61 = [0.3919, -1.2507,-0.9480] -0.2296 -0.1559 0.4434 0.0125 1.2424 0.3503 W, = -3.0292 -1.0667 -0.0290, b2 = [-1.5651, -0.0845, 1.6039) -0.4570 0.9337 0.1825 The ReLU function follows the first fully-connected layer, and the softmax function follows the second fully-connected. The network is trained with the cross-entropy loss. If we use the stochastic gradient descent to update the parameters W1, W2,61, 62. (a) (15 points) What's the loss function value of the first iteration? (b) (20 points) If you update the parameters for 1 iteration with learning rate 0.01, what's the param- eters after updating for 1 iteration? (C) (15 points) What's the loss function value of the 2nd iteration

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts