Question: 1. Multiclass classification. Consider the multiclass logistic regression optimization problem

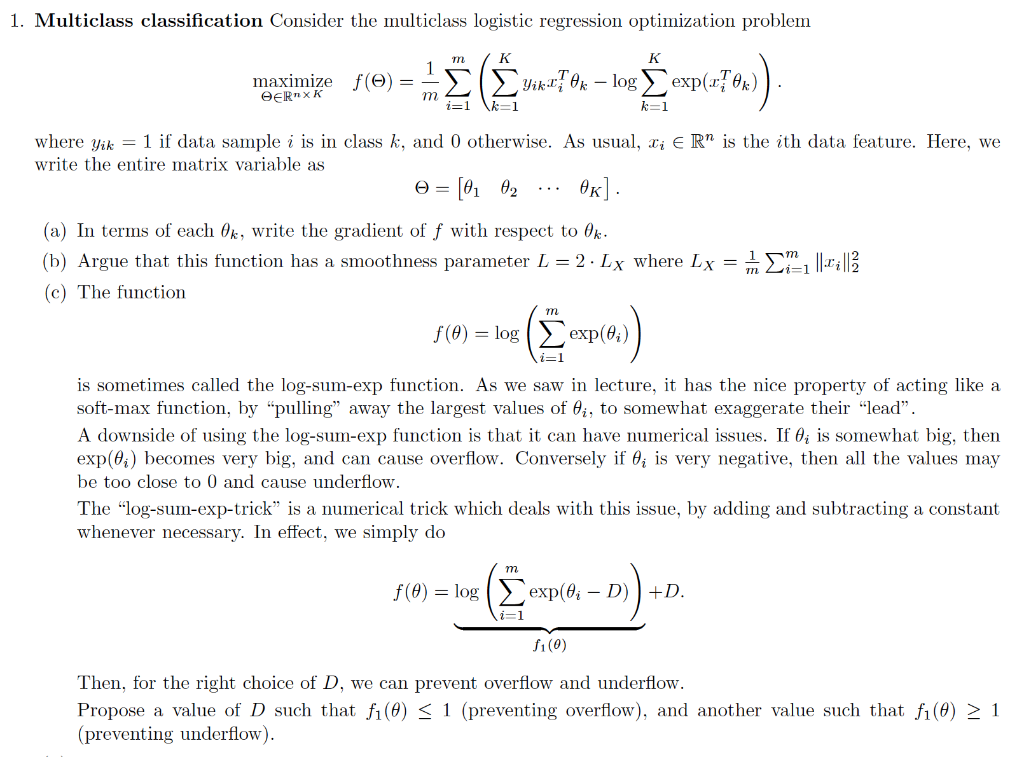

1. Multiclass classification. Consider the multiclass logistic regression optimization problem

$$\underset{\Theta \in \mathbb{R}^{n \times K}}{\text{maximize}} \quad f(\Theta) = \frac{1}{m} \sum_{i=1}^{m} \left( \sum_{k=1}^{K} y_{ik}\, x_i^T \theta_k - \log \sum_{k=1}^{K} \exp(x_i^T \theta_k) \right)$$

where $y_{ik} = 1$ if data sample $i$ is in class $k$, and 0 otherwise. As usual, $x_i \in \mathbb{R}^n$ is the $i$th data feature. Here, we write the entire matrix variable as $\Theta = [\theta_1, \theta_2, \ldots, \theta_K]$.

(a) In terms of each $\theta_k$, write the gradient of $f$ with respect to $\theta_k$.

(b) Argue that this function has a smoothness parameter $L = \sum_k L_k$, where each $L_k$ is a constant built from $m$ and the 2-norm $\|\cdot\|_2$.

(c) The function

$$f(\theta) = \log\left(\sum_{i=1}^{m} \exp(\theta_i)\right)$$

is sometimes called the log-sum-exp function. As we saw in lecture, it has the nice property of acting like a soft-max function, by "pulling away" the largest values of $\theta_i$, to somewhat exaggerate their "lead". A downside of using the log-sum-exp function is that it can have numerical issues. If $\theta_i$ is somewhat big, then $\exp(\theta_i)$ becomes very big, and can cause overflow. Conversely, if $\theta_i$ is very negative, then all the values may be too close to 0 and cause underflow. The log-sum-exp trick is a numerical trick which deals with this issue by adding and subtracting a constant whenever necessary. In effect, we simply do

$$f(\theta) = \log\Bigg(\underbrace{\sum_{i=1}^{m} \exp(\theta_i - D)}_{f_1(\theta)}\Bigg) + D.$$

Then, for the right choice of $D$, we can prevent overflow and underflow. Propose a value of $D$ such that $f_1(\theta) \ge 1$ (preventing underflow).
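The shift described in part (c) can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the function names `logsumexp_naive` and `logsumexp_shifted` are hypothetical, and the default `D = max(theta)` is an assumed, commonly used choice of shift rather than something the problem statement specifies. With that choice the largest shifted term is `exp(0) = 1`, so the sum inside the logarithm is at least 1 and every exponent is at most 0.

```python
import math


def logsumexp_naive(theta):
    """Evaluate log(sum_i exp(theta_i)) directly.

    This can fail for large or very negative entries of theta.
    """
    return math.log(sum(math.exp(t) for t in theta))


def logsumexp_shifted(theta, D=None):
    """Evaluate log(sum_i exp(theta_i - D)) + D.

    D defaults to max(theta); this default is an assumption of the sketch,
    not a value given in the problem statement.
    """
    if D is None:
        D = max(theta)
    # Every exponent theta_i - D is <= 0 when D = max(theta), so exp cannot overflow,
    # and the term with theta_i = D contributes exp(0) = 1, so the sum is >= 1.
    shifted_sum = sum(math.exp(t - D) for t in theta)
    return math.log(shifted_sum) + D
```

For moderate inputs such as `[1.0, 2.0, 3.0]` the two functions agree up to rounding. For `[1000.0, 1000.0]` the naive version raises `OverflowError` in `math.exp`, while the shifted version returns `1000 + log(2)`. For `[-1000.0, -1001.0]` the naive sum underflows to `0.0` and `math.log` raises `ValueError`, whereas the shifted version stays finite.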
