Question:

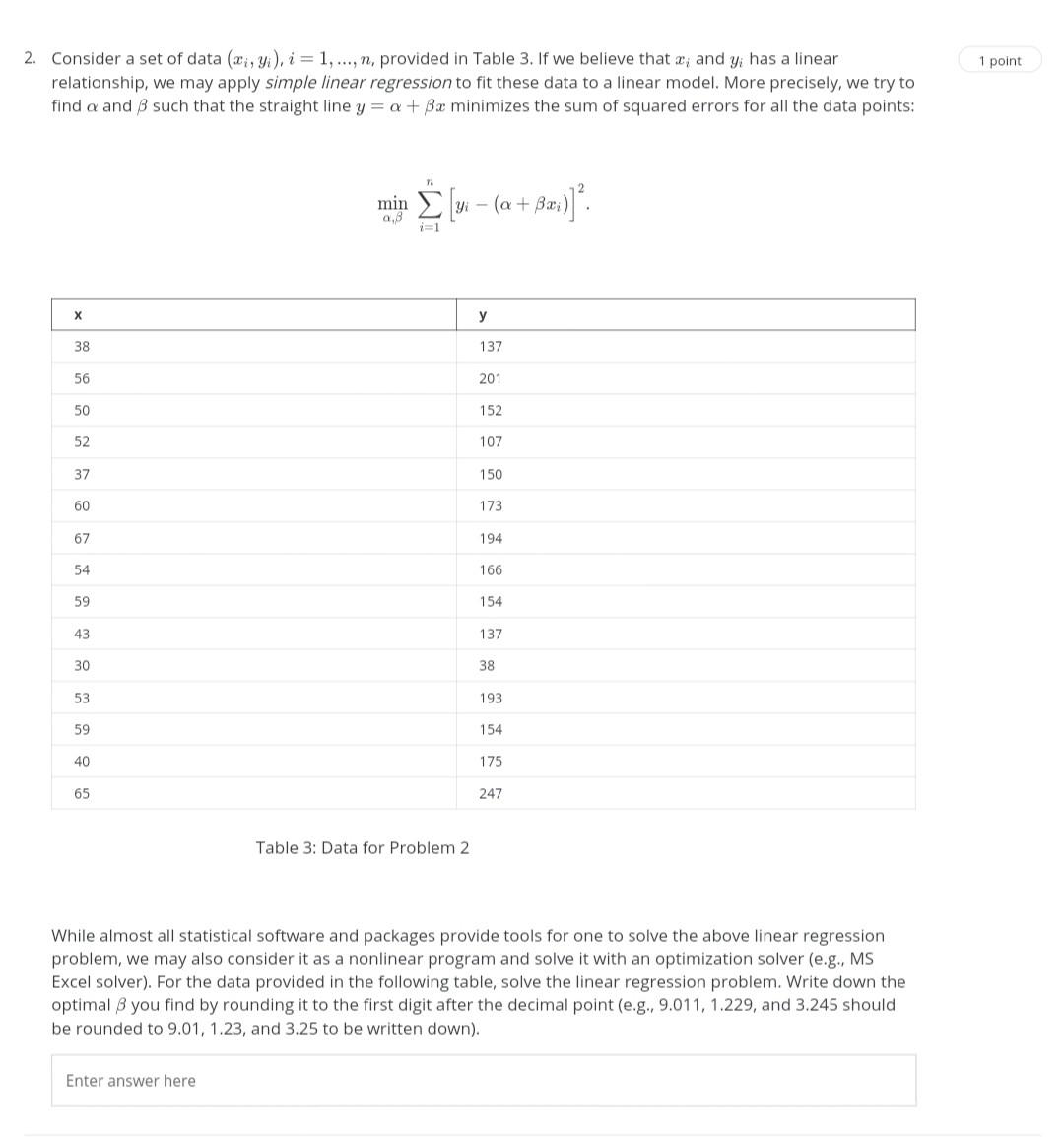

Problem 2 (1 point). Consider a set of data $(x_i, y_i)$, $i = 1, \dots, n$, provided in Table 3. If we believe that $x_i$ and $y_i$ have a linear relationship, we may apply simple linear regression to fit these data to a linear model. More precisely, we try to find $\alpha$ and $\beta$ such that the straight line $y = \alpha + \beta x$ minimizes the sum of squared errors over all the data points:

$$\min_{\alpha,\,\beta} \; \sum_{i=1}^{n} \bigl[\, y_i - (\alpha + \beta x_i) \,\bigr]^2$$

| $x$ | $y$ |
|-----|-----|
| 38 | 137 |
| 56 | 201 |
| 50 | 152 |
| 52 | 107 |
| 37 | 150 |
| 60 | 173 |
| 67 | 194 |
| 54 | 166 |
| 59 | 154 |
| 43 | 137 |
| 30 | 38 |
| 53 | 193 |
| 59 | 154 |
| 40 | 175 |
| 65 | 247 |

Table 3: Data for Problem 2

While almost all statistical software and packages provide tools to solve the above linear regression problem, we may also consider it as a nonlinear program and solve it with an optimization solver (e.g., MS Excel Solver). For the data provided in Table 3, solve the linear regression problem. Write down the optimal $\beta$ you find by rounding it to the first digit after the decimal point (e.g., 9.011, 1.229, and 3.245 should be rounded to 9.01, 1.23, and 3.25 to be written down).
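As a cross-check on whatever an optimization solver returns, the minimizer of the sum of squared errors also has a well-known closed form: $\beta = \sum_i (x_i - \bar{x})(y_i - \bar{y}) / \sum_i (x_i - \bar{x})^2$ and $\alpha = \bar{y} - \beta \bar{x}$. The sketch below applies that formula to the Table 3 data in plain Python. It is not the solver-based approach the problem suggests, and the names `data`, `fit_line`, and `sse` are illustrative only. It assumes the flattened table reads as alternating $(x, y)$ pairs.

```python
# Table 3 as (x, y) pairs; assumes the flattened table alternates x, y.
data = [
    (38, 137), (56, 201), (50, 152), (52, 107), (37, 150),
    (60, 173), (67, 194), (54, 166), (59, 154), (43, 137),
    (30, 38), (53, 193), (59, 154), (40, 175), (65, 247),
]


def fit_line(points):
    """Return (alpha, beta) minimizing the sum of squared errors."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Centered cross-product and centered sum of squares of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    beta = sxy / sxx
    alpha = mean_y - beta * mean_x
    return alpha, beta


def sse(points, alpha, beta):
    """Objective value: sum of squared errors for the line alpha + beta * x."""
    return sum((y - (alpha + beta * x)) ** 2 for x, y in points)


alpha, beta = fit_line(data)
print(f"alpha = {alpha:.4f}")
print(f"beta  = {beta:.4f}")
print(f"SSE   = {sse(data, alpha, beta):.2f}")
```

With the Table 3 data as read above, the slope comes out at approximately $\beta \approx 2.98$ and the intercept at roughly $\alpha \approx 6.87$. A solver started from any reasonable initial guess should converge to the same values, since the objective is convex in $(\alpha, \beta)$.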
