Question: 2. Batch gradient descent. Initial settings: α = 0.01, iterations: 1500. Implement the gradientDescent() function.

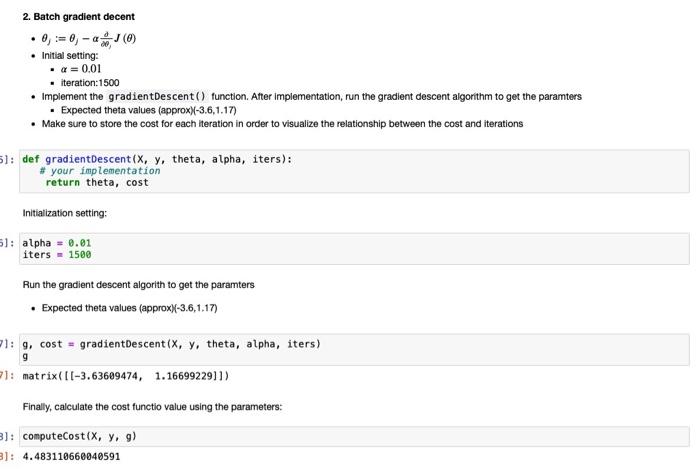

2. Batch gradient descent

Initial settings: α = 0.01, iterations: 1500.

Implement the `gradientDescent()` function. After implementation, run the gradient descent algorithm to get the parameters. Expected theta values (approx.): (-3.6, 1.17). Make sure to store the cost for each iteration in order to visualize the relationship between the cost and the iterations.

Function skeleton: `def gradientDescent(x, y, theta, alpha, iters):` with the body `# your implementation`, ending in `return theta, cost`.

Initialization setting: `alpha = 0.01`, `iters = 1500`.

Run the gradient descent algorithm to get the parameters. Expected theta values (approx.): (-3.6, 1.17).

Input: `g, cost = gradientDescent(x, y, theta, alpha, iters)`, then `g`

Output: `matrix([[-3.63689474, 1.16699229]])`

Finally, calculate the cost function value using the parameters.

Input: `computeCost(x, y, g)`

Output: `4.483110660040591`
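One possible way to fill in the skeleton is sketched below. It is a sketch under assumptions, not the notebook's reference solution. The question does not show `computeCost()` or the dataset, so the sketch assumes the usual squared-error cost J(θ) = 1/(2m) · Σ(xθᵀ − y)², a design matrix `x` whose first column is all ones, and `theta` stored as a 1×2 row (which matches the shape of the printed `matrix([[...]])` output). The data at the bottom is synthetic and only there to make the example runnable; it will not reproduce the expected theta values from the question.

```python
import numpy as np


def computeCost(x, y, theta):
    """Assumed cost: J(theta) = 1/(2m) * sum((x @ theta.T - y)^2)."""
    m = len(y)
    errors = x @ theta.T - y              # shape (m, 1)
    return float(np.sum(np.square(errors)) / (2 * m))


def gradientDescent(x, y, theta, alpha, iters):
    """Batch gradient descent: every update uses all m training examples.

    Returns the final theta and an array holding the cost after each iteration.
    """
    m = len(y)
    theta = theta.astype(float).copy()    # do not modify the caller's theta
    cost = np.zeros(iters)
    for i in range(iters):
        errors = x @ theta.T - y          # prediction error, shape (m, 1)
        gradient = (errors.T @ x) / m     # gradient of J, shape (1, n)
        theta = theta - alpha * gradient  # simultaneous update of all parameters
        cost[i] = computeCost(x, y, theta)
    return theta, cost


# Synthetic stand-in data (hypothetical; the real dataset is not in the question).
rng = np.random.default_rng(0)
feature = rng.uniform(0, 10, size=(100, 1))
x = np.hstack([np.ones((100, 1)), feature])   # prepend the intercept column
y = -3.0 + 1.2 * feature
theta = np.zeros((1, 2))

alpha = 0.01
iters = 1500
g, cost = gradientDescent(x, y, theta, alpha, iters)
```

Because `cost` holds one value per iteration, plotting it against `range(iters)` gives the cost-versus-iterations curve the question asks for. With a suitable learning rate the curve should fall steadily; a curve that rises usually means `alpha` is too large for the scale of the features.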
