Question:

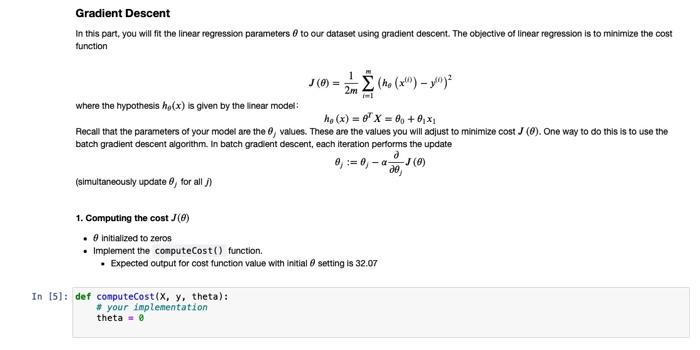

Gradient Descent

In this part, you will fit the linear regression parameters $\theta$ to our dataset using gradient descent. The objective of linear regression is to minimize the cost function

$$J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$$

where the hypothesis $h_\theta(x)$ is given by the linear model

$$h_\theta(x) = \theta^T x = \theta_0 + \theta_1 x_1$$

Recall that the parameters of your model are the $\theta_j$ values. These are the values you will adjust to minimize cost $J(\theta)$. One way to do this is to use the batch gradient descent algorithm. In batch gradient descent, each iteration performs the update

$$\theta_j := \theta_j - \alpha \frac{\partial J(\theta)}{\partial \theta_j} \quad \text{(simultaneously update } \theta_j \text{ for all } j\text{)}$$

1. Computing the cost $J(\theta)$ ($\theta$ initialized to zeros)

Implement the computeCost() function. The expected output for the cost function value with the initial setting is 32.07.

In [5]:

    def computeCost(x, y, theta):
        # your implementation
    theta
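One possible way to fill in computeCost() is sketched below with NumPy, together with a batch gradient descent loop for the update rule described in the question. Several things here are assumptions rather than part of the question: x is taken to be an m-by-2 array whose first column is all ones (the intercept term), alpha is the learning rate, and the gradientDescent name and its alpha and num_iters parameters are hypothetical. The toy data is made up for illustration, so it will not reproduce the 32.07 figure, which depends on the exercise's own dataset.

```python
import numpy as np


def computeCost(x, y, theta):
    """Squared-error cost J(theta) = 1/(2m) * sum((x @ theta - y)^2).

    Assumes x already contains a leading column of ones for the intercept.
    """
    y = np.asarray(y, dtype=float).reshape(-1)          # flatten (m, 1) to (m,)
    theta = np.asarray(theta, dtype=float).reshape(-1)  # flatten (n, 1) to (n,)
    m = y.size
    residuals = x @ theta - y                           # h_theta(x) - y per example
    return float(np.sum(residuals ** 2) / (2 * m))


def gradientDescent(x, y, theta, alpha, num_iters):
    """Batch gradient descent (hypothetical helper, not from the question).

    Each iteration updates every theta_j simultaneously using the gradient
    of J, which for this cost is x.T @ (x @ theta - y) / m.
    """
    y = np.asarray(y, dtype=float).reshape(-1)
    theta = np.asarray(theta, dtype=float).reshape(-1).copy()
    m = y.size
    for _ in range(num_iters):
        gradient = x.T @ (x @ theta - y) / m
        theta = theta - alpha * gradient                # simultaneous update
    return theta


# Toy data (made up, not the exercise's dataset): y = 2 * x exactly.
features = np.array([1.0, 2.0, 3.0])
X = np.column_stack([np.ones_like(features), features])  # prepend intercept column
y = np.array([2.0, 4.0, 6.0])

theta0 = np.zeros(2)
print("cost at theta = 0:", computeCost(X, y, theta0))

theta_fit = gradientDescent(X, y, theta0, alpha=0.1, num_iters=2000)
print("fitted theta:", theta_fit)
print("cost at fitted theta:", computeCost(X, y, theta_fit))
```

Writing the sum as a vectorized expression avoids an explicit loop over the m training examples. Computing the whole gradient before assigning to theta is what makes the update simultaneous for all j.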
