Question: 3.3 [15] Consider a multiple-issue design. Suppose you have two execu- tion pipelines, each capable of beginning execution of one instruction per cycle, and enough

![3.3 [15] Consider a multiple-issue design. Suppose you have two execu-](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f328fc57561_77166f328fb96698.jpg)

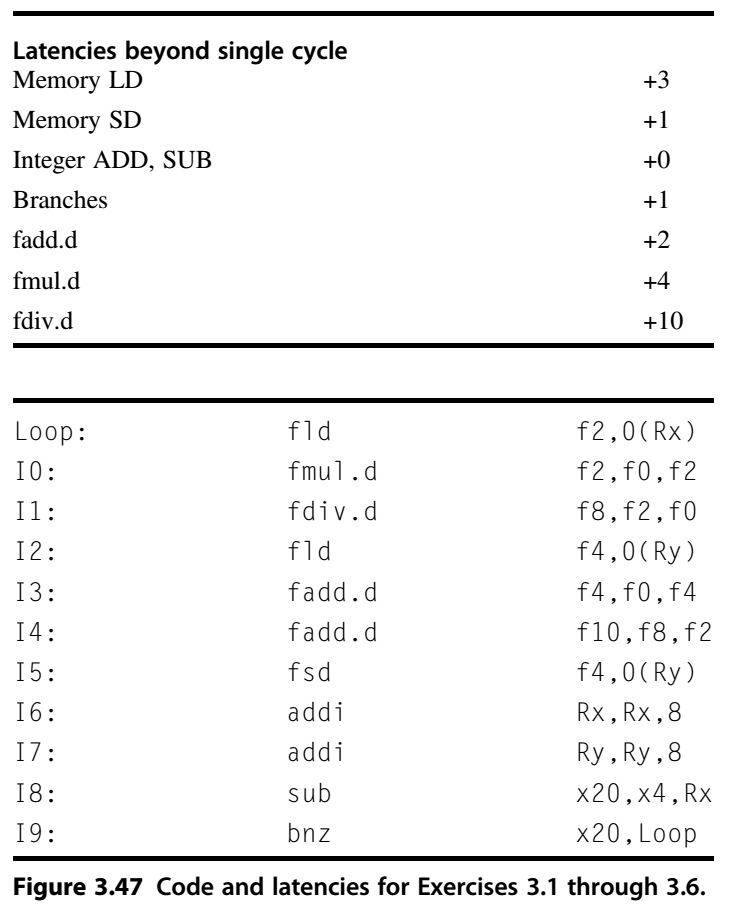

3.3 [15] Consider a multiple-issue design. Suppose you have two execu- tion pipelines, each capable of beginning execution of one instruction per cycle, and enough fetch/decode bandwidth in the front end so that it will not stall your execution. Assume results can be immediately forwarded from one execution unit to another, or to itself. Further assume that the only reason an execution pipeline would stall is to observe a true data dependency. Now how many cycles does the loop require? 3.4 [10] In the multiple-issue design of Exercise 3.3, you may have rec- ognized some subtle issues. Even though the two pipelines have the exact same instruction repertoire, they are neither identical nor interchangeable, because there is an implicit ordering between them that must reflect the ordering of the instruc- tions in the original program. If instruction N+1 begins execution in Execution Pipe 1 at the same time that instruction N begins in Pipe 0, and N+ 1 happens to require a shorter execution latency than N, then N+ 1 will complete before N (even though program ordering would have implied otherwise). Recite at least two reasons why that could be hazardous and will require special considerations in the microarchitecture. Give an example of two instructions from the code in Figure 3.47 that demonstrate this hazard. 3.5 [20] Reorder the instructions to improve performance of the code in Figure 3.47. Assume the two-pipe machine in Exercise 3.3 and that the out-of- order completion issues of Exercise 3.4 have been dealt with successfully. Just worry about observing true data dependences and functional unit latencies for now, How many cycles does your reordered code take? 3.6 [10/10/10] Every cycle that does not initiate a new operation in a pipe is a lost opportunity, in the sense that your hardware is not living up to its potential a. [10] In your reordered code from Exercise 3.5, what fraction of all cycles, counting both pipes, were wasted (did not initiate a new op)? b. [10] Loop unrolling is one standard compiler technique for finding more parallelism in code, in order to minimize the lost opportunities for perfor- mance. Hand-unroll two iterations of the loop in your reordered code from Exer cise 3.5 c. [10] What speedup did you obtain? (For this exercise, just color the N+ 1 iteration's instructions green to distinguish them from the Nth iteration's instructions; if you were actually unrolling the loop, you would have to reassign registers to prevent collisions between the iterations.) 3.3 [15] Consider a multiple-issue design. Suppose you have two execu- tion pipelines, each capable of beginning execution of one instruction per cycle, and enough fetch/decode bandwidth in the front end so that it will not stall your execution. Assume results can be immediately forwarded from one execution unit to another, or to itself. Further assume that the only reason an execution pipeline would stall is to observe a true data dependency. Now how many cycles does the loop require? 3.4 [10] In the multiple-issue design of Exercise 3.3, you may have rec- ognized some subtle issues. Even though the two pipelines have the exact same instruction repertoire, they are neither identical nor interchangeable, because there is an implicit ordering between them that must reflect the ordering of the instruc- tions in the original program. If instruction N+1 begins execution in Execution Pipe 1 at the same time that instruction N begins in Pipe 0, and N+ 1 happens to require a shorter execution latency than N, then N+ 1 will complete before N (even though program ordering would have implied otherwise). Recite at least two reasons why that could be hazardous and will require special considerations in the microarchitecture. Give an example of two instructions from the code in Figure 3.47 that demonstrate this hazard. 3.5 [20] Reorder the instructions to improve performance of the code in Figure 3.47. Assume the two-pipe machine in Exercise 3.3 and that the out-of- order completion issues of Exercise 3.4 have been dealt with successfully. Just worry about observing true data dependences and functional unit latencies for now, How many cycles does your reordered code take? 3.6 [10/10/10] Every cycle that does not initiate a new operation in a pipe is a lost opportunity, in the sense that your hardware is not living up to its potential a. [10] In your reordered code from Exercise 3.5, what fraction of all cycles, counting both pipes, were wasted (did not initiate a new op)? b. [10] Loop unrolling is one standard compiler technique for finding more parallelism in code, in order to minimize the lost opportunities for perfor- mance. Hand-unroll two iterations of the loop in your reordered code from Exer cise 3.5 c. [10] What speedup did you obtain? (For this exercise, just color the N+ 1 iteration's instructions green to distinguish them from the Nth iteration's instructions; if you were actually unrolling the loop, you would have to reassign registers to prevent collisions between the iterations.)

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts