Question 4: Ensemble Methods

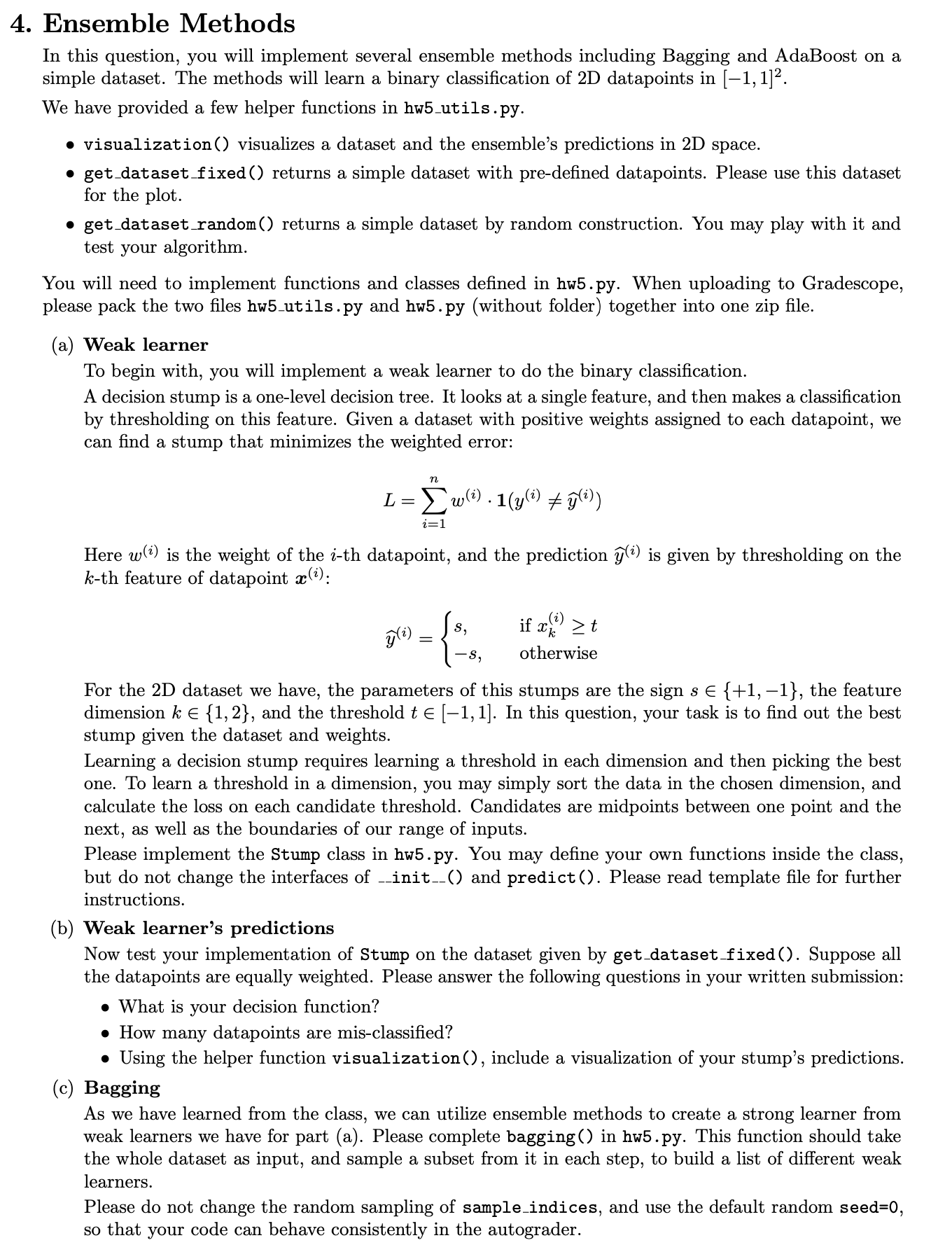

In this question, you will implement several ensemble methods, including Bagging and AdaBoost, on a simple dataset. The methods will learn a binary classification of 2D datapoints.

(a) Weak learner

To begin with, you will implement a weak learner to do the binary classification.

A decision stump is a one-level decision tree. It looks at a single feature and makes a classification by thresholding on that feature. Given a dataset with a positive weight assigned to each datapoint, we can find the stump that minimizes the weighted error:

$$L = \sum_{i=1}^{n} w_i \cdot \mathbf{1}\left[y_i \neq \widehat{y}_i\right]$$
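To make the loss concrete, here is a minimal NumPy sketch. The helper name `weighted_error` is hypothetical (it is not part of the template), and it assumes labels and predictions are arrays of +1/-1 values; it simply adds up the weights of the misclassified datapoints.

```python
import numpy as np


def weighted_error(weights, labels, predictions):
    """Weighted 0-1 loss: sum of w_i over datapoints where y_i != y_hat_i."""
    mistakes = labels != predictions  # boolean indicator 1[y_i != y_hat_i]
    return float(np.sum(weights * mistakes))


w = np.array([0.125, 0.25, 0.375, 0.25])
y = np.array([1, -1, 1, -1])
y_hat = np.array([1, 1, 1, 1])  # wrong on the second and fourth datapoints
print(weighted_error(w, y, y_hat))  # → 0.5
```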

Here $w_i$ is the weight of the $i$-th datapoint, and the prediction $\widehat{y}_i$ is given by thresholding on the $k$-th feature of datapoint $\boldsymbol{x}_i$:

$$
\widehat{y}_i = \begin{cases}
s & \text{if } x_k^i \geq t \\
-s & \text{otherwise}
\end{cases}
$$
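The thresholding rule can be written as a single vectorized expression. This is a sketch only: `stump_predict` is a hypothetical helper, and `k` is treated as a 0-based column index into a data array of shape [n, 2].

```python
import numpy as np


def stump_predict(X, k, t, s):
    """Return s where X[:, k] >= t and -s elsewhere (labels assumed to be +1/-1)."""
    return np.where(X[:, k] >= t, s, -s)


X = np.array([[0.0, 2.0], [1.0, -1.0], [3.0, 0.5]])
print(stump_predict(X, k=0, t=1.0, s=1))   # → [-1  1  1]
print(stump_predict(X, k=1, t=0.0, s=-1))  # → [-1  1 -1]
```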

For our 2D dataset, the parameters of the stump are the sign $s \in \{-1, +1\}$, the feature dimension $k$ (one of the two input dimensions), and the threshold $t$, which lies within the range of the inputs. Your task is to find the best stump given the dataset and weights.

Learning a decision stump requires learning a threshold in each dimension and then picking the best one. To learn a threshold in a dimension, you can simply sort the data along that dimension and compute the loss for each candidate threshold. The candidates are the midpoints between consecutive points, as well as the boundaries of the input range.
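A minimal sketch of the per-dimension search described above, assuming +1/-1 labels. The function `best_threshold_1d` and its `low`/`high` arguments (the boundaries of the input range, whose exact values are not given here) are hypothetical. This brute-force version spends O(n) time per candidate, so O(n^2) overall, which is fine for a small dataset.

```python
import numpy as np


def best_threshold_1d(x, labels, weights, low, high):
    """Search one feature dimension for the (threshold, sign) with minimal weighted error.

    x: shape [n] feature values; labels: +1/-1; weights: positive.
    low, high: assumed boundaries of the input range (hypothetical parameters).
    Returns (loss, threshold, sign).
    """
    xs = np.unique(x)  # sorted distinct feature values
    # Candidates: both boundaries plus midpoints between consecutive values.
    candidates = np.concatenate(([low], (xs[:-1] + xs[1:]) / 2, [high]))
    best = (np.inf, None, None)
    for t in candidates:
        above = x >= t
        for s in (1, -1):
            pred = np.where(above, s, -s)
            loss = np.sum(weights[pred != labels])
            if loss < best[0]:
                best = (loss, t, s)
    return best


x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([-1, -1, 1, 1])
loss, t, s = best_threshold_1d(x, y, np.full(4, 0.25), low=0.0, high=5.0)
print(loss, t, s)  # → 0.0 2.5 1
```

A cumulative-sum sweep over the sorted points would bring the search down to O(n log n), at the cost of slightly trickier bookkeeping.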

Please implement the `Stump` class in the provided template file. You may define your own helper functions inside the class, but do not change the interfaces of `__init__` and `predict`. Please read the template file for further instructions.

(b) Weak learner's predictions

Now test your implementation of `Stump` on the dataset given by `get_dataset_fixed()`. Assume all the datapoints are equally weighted. Please answer the following questions in your written submission:

What is your decision function?

How many datapoints are misclassified?

Using the helper function `visualization`, include a visualization of your stump's predictions.
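For the written answers, it can help to print the learned rule and count mistakes. A hedged sketch: the helpers below are hypothetical and only assume that your stump stores integer `dimension` and `sign` values and a numeric `threshold`, as the template suggests. With equal weights, the number of misclassified points is just the count of disagreements.

```python
import numpy as np


def describe_stump(dimension, threshold, sign):
    """Format the stump's decision function as a readable rule (sign must be an int)."""
    return f"y_hat = {sign:+d} if x[{dimension}] >= {threshold:g} else {-sign:+d}"


def count_misclassified(predictions, labels):
    """Number of datapoints whose prediction differs from the label."""
    return int(np.sum(predictions != labels))


print(describe_stump(0, 2.5, 1))  # → y_hat = +1 if x[0] >= 2.5 else -1
preds = np.array([1, -1, 1, 1, -1])
truth = np.array([1, 1, 1, -1, -1])
print(count_misclassified(preds, truth))  # → 2
```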

(c) Bagging

As we learned in class, ensemble methods can combine the weak learners from part (a) into a strong learner. Please complete `bagging` in the provided template file. This function takes the whole dataset as input and samples a subset of it at each step to build a list of different weak learners.

Please do not change the random sampling of `sample_indices`, and use the default random seed so that your code behaves consistently in the autograder.

```python
class Stump:
    # getting data and changing their type from tuple to array
    def __init__(self, data, labels, weights=None):
        """
        Initializes a stump (one-level decision tree) which minimizes
        a weighted error function of the input dataset.

        In this function, you will need to learn a stump using the weighted
        datapoints. Each datapoint has 2 features, whose values are bounded.
        Each datapoint has a label in {+1, -1}, and its importance
        is weighted by a positive value.

        The stump will choose one of the features, and pick the best threshold
        in that dimension, so that the weighted error is minimized.

        Arguments:
            data: An ndarray with shape [n, 2]. Feature values are bounded.
            labels: An ndarray with shape [n]. Values are +1 or -1.
            weights: An ndarray with shape [n]. The weights of each
                datapoint, all positive.
        """
        # You may choose to use the following variables as a start

        # The feature dimension which the stump will decide on
        # Either 0 or 1, since the datapoints are 2D
        self.dimension = None

        # The threshold in that dimension
        # May be midpoints between datapoints or the boundaries
        self.threshold = None

        # The predicted sign when the datapoint's feature in that dimension
        # is greater than or equal to the threshold
        # Either +1 or -1
        self.sign = None

        pass

    def predict(self, data):
        """
        Arguments:
            data: An ndarray with shape [n, 2]. Feature values are bounded.

        Returns:
            prediction: An ndarray with shape [n]. Values are +1 or -1.
        """
        pass
```
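One possible way to fill in the template, shown as a sketch rather than the reference solution. Assumptions: labels are +1/-1, uniform weights are used when `weights` is `None`, and because the exact input-range boundaries are not given here, each feature's minimum minus 1 and maximum plus 1 stand in for them. The interfaces of `__init__` and `predict` are unchanged.

```python
import numpy as np


class Stump:
    """Sketch of a weighted decision stump (one-level decision tree).

    Assumes labels are +1/-1. The true input-range boundaries are not given
    here, so min - 1 and max + 1 of each feature stand in for them (hypothetical).
    """

    def __init__(self, data, labels, weights=None):
        n, d = data.shape
        if weights is None:
            weights = np.full(n, 1.0 / n)  # equal weights when none are given
        best_loss = np.inf
        self.dimension, self.threshold, self.sign = 0, 0.0, 1
        for k in range(d):
            x = data[:, k]
            xs = np.unique(x)  # sorted distinct values in dimension k
            # Candidates: stand-in lower boundary, midpoints, stand-in upper boundary.
            candidates = np.concatenate(([xs[0] - 1.0], (xs[:-1] + xs[1:]) / 2, [xs[-1] + 1.0]))
            for t in candidates:
                above = x >= t
                for s in (1, -1):
                    loss = np.sum(weights[np.where(above, s, -s) != labels])
                    if loss < best_loss:  # strict '<' keeps the first best candidate
                        best_loss = loss
                        self.dimension, self.threshold, self.sign = k, float(t), s

    def predict(self, data):
        # s where the chosen feature is >= threshold, -s otherwise
        return np.where(data[:, self.dimension] >= self.threshold, self.sign, -self.sign)


data = np.array([[0.0, 5.0], [2.0, 6.0], [1.0, 1.0], [3.0, 2.0]])
labels = np.array([1, 1, -1, -1])
stump = Stump(data, labels)
print(stump.dimension, stump.threshold, stump.sign)  # → 1 3.5 1
```

Here only the second feature separates the classes, so the stump picks dimension 1 with the midpoint 3.5 between the values 2 and 5.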

```python
import numpy as np


def bagging(data, labels, n_classifiers, n_samples, seed):
    """
    Arguments:
        data: An ndarray with shape [n, 2]. Feature values are bounded.
        labels: An ndarray with shape [n]. Values are +1 or -1.
        n_classifiers: Number of classifiers to construct.
        n_samples: Number of samples to train each classifier.
        seed: Random seed for NumPy.

    Returns:
        classifiers: A list of classifiers.
    """
    classifiers = []
    n = data.shape[0]
    for i in range(n_classifiers):
        np.random.seed(seed + i)
        sample_indices = np.random.choice(n, size=n_samples, replace=False)
        # complete the rest of the loop
        pass
```
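A sketch of how the bagging loop might be completed, plus an unweighted majority vote for combining the ensemble. The sampling lines mirror the template, including the assumed `seed + i` reseeding. `bagging_sketch`, `majority_vote`, and the stand-in `MajorityLearner` are hypothetical; in the assignment you would pass your `Stump` class as `learner`.

```python
import numpy as np


class MajorityLearner:
    """Hypothetical stand-in weak learner with the same interface as Stump:
    it always predicts the majority label of its training subset (ties -> +1)."""

    def __init__(self, data, labels, weights=None):
        self.label = 1 if np.sum(labels) >= 0 else -1

    def predict(self, data):
        return np.full(data.shape[0], self.label)


def bagging_sketch(data, labels, n_classifiers, n_samples, seed, learner=MajorityLearner):
    classifiers = []
    n = data.shape[0]
    for i in range(n_classifiers):
        np.random.seed(seed + i)  # assumed per-classifier seeding, as in the template
        sample_indices = np.random.choice(n, size=n_samples, replace=False)
        # Train one weak learner on the sampled subset (equal weights).
        classifiers.append(learner(data[sample_indices], labels[sample_indices]))
    return classifiers


def majority_vote(classifiers, data):
    """Combine the ensemble by an unweighted vote; ties are broken toward +1."""
    votes = np.sum([clf.predict(data) for clf in classifiers], axis=0)
    return np.where(votes >= 0, 1, -1)


data = np.arange(12, dtype=float).reshape(6, 2)
labels = np.array([1, 1, 1, 1, -1, -1])
ensemble = bagging_sketch(data, labels, n_classifiers=5, n_samples=4, seed=0)
print(majority_vote(ensemble, data).tolist())  # → [1, 1, 1, 1, 1, 1]
```

Because each subset is drawn without replacement (`replace=False`), `n_samples` must not exceed `n`.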

```python
def adaboost(data, labels, n_classifiers):
    """
    Arguments:
        data: An ndarray with shape [n, 2]. Feature values are bounded.
        labels: An ndarray with shape [n]. Values are +1 or -1.
        n_classifiers: Number of classifiers to construct.

    Returns:
        classifiers: A list of classifiers.
        weights: A list of ...
    """
    pass
```
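The `adaboost` template is cut off in the source, so the following is a sketch of standard discrete AdaBoost rather than the assignment's required interface. It assumes +1/-1 labels and that the returned `weights` are the per-classifier vote weights (alpha). `fit_weighted_stump`, `stump_predict`, `adaboost_sketch`, and `adaboost_predict` are hypothetical helpers, and stumps are stored as `(dimension, threshold, sign)` tuples instead of `Stump` objects to keep the block self-contained.

```python
import numpy as np


def fit_weighted_stump(data, labels, weights):
    """Brute-force weighted stump search; returns (dimension, threshold, sign)."""
    best = (np.inf, 0, 0.0, 1)
    for k in range(data.shape[1]):
        xs = np.unique(data[:, k])  # sorted distinct values in dimension k
        # Candidates: lowest value (all points above), midpoints, max + 1 (none above).
        candidates = np.concatenate(([xs[0]], (xs[:-1] + xs[1:]) / 2, [xs[-1] + 1.0]))
        for t in candidates:
            for s in (1, -1):
                pred = np.where(data[:, k] >= t, s, -s)
                loss = np.sum(weights[pred != labels])
                if loss < best[0]:
                    best = (loss, k, float(t), s)
    return best[1:]


def stump_predict(data, k, t, s):
    return np.where(data[:, k] >= t, s, -s)


def adaboost_sketch(data, labels, n_classifiers):
    n = data.shape[0]
    w = np.full(n, 1.0 / n)  # start from uniform datapoint weights
    classifiers, alphas = [], []
    for _ in range(n_classifiers):
        k, t, s = fit_weighted_stump(data, labels, w)
        pred = stump_predict(data, k, t, s)
        err = np.sum(w[pred != labels]) / np.sum(w)
        err = np.clip(err, 1e-10, 1 - 1e-10)  # avoid log(0) and division by zero
        alpha = 0.5 * np.log((1 - err) / err)
        w = w * np.exp(-alpha * labels * pred)  # upweight mistakes, downweight hits
        w = w / np.sum(w)
        classifiers.append((k, t, s))
        alphas.append(float(alpha))
    return classifiers, alphas


def adaboost_predict(classifiers, alphas, data):
    """Sign of the alpha-weighted vote (ties go to +1)."""
    score = sum(a * stump_predict(data, k, t, s) for (k, t, s), a in zip(classifiers, alphas))
    return np.where(score >= 0, 1, -1)


X = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]])
y = np.array([1, -1, 1])  # no single stump classifies all three points correctly
clfs, alphas = adaboost_sketch(X, y, n_classifiers=3)
print(adaboost_predict(clfs, alphas, X).tolist())  # → [1, -1, 1]
```

On this toy set each individual stump misclassifies at least one point, but after three rounds the weighted vote fits all three, which is the behavior boosting is meant to show.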
