Question:

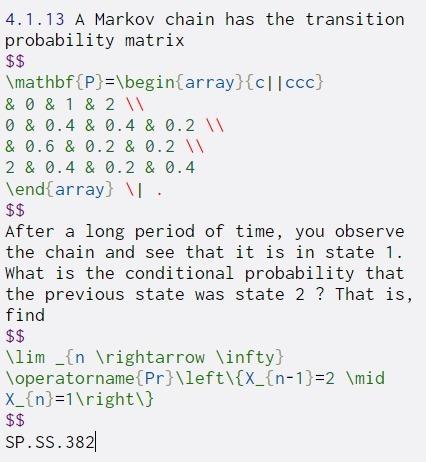

4.1.13 A Markov chain has the transition probability matrix

$$
\mathbf{P}=\begin{array}{c|ccc}
 & 0 & 1 & 2 \\
\hline
0 & 0.4 & 0.4 & 0.2 \\
1 & 0.6 & 0.2 & 0.2 \\
2 & 0.4 & 0.2 & 0.4
\end{array} .
$$

After a long period of time, you observe the chain and see that it is in state 1. What is the conditional probability that the previous state was state 2? That is, find

$$
\lim _{n \rightarrow \infty} \operatorname{Pr}\left\{X_{n-1}=2 \mid X_{n}=1\right\} .
$$

Step by Step Solution
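
The page does not include the worked steps, so what follows is only a sketch of one standard approach, not the site's own solution. Every entry of $\mathbf{P}$ is positive, so the chain is regular and has a limiting distribution $\pi$ satisfying $\pi = \pi\mathbf{P}$. Bayes' rule then gives

$$
\lim _{n \rightarrow \infty} \operatorname{Pr}\left\{X_{n-1}=2 \mid X_{n}=1\right\}=\frac{\pi_{2} P_{21}}{\pi_{1}} .
$$

Solving $\pi = \pi\mathbf{P}$ by hand gives $\pi = (11/24,\ 7/24,\ 1/4)$, so the limit is $(1/4)(0.2)/(7/24) = 6/35 \approx 0.1714$. The Python check below approximates $\pi$ by repeatedly applying $\mathbf{P}$ to a uniform starting distribution. The function names `stationary` and `previous_state_probability` and the iteration count are illustrative assumptions, not part of the original problem.

```python
# Transition matrix from the problem statement: P[i][j] = Pr{X_{n+1} = j | X_n = i}.
P = [
    [0.4, 0.4, 0.2],
    [0.6, 0.2, 0.2],
    [0.4, 0.2, 0.4],
]


def stationary(P, iterations=200):
    """Approximate pi = pi P by repeatedly applying P to a uniform start.

    The iteration count is an arbitrary choice; this chain converges quickly.
    """
    k = len(P)
    pi = [1.0 / k] * k
    for _ in range(iterations):
        # One step of the chain: new pi[j] = sum over i of pi[i] * P[i][j].
        pi = [sum(pi[i] * P[i][j] for i in range(k)) for j in range(k)]
    return pi


def previous_state_probability(P, pi, prev, current):
    """Long-run Pr{X_{n-1} = prev | X_n = current} via Bayes' rule."""
    return pi[prev] * P[prev][current] / pi[current]


pi = stationary(P)
answer = previous_state_probability(P, pi, prev=2, current=1)
print(pi)
print(answer)
```

The printed value should agree with the hand computation of $6/35$ up to floating-point error.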
