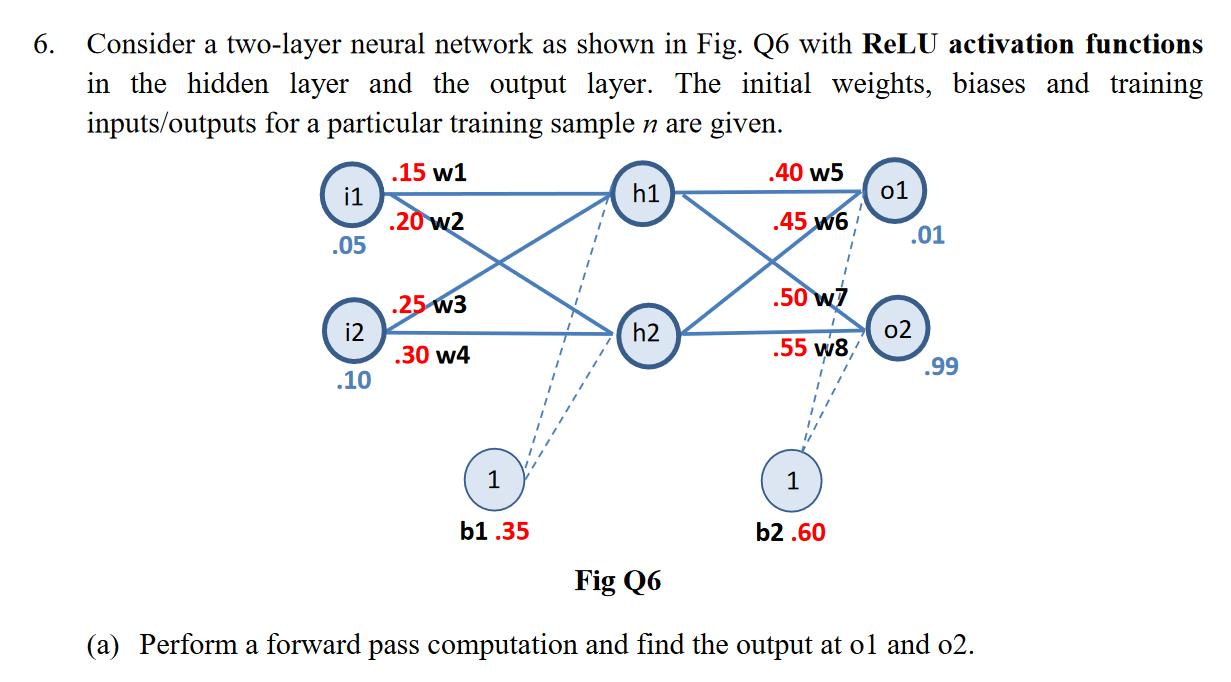

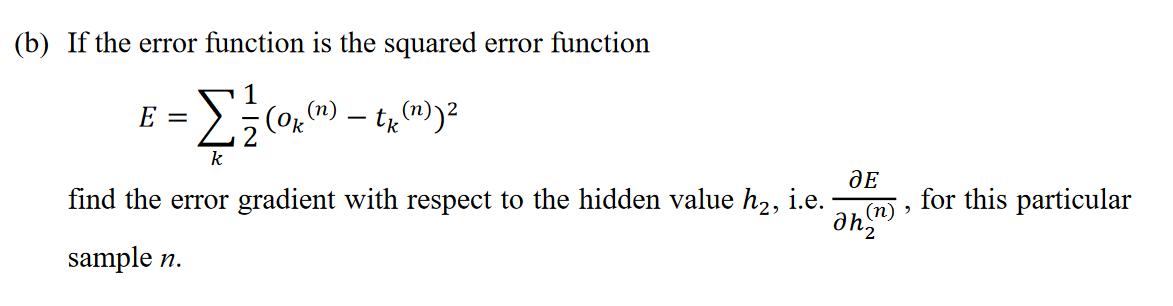

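6. Consider a two-layer neural network as shown in Fig. Q6 with ReLU activation functions in the hidden layer and the output layer. The initial weights, biases and training inputs/outputs for a particular training sample $n$ are given.

Values shown in Fig. Q6: inputs $i_1 = 0.05$, $i_2 = 0.10$; weights $w_1 = 0.15$, $w_2 = 0.20$, $w_3 = 0.25$, $w_4 = 0.30$, $w_5 = 0.40$, $w_6 = 0.45$, $w_7 = 0.50$, $w_8 = 0.55$; bias units with constant input 1 and weights $b_1 = 0.35$ (hidden layer) and $b_2 = 0.60$ (output layer); target outputs $t_1 = 0.01$ at $o_1$ and $t_2 = 0.99$ at $o_2$.

(a) Perform a forward pass computation and find the outputs at $o_1$ and $o_2$.

(b) If the error function is the squared error function

$$E(n) = \frac{1}{2}\sum_k \big(o_k(n) - t_k(n)\big)^2,$$

find the error gradient with respect to the hidden value $h_1$, i.e. $\dfrac{\partial E(n)}{\partial h_1}$, for this particular sample $n$.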

Step by Step Solution
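
A worked version of both parts. Fig. Q6 is not reproduced here, so this assumes the wiring of the well-known version of this example: $w_1, w_2$ connect $i_1, i_2$ to $h_1$; $w_3, w_4$ connect $i_1, i_2$ to $h_2$; $w_5, w_6$ connect $h_1, h_2$ to $o_1$; $w_7, w_8$ connect $h_1, h_2$ to $o_2$; $b_1$ is shared by both hidden units and $b_2$ by both outputs. For (a), every pre-activation is positive, so each ReLU passes its input through unchanged:

$$
\begin{aligned}
\text{net}_{h_1} &= w_1 i_1 + w_2 i_2 + b_1 = 0.15(0.05) + 0.20(0.10) + 0.35 = 0.3775, &\quad h_1 &= \operatorname{ReLU}(0.3775) = 0.3775 \\
\text{net}_{h_2} &= w_3 i_1 + w_4 i_2 + b_1 = 0.25(0.05) + 0.30(0.10) + 0.35 = 0.3925, &\quad h_2 &= 0.3925 \\
\text{net}_{o_1} &= w_5 h_1 + w_6 h_2 + b_2 = 0.40(0.3775) + 0.45(0.3925) + 0.60 = 0.927625, &\quad o_1 &= 0.927625 \\
\text{net}_{o_2} &= w_7 h_1 + w_8 h_2 + b_2 = 0.50(0.3775) + 0.55(0.3925) + 0.60 = 1.004625, &\quad o_2 &= 1.004625
\end{aligned}
$$

For (b), with $E(n) = \tfrac{1}{2}\sum_k (o_k - t_k)^2$, the chain rule runs through both outputs that $h_1$ feeds. Since $\text{net}_{o_1} > 0$ and $\text{net}_{o_2} > 0$, $\operatorname{ReLU}'(\text{net}_{o_k}) = 1$:

$$
\frac{\partial E}{\partial h_1}
= \sum_k (o_k - t_k)\,\operatorname{ReLU}'(\text{net}_{o_k})\,\frac{\partial\,\text{net}_{o_k}}{\partial h_1}
= (o_1 - t_1)\,w_5 + (o_2 - t_2)\,w_7
= 0.917625(0.40) + 0.014625(0.50) = 0.3743625
$$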

To perform a forward pass computation and find the outputs at $o_1$ and $o_2$ in the given two-layer neural ...
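
A short Python sketch that reproduces both parts numerically. It assumes the wiring of the well-known version of this example (figure not reproduced here): $w_1, w_2$ into $h_1$, $w_3, w_4$ into $h_2$, $w_5, w_6$ into $o_1$, $w_7, w_8$ into $o_2$, with one shared bias per layer. It also assumes the $\tfrac{1}{2}$ factor in $E$. Variable names are illustrative.

```python
# Sketch of Fig. Q6: 2 inputs -> 2 ReLU hidden units -> 2 ReLU outputs.
# ASSUMED wiring (figure not reproduced): w1,w2: i1,i2 -> h1; w3,w4: i1,i2 -> h2;
# w5,w6: h1,h2 -> o1; w7,w8: h1,h2 -> o2; b1 shared by hidden units, b2 by outputs.


def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # Derivative of ReLU; taken as 0 at x == 0 by convention.
    return 1.0 if x > 0 else 0.0


# Values read from Fig. Q6
i1, i2 = 0.05, 0.10
w1, w2, w3, w4 = 0.15, 0.20, 0.25, 0.30
w5, w6, w7, w8 = 0.40, 0.45, 0.50, 0.55
b1, b2 = 0.35, 0.60
t1, t2 = 0.01, 0.99

# (a) Forward pass: weighted sum plus bias, then ReLU, layer by layer
net_h1 = w1 * i1 + w2 * i2 + b1
net_h2 = w3 * i1 + w4 * i2 + b1
h1, h2 = relu(net_h1), relu(net_h2)

net_o1 = w5 * h1 + w6 * h2 + b2
net_o2 = w7 * h1 + w8 * h2 + b2
o1, o2 = relu(net_o1), relu(net_o2)

# Squared error with the assumed 1/2 factor: E = 1/2 * sum_k (o_k - t_k)^2
E = 0.5 * ((o1 - t1) ** 2 + (o2 - t2) ** 2)

# (b) dE/do_k = (o_k - t_k); multiply by the output ReLU derivative to get
# the output deltas, then by the weight linking each hidden unit to o_k.
delta_o1 = (o1 - t1) * relu_grad(net_o1)
delta_o2 = (o2 - t2) * relu_grad(net_o2)
dE_dh1 = delta_o1 * w5 + delta_o2 * w7
dE_dh2 = delta_o1 * w6 + delta_o2 * w8

print(f"o1 = {o1:.6f}, o2 = {o2:.6f}, E = {E:.6f}")
print(f"dE/dh1 = {dE_dh1:.7f}, dE/dh2 = {dE_dh2:.6f}")
```

Running it should give $o_1 \approx 0.9276$, $o_2 \approx 1.0046$ and $\partial E/\partial h_1 \approx 0.3744$. Because both output pre-activations are positive, each output ReLU derivative is 1, so the gradient reduces to $(o_1 - t_1)w_5 + (o_2 - t_2)w_7$; $\partial E/\partial h_2$ is computed as well in case the question intends the other hidden unit.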

Get step-by-step solutions from verified subject matter experts