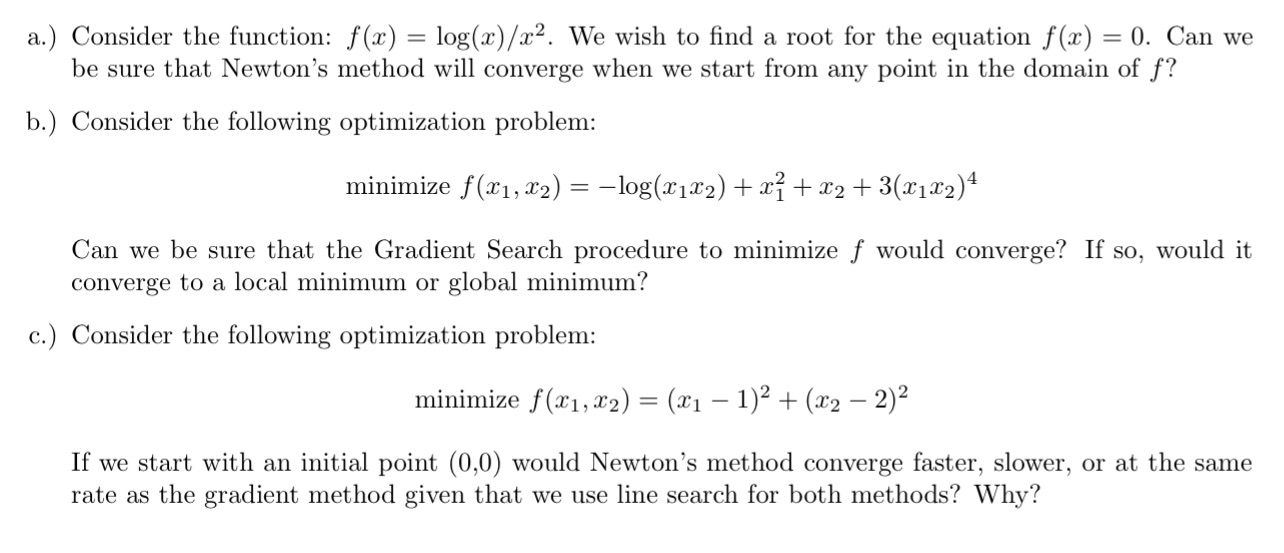

Question:

a.) Consider the function $f(x) = \log(x)/x^2$. We wish to find a root of the equation $f(x) = 0$. Can we be sure that Newton's method will converge when we start from any point in the domain of $f$?

b.) Consider the following optimization problem: minimize $f(x_1, x_2) =$ log(:r1;r2) + 22% + 332 + 3(zr1332)4. Can we be sure that the Gradient Search procedure to minimize $f$ would converge? If so, would it converge to a local minimum or a global minimum?

c.) Consider the following optimization problem: minimize $f(x_1, x_2) = (x_1 - 1)^2 + (x_2 - 2)^2$. If we start from the initial point $(0, 0)$, would Newton's method converge faster than, slower than, or at the same rate as the gradient method, given that we use line search for both methods? Why?
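
For part a.), a small numerical experiment is a useful sanity check. The sketch below assumes `log` is the natural logarithm, so that $f'(x) = (1 - 2\log x)/x^3$, and runs plain Newton iterations $x_{k+1} = x_k - f(x_k)/f'(x_k)$ from a few starting points. The function names, tolerance, and iteration cap are illustrative choices, not part of the question.

```python
import math


def f(x: float) -> float:
    """f(x) = log(x) / x**2, defined for x > 0 (natural log assumed)."""
    return math.log(x) / x**2


def fprime(x: float) -> float:
    """Derivative f'(x) = (1 - 2*log(x)) / x**3."""
    return (1.0 - 2.0 * math.log(x)) / x**3


def newton(x0: float, tol: float = 1e-12, max_iter: int = 100):
    """Run Newton's method from x0.

    Returns (status, x), where status is one of
    'converged', 'left_domain', 'zero_derivative', or 'max_iter'.
    """
    x = x0
    for _ in range(max_iter):
        if x <= 0.0:
            # log(x) is undefined here, so the iteration cannot continue
            return "left_domain", x
        d = fprime(x)
        if d == 0.0:
            # Newton step is undefined where f'(x) = 0 (x = sqrt(e))
            return "zero_derivative", x
        x_new = x - f(x) / d
        if abs(x_new - x) < tol:
            return "converged", x_new
        x = x_new
    return "max_iter", x


for start in (0.5, 1.5, 2.0):
    print(start, newton(start))
```

In this sketch a start at 0.5 converges to the root $x = 1$, a start at 1.5 jumps to a negative value outside the domain, and a start at 2.0 hits the iteration cap with ever larger iterates. Since $f'(x) = 0$ at $x = \sqrt{e}$ and each Newton step moves away from the root for $x > \sqrt{e}$, convergence from an arbitrary point of the domain is not guaranteed.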

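For part c.), the sketch below compares the two methods from $(0, 0)$, assuming exact line search along each search direction (the question says only "line search"). The helper names are hypothetical. Because the Hessian of $f$ is the constant matrix $H = 2I$, the exact step along a direction $d$ has the closed form $\alpha = -\nabla f(x)^\top d / (2\, d^\top d)$.

```python
import math
from typing import Callable, Tuple

Vec = Tuple[float, float]


def grad(x: Vec) -> Vec:
    """Gradient of f(x1, x2) = (x1 - 1)**2 + (x2 - 2)**2."""
    return (2.0 * (x[0] - 1.0), 2.0 * (x[1] - 2.0))


def steepest_descent_dir(x: Vec) -> Vec:
    """Gradient-method direction d = -grad f(x)."""
    g = grad(x)
    return (-g[0], -g[1])


def newton_dir(x: Vec) -> Vec:
    """Newton direction d = -H^{-1} grad f(x), with Hessian H = 2I."""
    g = grad(x)
    return (-g[0] / 2.0, -g[1] / 2.0)


def exact_line_search_step(x: Vec, d: Vec) -> Vec:
    """Move to the minimizer of f(x + alpha*d) over alpha.

    For this f (Hessian 2I): alpha = -(g . d) / (2 * d . d).
    """
    g = grad(x)
    dd = d[0] * d[0] + d[1] * d[1]
    if dd == 0.0:
        return x
    alpha = -(g[0] * d[0] + g[1] * d[1]) / (2.0 * dd)
    return (x[0] + alpha * d[0], x[1] + alpha * d[1])


def minimize(direction: Callable[[Vec], Vec], x0: Vec,
             tol: float = 1e-12, max_iter: int = 100) -> Tuple[Vec, int]:
    """Iterate until the gradient norm is below tol; return (x, iterations)."""
    x = x0
    for k in range(max_iter):
        g = grad(x)
        if math.hypot(g[0], g[1]) < tol:
            return x, k
        x = exact_line_search_step(x, direction(x))
    return x, max_iter


print(minimize(steepest_descent_dir, (0.0, 0.0)))  # ((1.0, 2.0), 1)
print(minimize(newton_dir, (0.0, 0.0)))            # ((1.0, 2.0), 1)
```

Both methods reach the minimizer $(1, 2)$ in a single iteration. Since $H$ is a multiple of the identity, $-\nabla f(x)$ is parallel to the Newton direction $-H^{-1}\nabla f(x)$ at every point, so with exact line search the two methods take the same step and converge at the same rate on this problem.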