Question:

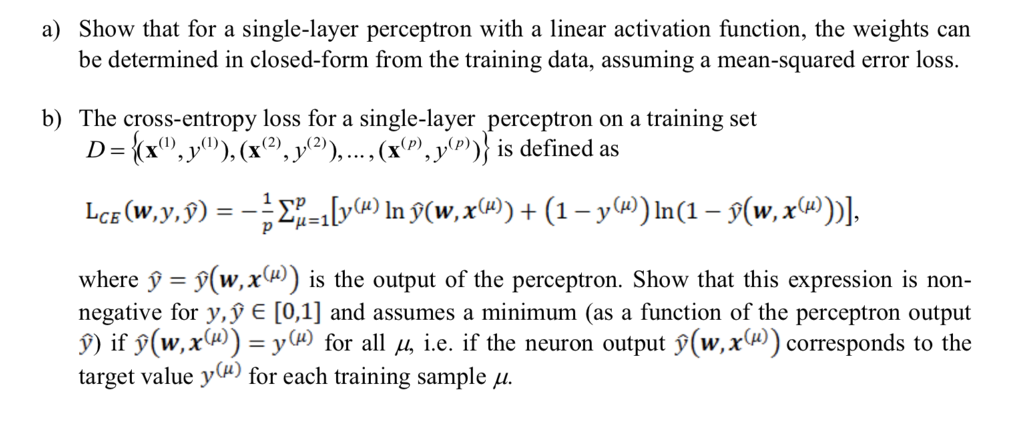

a) Show that for a single-layer perceptron with a linear activation function, the weights can be determined in closed form from the training data, assuming a mean-squared error loss.

b) The cross-entropy loss for a single-layer perceptron on a training set $D = \{(x^{(1)}, y^{(1)}), (x^{(2)}, y^{(2)}), \dots, (x^{(P)}, y^{(P)})\}$ is defined as

$$H(w) = -\sum_{\mu=1}^{P} \Big[ y^{(\mu)} \log \hat y(w, x^{(\mu)}) + \big(1 - y^{(\mu)}\big) \log\big(1 - \hat y(w, x^{(\mu)})\big) \Big],$$

where $\hat y(w, x^{(\mu)})$ is the output of the perceptron. Show that this expression is non-negative for $y^{(\mu)} \in [0,1]$ and assumes a minimum (as a function of the perceptron output $\hat y$) if $\hat y(w, x^{(\mu)}) = y^{(\mu)}$ for all $\mu$, i.e. if the neuron output $\hat y(w, x^{(\mu)})$ corresponds to the target value $y^{(\mu)}$ for each training sample $\mu$.
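A minimal sketch of both parts, assuming a linear output $\hat y = w \cdot x$ with any threshold absorbed as an extra input component fixed at 1 (an assumption, not stated in the question), and assuming the standard binary cross-entropy $H = -\sum_{\mu}\big[y^{(\mu)}\log\hat y^{(\mu)} + (1-y^{(\mu)})\log(1-\hat y^{(\mu)})\big]$. For part a), setting the gradient of $\frac{1}{2}\sum_\mu \big(y^{(\mu)} - w\cdot x^{(\mu)}\big)^2$ to zero gives the normal equations $X^\top X\, w = X^\top y$, where the rows of $X$ are the inputs $x^{(\mu)}$, so $w = (X^\top X)^{-1} X^\top y$ whenever $X^\top X$ is invertible. For part b), each term $-y\log\hat y - (1-y)\log(1-\hat y)$ has weights $y, 1-y \ge 0$ and logarithms $\le 0$ for $\hat y \in (0,1)$, so $H \ge 0$. The derivative $-y/\hat y + (1-y)/(1-\hat y)$ vanishes only at $\hat y = y$ and the second derivative $y/\hat y^2 + (1-y)/(1-\hat y)^2$ is positive, so that point is a minimum (for $y \in \{0,1\}$ the minimum is approached as $\hat y \to y$). The Python below checks both claims numerically on hypothetical toy data; the helper names are illustrative, and plain Gaussian elimination stands in for a library solver so the block needs only the standard library.

```python
import math


def solve_linear_system(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Augmented matrix built from copies so the caller's lists are untouched.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular: no unique closed-form solution")
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate entries below the pivot.
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    w = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = m[i][n] - sum(m[i][j] * w[j] for j in range(i + 1, n))
        w[i] = s / m[i][i]
    return w


def closed_form_weights(xs, ys):
    """Part a): solve the normal equations X^T X w = X^T y for y_hat = w . x."""
    n = len(xs[0])
    xtx = [[sum(x[i] * x[j] for x in xs) for j in range(n)] for i in range(n)]
    xty = [sum(x[i] * y for x, y in zip(xs, ys)) for i in range(n)]
    return solve_linear_system(xtx, xty)


def cross_entropy(y_hats, ys):
    """Part b): H = -sum_mu [y log y_hat + (1 - y) log(1 - y_hat)], y_hat in (0, 1)."""
    return -sum(y * math.log(yh) + (1 - y) * math.log(1 - yh)
                for yh, y in zip(y_hats, ys))


if __name__ == "__main__":
    # Hypothetical toy data: the second component is a constant 1 acting as a bias input.
    xs = [[0.0, 1.0], [1.0, 1.0], [2.0, 1.0], [3.0, 1.0]]
    ys = [1.0, 3.0, 5.0, 7.0]  # lies exactly on y = 2 * x + 1
    print(closed_form_weights(xs, ys))

    # Scan y_hat over (0, 1) for a single target y = 0.3 and report the minimizer.
    grid = [k / 100 for k in range(1, 100)]
    print(min(grid, key=lambda yh: cross_entropy([yh], [0.3])))
```

For the toy data, which lie exactly on $y = 2x + 1$, the closed-form weights come out as approximately `[2.0, 1.0]`, and the grid scan selects $\hat y = 0.3$ for a target of $0.3$, matching the analytic minimum.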
