Question: Answer problem 3. 1. Consider the following one hidden layer neural network with $2k$ hidden units. The network parameters are $W \in \mathbb{R}^{2k \times d}$ and $v$

answer problem 3

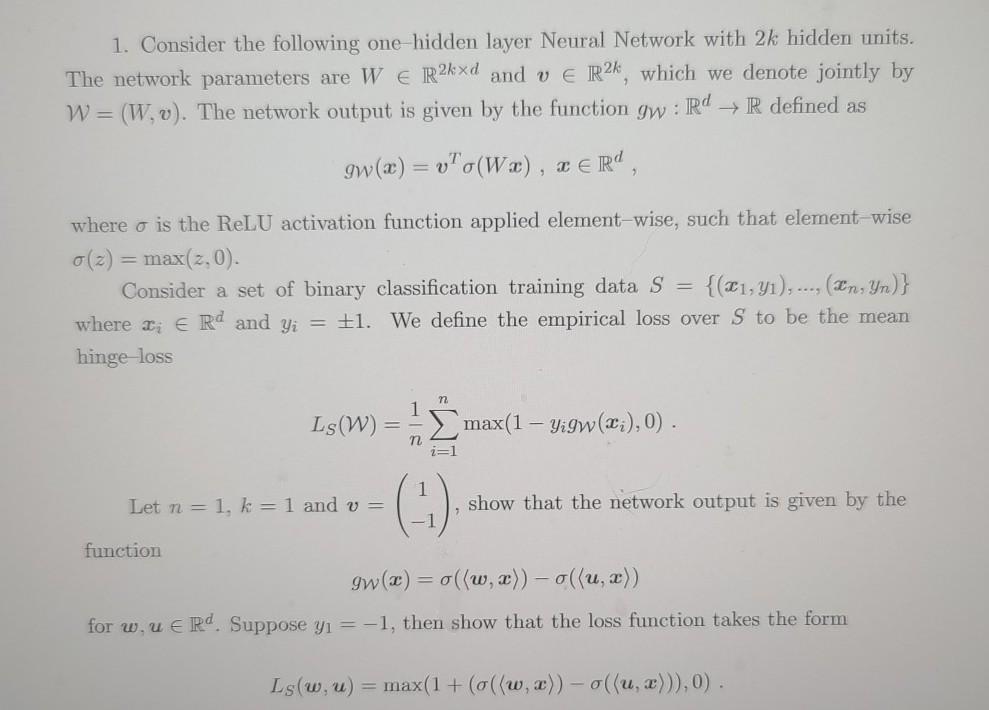

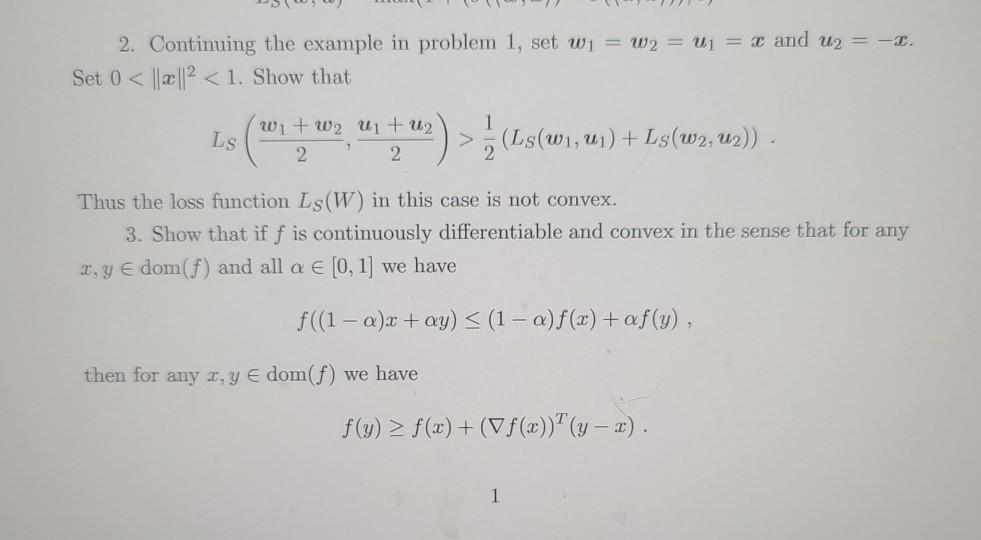

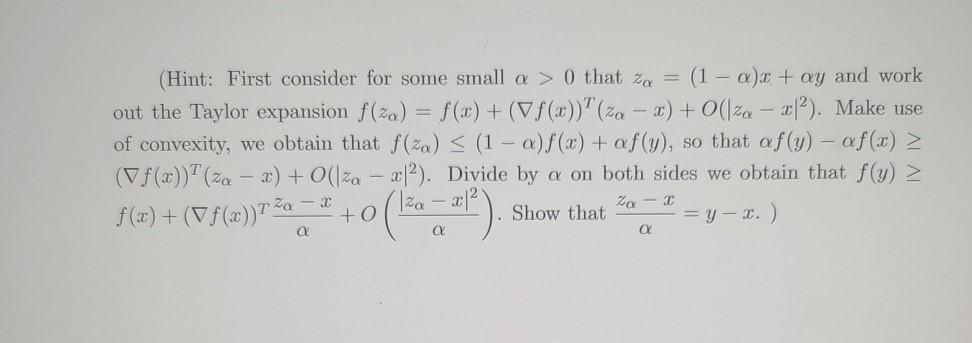

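1. Consider the following one hidden layer neural network with $2k$ hidden units. The network parameters are $W \in \mathbb{R}^{2k \times d}$ and $v \in \mathbb{R}^{2k}$, which we denote jointly by $\mathcal{W} = (W, v)$. The network output is given by the function $g_{\mathcal{W}} : \mathbb{R}^d \to \mathbb{R}$ defined as $g_{\mathcal{W}}(x) = v^\top \sigma(Wx)$, $x \in \mathbb{R}^d$, where $\sigma$ is the ReLU activation function applied element-wise, so that $\sigma(z) = \max(z, 0)$. Consider a set of binary classification training data $S = \{(x_1, y_1), \dots, (x_n, y_n)\}$ where $x_i \in \mathbb{R}^d$ and $y_i = \pm 1$. We define the empirical loss over $S$ to be the mean hinge loss

   $$L_S(\mathcal{W}) = \frac{1}{n} \sum_{i=1}^{n} \max\bigl(1 - y_i\, g_{\mathcal{W}}(x_i),\ 0\bigr).$$

   Let $n = 1$, $k = 1$ and $v = (1, -1)^\top$. Show that the network output is given by the function $g_{(w,u)}(x) = \sigma(\langle w, x \rangle) - \sigma(\langle u, x \rangle)$ for $w, u \in \mathbb{R}^d$. Suppose $y_1 = -1$; then show that the loss function takes the form

   $$L_S(w, u) = \max\bigl(1 + (\sigma(\langle w, x \rangle) - \sigma(\langle u, x \rangle)),\ 0\bigr).$$

2. Continuing the example in problem 1, set $w_1 = w_2 = u_1 = x$ and $u_2 = -x$. Set [...]

3. [...] $f(y) \ge f(x) + (\nabla f(x))^\top (y - x)$.

   (Hint: First consider, for some small $\alpha > 0$, the point $z_\alpha = (1 - \alpha)x + \alpha y$ and work out the Taylor expansion

   $$f(z_\alpha) = f(x) + (\nabla f(x))^\top (z_\alpha - x) + O(\|z_\alpha - x\|^2).$$

   Making use of convexity, we obtain that

   $$(1 - \alpha) f(x) + \alpha f(y) \ge f(z_\alpha) = f(x) + (\nabla f(x))^\top (z_\alpha - x) + O(\|z_\alpha - x\|^2).$$

   Dividing by $\alpha$ on both sides, we obtain that

   $$f(y) \ge f(x) + (\nabla f(x))^\top \frac{z_\alpha - x}{\alpha} + \frac{O(\|z_\alpha - x\|^2)}{\alpha}.$$

   Show that $\frac{z_\alpha - x}{\alpha} = y - x$.)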
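The hint for problem 3 can be sanity-checked numerically before writing the proof. The sketch below is only an illustration under stated assumptions: the convex function used (log-sum-exp) is not from the problem, and the helper names `first_order_gap` and `scaled_step` are hypothetical. It checks that $f(y) - f(x) - (\nabla f(x))^\top (y - x)$ is nonnegative on random pairs, and that $(z_\alpha - x)/\alpha$ matches $y - x$ for $z_\alpha = (1 - \alpha)x + \alpha y$.

```python
import math
import random


def f(x):
    """Log-sum-exp: a smooth convex function (illustrative choice, not from the problem)."""
    m = max(x)
    return m + math.log(sum(math.exp(xi - m) for xi in x))


def grad_f(x):
    """Gradient of log-sum-exp, which is the softmax of x."""
    m = max(x)
    e = [math.exp(xi - m) for xi in x]
    s = sum(e)
    return [ei / s for ei in e]


def first_order_gap(x, y):
    """f(y) - [f(x) + grad f(x)^T (y - x)]; should be >= 0 for convex f."""
    diff = [yi - xi for xi, yi in zip(x, y)]
    linear = sum(gi * di for gi, di in zip(grad_f(x), diff))
    return f(y) - f(x) - linear


def scaled_step(x, y, alpha):
    """(z_alpha - x) / alpha, where z_alpha = (1 - alpha) * x + alpha * y."""
    z = [(1 - alpha) * xi + alpha * yi for xi, yi in zip(x, y)]
    return [(zi - xi) / alpha for zi, xi in zip(z, x)]


rng = random.Random(0)  # fixed seed so the run is repeatable
points = [[rng.uniform(-2, 2) for _ in range(4)] for _ in range(200)]

# Gap of the first-order condition on 100 random (x, y) pairs.
gaps = [first_order_gap(points[i], points[i + 1]) for i in range(0, 200, 2)]
print("smallest gap:", min(gaps))

# The last step of the hint: (z_alpha - x) / alpha equals y - x.
x, y = points[0], points[1]
print("(z_alpha - x) / alpha:", scaled_step(x, y, 1e-3))
print("y - x:               ", [yi - xi for xi, yi in zip(x, y)])
```

A numerical check like this is not a proof; it only confirms that the inequality and the identity in the hint behave as expected for one convex function, up to floating-point rounding.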

