Question: Backpropagation We want to train a simple deep neural network f w ( x ) with w = ( w 1 , w 2 ,

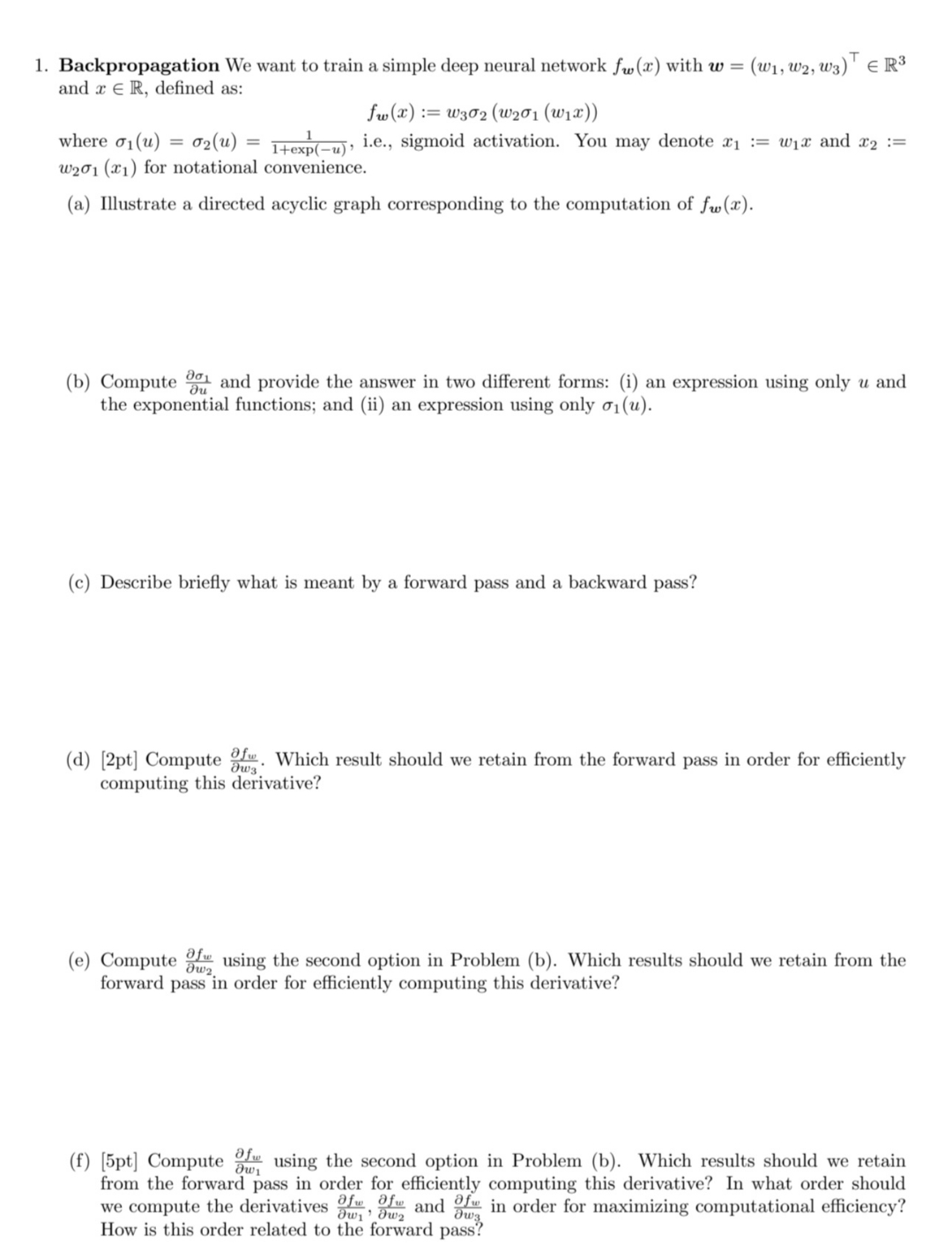

Backpropagation We want to train a simple deep neural network with and xinR, defined as:

$$f_w(x) := \ldots$$

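where $\sigma$ is the sigmoid activation, $\sigma(x) = \frac{1}{1 + e^{-x}}$. For notational convenience, you may introduce shorthand names (defined with $:=$) for two intermediate quantities.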

(a) Illustrate a directed acyclic graph (DAG) corresponding to the computation of $f_w(x)$.
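As an illustration only, assume a hypothetical two-weight network $f(x) = \sigma(w_2\,\sigma(w_1 x))$; the question's own network may have a different shape. The sketch encodes this computation as a DAG, mapping each node to the set of nodes it takes as input, and uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+) to list the nodes so that every node follows its inputs. The node names `a1`, `h1`, `a2` are hypothetical labels.

```python
from graphlib import TopologicalSorter

# Hypothetical network (illustration only): f(x) = sigmoid(w2 * sigmoid(w1 * x)).
# Each node maps to the set of nodes it takes as input.
DAG = {
    "a1": {"w1", "x"},   # a1 = w1 * x
    "h1": {"a1"},        # h1 = sigmoid(a1)
    "a2": {"w2", "h1"},  # a2 = w2 * h1
    "f": {"a2"},         # f = sigmoid(a2), the network output
}


def forward_order(dag: dict[str, set[str]]) -> list[str]:
    """Return the nodes so that every node appears after all of its inputs."""
    return list(TopologicalSorter(dag).static_order())


if __name__ == "__main__":
    order = forward_order(DAG)
    print("forward order: ", order)
    print("backward order:", order[::-1])
```

Any topological order is a valid evaluation order for a forward pass; reversing it gives a valid order for visiting the nodes in a backward pass.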

(b) Compute the derivative of the sigmoid, $\frac{d\sigma(x)}{dx}$, and provide the answer in two different forms: (i) an expression using only $x$ and the exponential function; and (ii) an expression using only $\sigma(x)$.
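Both forms follow from differentiating $\sigma(x) = 1/(1+e^{-x})$ and noting that $1 - \sigma(x) = e^{-x}/(1+e^{-x})$:

$$\frac{d\sigma(x)}{dx} = \frac{e^{-x}}{(1+e^{-x})^2} = \frac{1}{1+e^{-x}} \cdot \frac{e^{-x}}{1+e^{-x}} = \sigma(x)\,\bigl(1 - \sigma(x)\bigr).$$

A minimal Python sketch that checks the two forms against each other and against a central finite difference; the helper names are illustrative, not from the question.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def dsigmoid_exp(x: float) -> float:
    """Form (i): e^(-x) / (1 + e^(-x))^2, using only x and exp."""
    e = math.exp(-x)
    return e / (1.0 + e) ** 2


def dsigmoid_from_sigma(s: float) -> float:
    """Form (ii): s * (1 - s), where s = sigmoid(x) is already known."""
    return s * (1.0 - s)


def central_difference(fn, x: float, h: float = 1e-6) -> float:
    """Numerical derivative, used only as an independent check."""
    return (fn(x + h) - fn(x - h)) / (2.0 * h)


if __name__ == "__main__":
    for x in (-2.0, 0.0, 3.0):
        s = sigmoid(x)
        print(x, dsigmoid_exp(x), dsigmoid_from_sigma(s), central_difference(sigmoid, x))
```

Form (ii) is the one that pays off in backpropagation: once $\sigma(x)$ has been computed in the forward pass, the derivative costs one subtraction and one multiplication, with no further call to the exponential.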

(c) Briefly describe what is meant by a forward pass and a backward pass.
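A minimal sketch of both passes, assuming (hypothetically) that $f_w(x)$ is a chain of scalar sigmoid layers with $h_0 = x$, $a_k = w_k h_{k-1}$, $h_k = \sigma(a_k)$ and $f_w(x) = h_n$; the question's actual network may differ. The forward pass evaluates the layers from input to output and caches every activation $h_k$. The backward pass walks from the output back to the input, applying the chain rule and reusing the cached $h_k$ through $\sigma'(a_k) = h_k(1 - h_k)$. The function names are illustrative.

```python
import math


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


def forward(w: list[float], x: float) -> list[float]:
    """Forward pass: evaluate layers in input-to-output order.

    Returns the cached activations h[0..n], where h[0] = x and
    h[k] = sigmoid(w[k-1] * h[k-1]); the output f_w(x) is h[-1].
    """
    h = [x]
    for wk in w:
        h.append(sigmoid(wk * h[-1]))
    return h


def backward(w: list[float], h: list[float]) -> list[float]:
    """Backward pass: propagate df/d(.) from the output back to the input.

    Uses only the cached activations, since sigmoid'(a_k) = h[k] * (1 - h[k]).
    Returns [df/dw_1, ..., df/dw_n] (w_k is stored as w[k-1]).
    """
    n = len(w)
    grads = [0.0] * n
    upstream = 1.0  # df/dh_n, since f = h_n
    for k in range(n, 0, -1):  # k = n, n-1, ..., 1
        delta = upstream * h[k] * (1.0 - h[k])  # df/da_k
        grads[k - 1] = delta * h[k - 1]         # df/dw_k = df/da_k * h_{k-1}
        upstream = delta * w[k - 1]             # df/dh_{k-1}
    return grads


if __name__ == "__main__":
    w, x = [0.5, -1.2, 2.0], 0.7
    h = forward(w, x)
    print("f_w(x) =", h[-1])
    print("gradients:", backward(w, h))
```

The backward pass never recomputes an exponential; everything it needs was stored during the forward pass.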

(d) Compute the derivative $\ldots$. Which result from the forward pass should we retain in order to compute this derivative efficiently?

(e) Compute the derivative $\ldots$ using the second form from part (b). Which results from the forward pass should we retain in order to compute this derivative efficiently?

(f) Compute the derivative $\ldots$ using the second form from part (b). Which results from the forward pass should we retain in order to compute this derivative efficiently? In what order should we compute the derivatives $\ldots$ and $\ldots$ to maximize computational efficiency? How is this order related to the forward pass?
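For the ordering question, a sketch assuming a hypothetical chain $h_0 = x$, $h_k = \sigma(w_k h_{k-1})$, $f_w(x) = h_n$ (not necessarily the question's network) contrasts two ways of obtaining every $\partial f / \partial w_k$. The naive version restarts from the output for each weight, so the number of layer-to-layer chain-rule steps grows as $n(n-1)/2$. The reverse version visits the weights from the output toward the input and carries the running product $\partial f / \partial h_{k-1}$ along, needing only $n - 1$ such steps. The `steps` counter is an illustrative cost measure, not a standard metric.

```python
import math


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


def forward(w: list[float], x: float) -> list[float]:
    """Cache h[0..n] with h[0] = x and h[k] = sigmoid(w[k-1] * h[k-1])."""
    h = [x]
    for wk in w:
        h.append(sigmoid(wk * h[-1]))
    return h


def grads_naive(w: list[float], h: list[float]) -> tuple[list[float], int]:
    """Compute each df/dw_k independently, restarting from the output every time."""
    n = len(w)
    grads, steps = [], 0
    for k in range(1, n + 1):
        upstream = 1.0  # df/dh_n
        for j in range(n, k, -1):  # push back through layers n, ..., k+1
            upstream *= h[j] * (1.0 - h[j]) * w[j - 1]  # now df/dh_{j-1}
            steps += 1
        grads.append(upstream * h[k] * (1.0 - h[k]) * h[k - 1])
    return grads, steps


def grads_reverse(w: list[float], h: list[float]) -> tuple[list[float], int]:
    """Visit weights from output to input, reusing the running product."""
    n = len(w)
    grads, steps = [0.0] * n, 0
    upstream = 1.0  # df/dh_n
    for k in range(n, 0, -1):
        delta = upstream * h[k] * (1.0 - h[k])  # df/da_k
        grads[k - 1] = delta * h[k - 1]         # df/dw_k
        if k > 1:
            upstream = delta * w[k - 1]         # df/dh_{k-1}, reused next iteration
            steps += 1
    return grads, steps


if __name__ == "__main__":
    w = [0.3, -0.8, 1.5, 0.9, -2.0]
    h = forward(w, 0.4)
    print("naive steps:  ", grads_naive(w, h)[1])
    print("reverse steps:", grads_reverse(w, h)[1])
```

In this sketch the efficient order runs from the output layer back to the input layer, the reverse of the order in which the forward pass evaluated the layers; both versions return the same gradients.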
