Question:

```python
import sklearn.base
import sklearn.metrics


class MyKNN(sklearn.base.BaseEstimator):
    def __init__(self, k):
        self.k = k
        self.ignore_ = True  # Students can ignore this.

    def fit(self, train_features, train_labels):
        """
        Train the KNN classifier.

        Args:
            train_features: A pandas.DataFrame that only contains numeric data.
            train_labels: A pandas.Series containing the labels.
        """

        return self

    def predict(self, test_features):
        """
        Make predictions on the passed-in data points.

        Args:
            test_features: A pandas.DataFrame that only contains numeric data.

        Returns:
            A numpy.ndarray with the predictions.
        """

        return NotImplemented

    def score(self, test_features, test_labels):
        predictions = self.predict(test_features)
        # accuracy_score expects (y_true, y_pred).
        return sklearn.metrics.accuracy_score(test_labels, predictions)
```

```python
classifier = MyKNN(3)
accuracy = fit_and_visualize_decision_boundary(classifier, toy_features, toy_labels, "MyKNN")
```

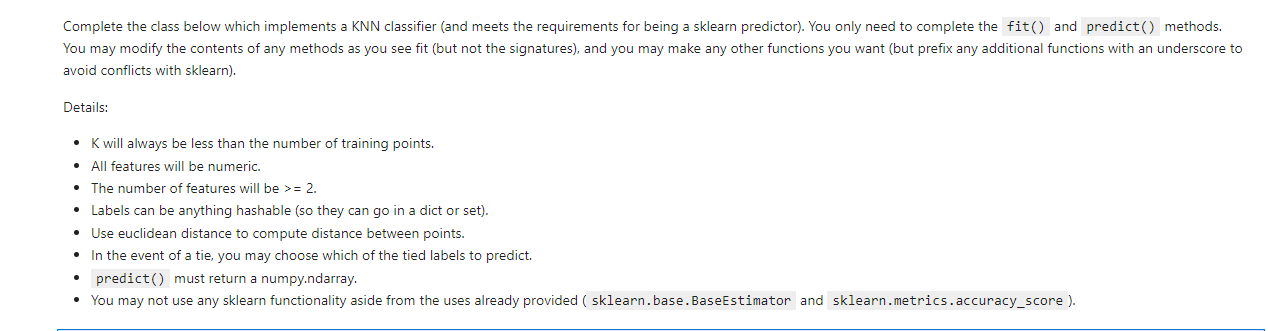

Complete the `MyKNN` class, which implements a KNN classifier (and meets the requirements for being a sklearn predictor). You only need to complete the `fit()` and `predict()` methods. You may modify the contents of any method as you see fit (but not the signatures), and you may write any other functions you want (but prefix any additional functions with an underscore to avoid conflicts with sklearn).

Details:

- K will always be less than the number of training points.
- All features will be numeric.
- The number of features will be >= 2.
- Labels can be anything hashable (so they can go in a dict or set).
- Use Euclidean distance to compute the distance between points.
- In the event of a tie, you may choose which of the tied labels to predict.
- `predict()` must return a `numpy.ndarray`.
- You may not use any sklearn functionality aside from the uses already provided (and
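A minimal sketch of one way to complete `fit()` and `predict()`, assuming a brute-force approach: `fit()` just stores the training data, and `predict()` computes every pairwise Euclidean distance with NumPy broadcasting, then takes a majority vote among the `k` nearest labels with `collections.Counter`. The attribute names `train_features_` and `train_labels_` are illustrative choices, not part of the assignment, and the tie-breaking rule (prefer the tied label whose member is closest) is just one of the choices the spec allows. Besides NumPy, the standard library, and pandas (used only in the demo), it relies only on `sklearn.base.BaseEstimator` and `sklearn.metrics.accuracy_score`, which the starter code already uses.

```python
from collections import Counter

import numpy as np
import pandas as pd
import sklearn.base
import sklearn.metrics


class MyKNN(sklearn.base.BaseEstimator):
    def __init__(self, k):
        self.k = k
        self.ignore_ = True  # Students can ignore this.

    def fit(self, train_features, train_labels):
        """Store the training data; brute-force KNN does no other work at fit time."""
        self.train_features_ = np.asarray(train_features, dtype=float)
        # A pandas.Series converts to a 1-D array (object dtype for tuples, strings, ...).
        self.train_labels_ = np.asarray(train_labels)
        return self

    def predict(self, test_features):
        """Predict by majority vote among the k nearest training points."""
        test = np.asarray(test_features, dtype=float)
        # Pairwise Euclidean distances, shape (n_test, n_train).
        dists = np.linalg.norm(
            test[:, np.newaxis, :] - self.train_features_[np.newaxis, :, :], axis=2
        )
        # Indices of the k nearest training points per test point, closest first.
        nearest = np.argsort(dists, axis=1, kind="stable")[:, : self.k]

        winners = np.empty(len(test), dtype=int)
        for row, neighbor_idx in enumerate(nearest):
            votes = Counter()
            first_seen = {}  # label -> index of its closest neighbor
            for j in neighbor_idx:
                label = self.train_labels_[j]
                votes[label] += 1
                first_seen.setdefault(label, j)
            # most_common orders equal counts by first occurrence, so a tie
            # goes to the tied label that has the closest neighbor.
            best_label, _ = votes.most_common(1)[0]
            winners[row] = first_seen[best_label]
        # Indexing the stored labels keeps the result a 1-D numpy.ndarray
        # for any hashable label type (ints, strings, tuples).
        return self.train_labels_[winners]

    def score(self, test_features, test_labels):
        predictions = self.predict(test_features)
        return sklearn.metrics.accuracy_score(test_labels, predictions)


if __name__ == "__main__":
    features = pd.DataFrame({"x": [0.0, 0.1, 1.0, 1.1], "y": [0.0, 0.1, 1.0, 1.1]})
    labels = pd.Series(["a", "a", "b", "b"])
    clf = MyKNN(3).fit(features, labels)
    print(clf.predict(pd.DataFrame({"x": [0.05, 1.05], "y": [0.05, 1.05]})))
```

The broadcast difference array uses O(n_test × n_train × d) memory, which is fine for toy data but would call for chunking or a spatial index on large datasets. Returning `train_labels_[winners]` instead of building a new array from the winning labels keeps the output one-dimensional even when labels are tuples.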
