Question: Consider the 4-layer neural network shown below: the first layer is the input layer, and no layer uses a bias in its forward pass.

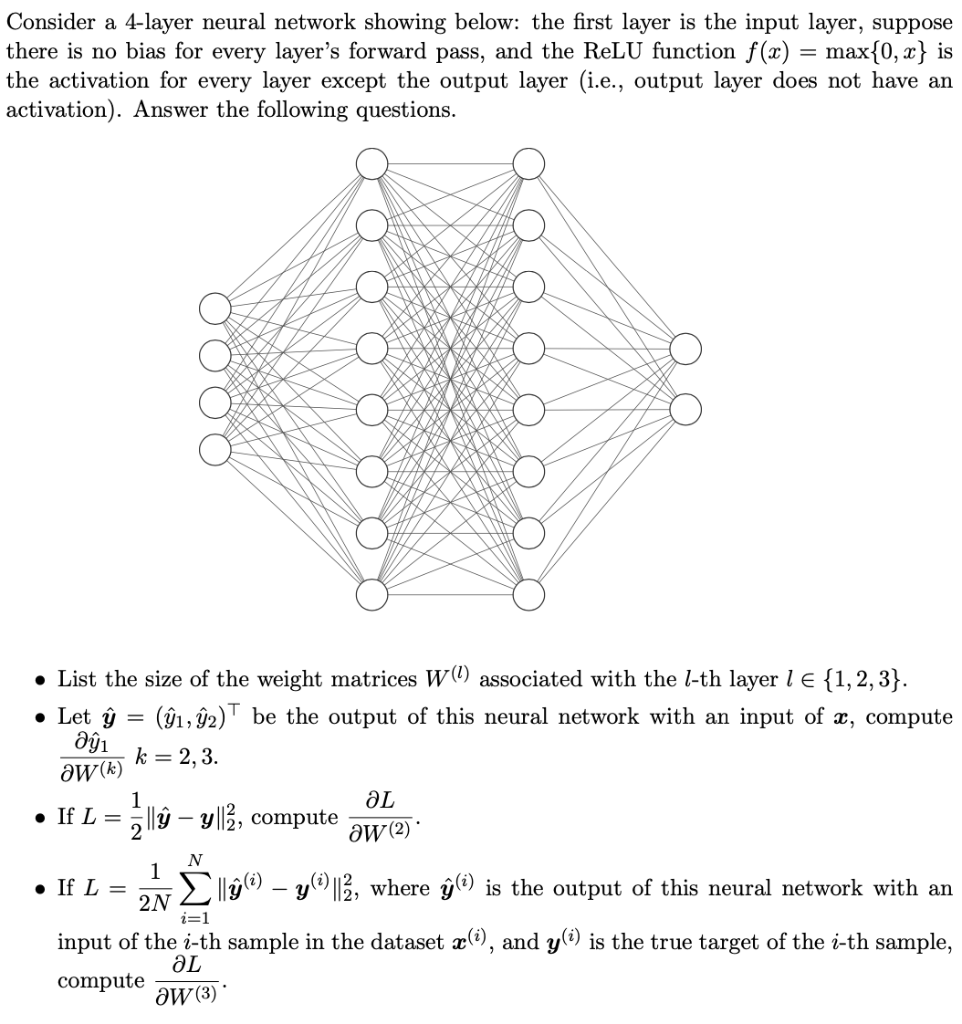

Consider the 4-layer neural network shown in the figure. The first layer is the input layer, no layer uses a bias in its forward pass, and the ReLU function $f(x) = \max\{0, x\}$ is the activation for every layer except the output layer (i.e., the output layer has no activation). Answer the following questions.

1. List the size of the weight matrix $W^{(l)}$ associated with the $l$-th layer, $l \in \{1, 2, 3\}$.
2. Let $\hat{y} = (\hat{y}_1, \hat{y}_2)^T$ be the output of this neural network for an input $x$. Compute $\frac{\partial \hat{y}}{\partial W^{(k)}}$ for $k = 2, 3$.
3. If $L = \lVert \hat{y} - y \rVert$, compute $\frac{\partial L}{\partial W^{(2)}}$.
4. If $L = \frac{1}{2N} \sum_{i=1}^{N} \lVert \hat{y}^{(i)} - y^{(i)} \rVert$, where $\hat{y}^{(i)}$ is the output of this neural network for the $i$-th sample $x^{(i)}$ in the dataset and $y^{(i)}$ is the true target of the $i$-th sample, compute $\frac{\partial L}{\partial W^{(3)}}$.
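The kind of computation the question asks for can be checked numerically. The following is a minimal sketch, not the worked answer: the layer widths in `SIZES`, the weights, the input `x`, and the target `y` are all made-up values, because the figure that fixes the real architecture is not reproduced here (only the output width of 2 comes from the question). It runs a bias-free forward pass with ReLU on the hidden layers, backpropagates the single-sample loss given by the norm of the output minus the target, and compares the result with a finite-difference estimate. In the code, `Ws[k - 1]` plays the role of $W^{(k)}$.

```python
import math

# Hypothetical layer widths (input, hidden 1, hidden 2, output). Only the
# output width of 2 is taken from the question; the rest are assumptions.
SIZES = [3, 4, 3, 2]

def relu(v):
    return [max(0.0, a) for a in v]

def matvec(W, v):
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def forward(Ws, x):
    """Return pre-activations zs and layer inputs/outputs hs (hs[-1] = y_hat)."""
    hs, zs = [x], []
    for l, W in enumerate(Ws):
        z = matvec(W, hs[-1])  # no bias term
        zs.append(z)
        # ReLU on hidden layers only; the output layer is linear.
        hs.append(z if l == len(Ws) - 1 else relu(z))
    return zs, hs

def loss(Ws, x, y):
    _, hs = forward(Ws, x)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(hs[-1], y)))

def grads(Ws, x, y):
    """Backpropagate L = ||y_hat - y|| and return dL/dW for every layer."""
    zs, hs = forward(Ws, x)
    diff = [a - b for a, b in zip(hs[-1], y)]
    norm = math.sqrt(sum(d * d for d in diff))
    delta = [d / norm for d in diff]  # dL/dy_hat; assumes y_hat != y
    out = [None] * len(Ws)
    for l in reversed(range(len(Ws))):
        # dL/dW is the outer product of delta with the input of layer l.
        out[l] = [[d * a for a in hs[l]] for d in delta]
        if l > 0:
            # Multiply by the transposed weights, then mask by the ReLU derivative.
            back = [sum(Ws[l][i][j] * delta[i] for i in range(len(delta)))
                    for j in range(len(hs[l]))]
            delta = [b if z > 0 else 0.0 for b, z in zip(back, zs[l - 1])]
    return out

def numeric_grad(Ws, x, y, l, i, j, eps=1e-6):
    """Central-difference estimate of one gradient entry, as a sanity check."""
    old = Ws[l][i][j]
    Ws[l][i][j] = old + eps
    up = loss(Ws, x, y)
    Ws[l][i][j] = old - eps
    down = loss(Ws, x, y)
    Ws[l][i][j] = old
    return (up - down) / (2 * eps)

# Made-up deterministic weights, input, and target for illustration only.
Ws = [[[0.1 * ((i + 2 * j + l) % 5 - 2) for j in range(SIZES[l])]
       for i in range(SIZES[l + 1])] for l in range(3)]
x, y = [1.0, -0.5, 2.0], [0.3, -0.2]
g = grads(Ws, x, y)
```

Each entry of `g[l]` has the same shape as `Ws[l]`, so `g[1]` corresponds to $\partial L / \partial W^{(2)}$ and `g[2]` to $\partial L / \partial W^{(3)}$ under these assumed sizes. For the dataset loss with the $\frac{1}{2N}$ factor, the gradient is by linearity the sum of the per-sample gradients scaled by $\frac{1}{2N}$.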
