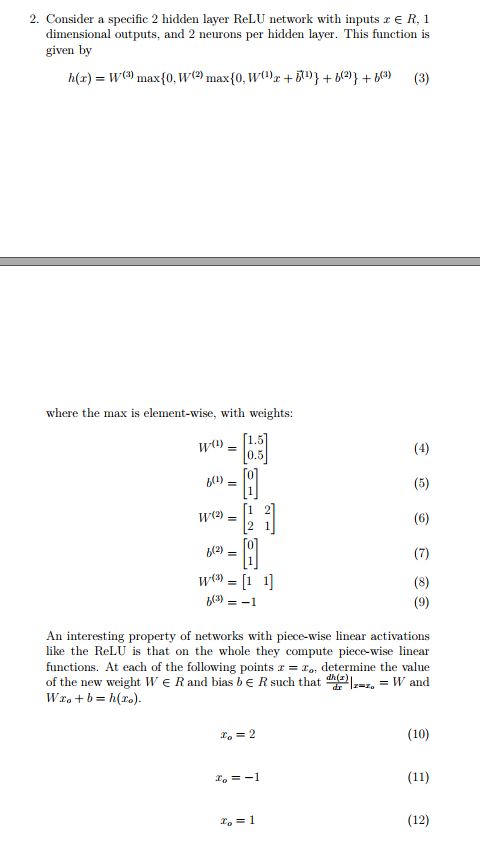

Question: Consider a specific 2 hidden layer ReLU network with inputs $x \in \mathbb{R}$, 1-dimensional outputs, and 2 neurons per hidden layer. This function is given by

$$h(x) = W^{(3)} \max\{\vec{0},\, W^{(2)} \max\{\vec{0},\, W^{(1)} x + b^{(1)}\} + b^{(2)}\} + b^{(3)}$$

where the max is elementwise, with weights:

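An interesting property of networks with piecewise linear activations like the ReLU is that on the whole they compute piecewise linear functions. At each of the following points $x = x_o$, determine the value of the new weight $W \in \mathbb{R}$ and bias $b \in \mathbb{R}$ such that the network function $h$ satisfies $\frac{dh(x)}{dx}\big|_{x = x_o} = W$ and $W x_o + b = h(x_o)$.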
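One way to check such an answer numerically is sketched below. It assumes the network has the usual form of two ReLU layers followed by a linear output layer, and it records which neurons are active at the query point to read off the local slope and intercept. The function name `local_linear` and the weight values in the demo are hypothetical placeholders chosen so that the network computes $|x|$; they are not the weights of this exercise, which are not reproduced here.

```python
import numpy as np

def local_linear(x0, W1, b1, W2, b2, W3, b3):
    """Return (h(x0), W, b) so that h(x) = W * x + b on the linear piece containing x0.

    Assumed shapes: W1, b1, b2, W3 are length-2 vectors, W2 is 2x2, b3 is a scalar.
    """
    z1 = W1 * x0 + b1                 # pre-activations of hidden layer 1
    m1 = (z1 > 0).astype(float)       # 1 where the ReLU is active, 0 otherwise
    a1 = m1 * z1                      # ReLU output of layer 1

    z2 = W2 @ a1 + b2                 # pre-activations of hidden layer 2
    m2 = (z2 > 0).astype(float)
    a2 = m2 * z2                      # ReLU output of layer 2

    h = float(W3 @ a2 + b3)           # network output at x0

    # Chain rule with the ReLU derivatives replaced by the 0/1 activity masks.
    W = float(W3 @ (m2 * (W2 @ (m1 * W1))))
    b = h - W * x0                    # intercept so the line passes through (x0, h)
    return h, W, b

# Hypothetical placeholder weights (NOT the exercise's): this network computes |x|.
W1 = np.array([1.0, -1.0]); b1 = np.zeros(2)
W2 = np.eye(2);             b2 = np.zeros(2)
W3 = np.array([1.0, 1.0]);  b3 = 0.0

for x0 in (2.0, -3.0):
    print(x0, local_linear(x0, W1, b1, W2, b2, W3, b3))
```

Substituting the exercise's own weights and query points gives the corresponding $(W, b)$ pairs. At a point where some pre-activation is exactly zero the derivative is not defined, and this sketch simply treats that neuron as inactive.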
