Question: Please help me with tasks 1 and 2! Problem 2: Backpropagation

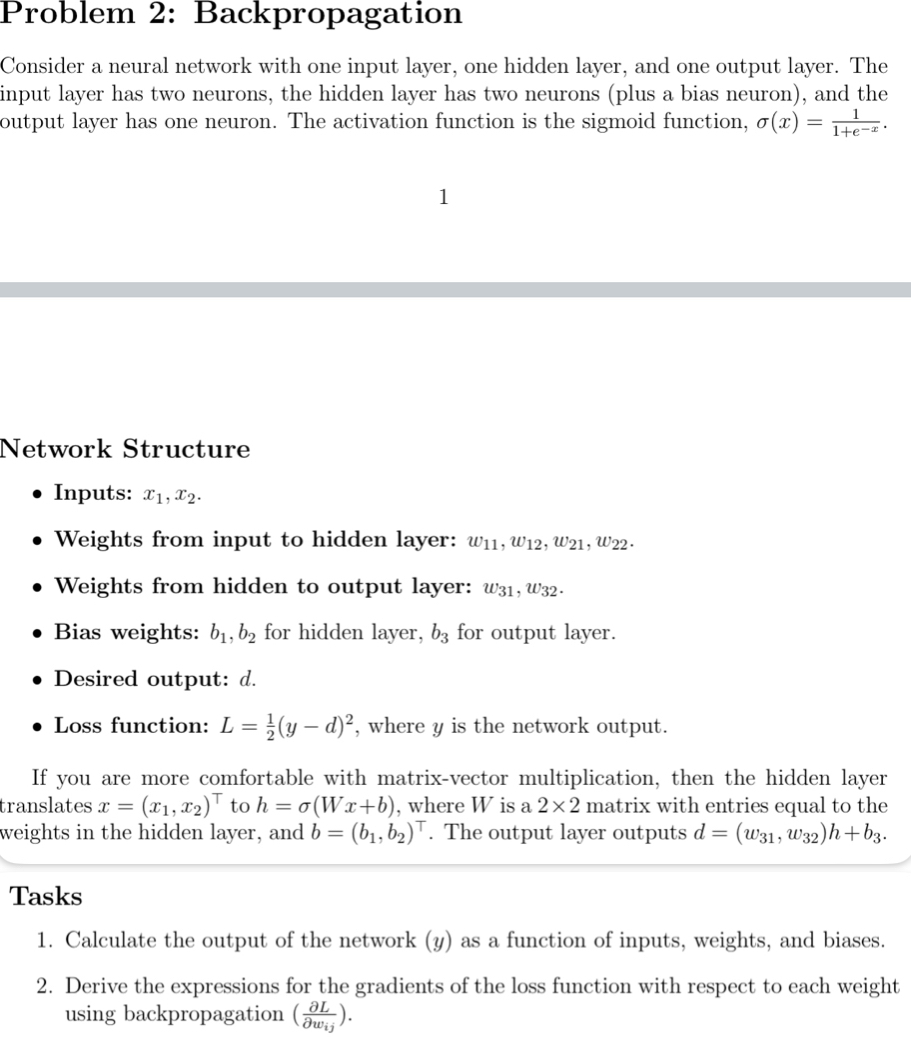

Problem 2: Backpropagation

Consider a neural network with one input layer, one hidden layer, and one output layer. The input layer has two neurons, the hidden layer has two neurons plus a bias neuron, and the output layer has one neuron. The activation function is the sigmoid function, $\sigma(z) = \frac{1}{1 + e^{-z}}$.

Network Structure

Inputs:

Weights from input to hidden layer:

Weights from hidden to output layer:

Bias weights: for hidden layer, for output layer.

Desired output:

Loss function: where is the network output.

If you are more comfortable with matrix-vector multiplication, then the hidden layer translates to where is a matrix with entries equal to the weights in the hidden layer, and The output layer outputs
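One common way to write the matrix-vector form is sketched below. The symbol names are assumed notation, not necessarily those of the original problem: $W$ is the $2 \times 2$ hidden-layer weight matrix, $\mathbf{b}$ the hidden-layer bias, $\mathbf{v}$ the hidden-to-output weights, $c$ the output bias, and $\hat{y}$ the network output. Applying the sigmoid at the output neuron is also an assumption.

```latex
\mathbf{h} = \sigma\!\left(W\mathbf{x} + \mathbf{b}\right), \qquad
\hat{y} = \sigma\!\left(\mathbf{v}^{\top}\mathbf{h} + c\right), \qquad
\sigma(z) = \frac{1}{1 + e^{-z}} \ \text{(applied elementwise)}
```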

Tasks

1. Calculate the output of the network as a function of inputs, weights, and biases.

2. Derive the expressions for the gradients of the loss function with respect to each weight using backpropagation.
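Both tasks can be checked numerically with a small sketch like the one below. It is not the expert solution, and it rests on several assumptions: the names `W`, `b`, `v`, `c` are hypothetical, the loss is taken to be the half squared error $\frac{1}{2}(y - \hat{y})^2$, the sigmoid is applied at the output neuron as well, each hidden neuron has its own bias, and all numeric values are placeholders rather than the values from the problem.

```python
import math

def sigmoid(z):
    """Logistic sigmoid 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W, b, v, c):
    """Task 1: hidden activations h and network output y_hat."""
    h = [sigmoid(W[j][0] * x[0] + W[j][1] * x[1] + b[j]) for j in range(2)]
    y_hat = sigmoid(v[0] * h[0] + v[1] * h[1] + c)
    return h, y_hat

def loss(y, y_hat):
    """ASSUMED loss: half squared error (not confirmed by the problem text)."""
    return 0.5 * (y - y_hat) ** 2

def gradients(x, y, W, b, v, c):
    """Task 2: backpropagation for the assumed loss."""
    h, y_hat = forward(x, W, b, v, c)
    # Output error signal: dL/dz_out = (y_hat - y) * sigma'(z_out),
    # using sigma'(z) = sigma(z) * (1 - sigma(z)).
    delta_out = (y_hat - y) * y_hat * (1.0 - y_hat)
    dv = [delta_out * h[j] for j in range(2)]   # dL/dv_j
    dc = delta_out                              # dL/dc
    # Hidden error signals: dL/dz_j = delta_out * v_j * h_j * (1 - h_j).
    delta_h = [delta_out * v[j] * h[j] * (1.0 - h[j]) for j in range(2)]
    dW = [[delta_h[j] * x[i] for i in range(2)] for j in range(2)]  # dL/dW_ji
    db = delta_h[:]                             # dL/db_j
    return dW, db, dv, dc

# Placeholder values for illustration only (NOT the values from the problem).
x, y = [1.0, 0.5], 1.0
W = [[0.1, -0.2], [0.4, 0.3]]
b = [0.05, -0.05]
v = [0.7, -0.6]
c = 0.2

dW, db, dv, dc = gradients(x, y, W, b, v, c)

def numeric_dW(j, i, eps=1e-6):
    """Central finite difference of the loss with respect to W[j][i]."""
    Wp = [row[:] for row in W]
    Wm = [row[:] for row in W]
    Wp[j][i] += eps
    Wm[j][i] -= eps
    loss_plus = loss(y, forward(x, Wp, b, v, c)[1])
    loss_minus = loss(y, forward(x, Wm, b, v, c)[1])
    return (loss_plus - loss_minus) / (2 * eps)

print("backprop dL/dW[0][1]:", dW[0][1])
print("numeric  dL/dW[0][1]:", numeric_dW(0, 1))
```

The two printed numbers should agree to several decimal places. If the actual loss or output activation differs from what is assumed here, only `loss` and the `delta_out` line need to change; the chain-rule structure for the hidden layer stays the same.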
