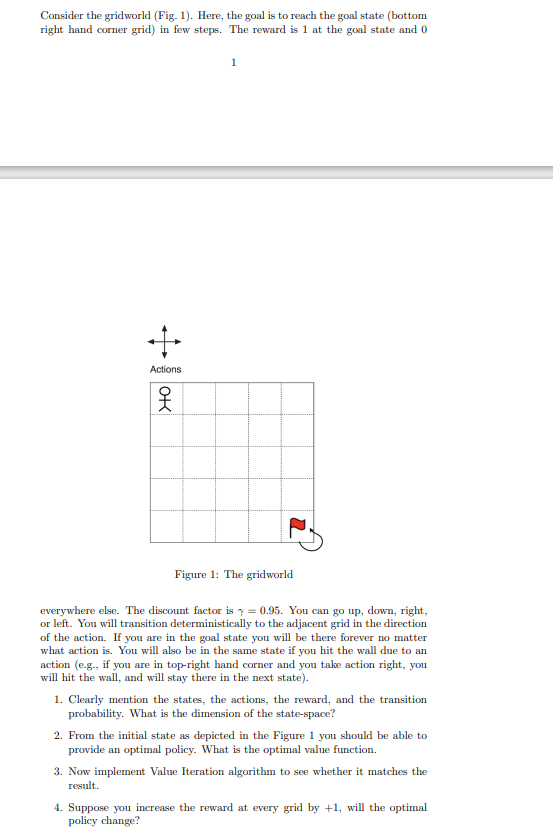

Figure 1: The gridworld

Question: Consider the gridworld (Fig. 1). Here, the goal is to reach the goal state (the bottom right-hand corner grid) in a few steps. The reward is … at the goal state and … everywhere else. The discount factor is …. You can go up, down, right, or left. You will transition deterministically to the adjacent grid in the direction of the action. If you are in the goal state, you will stay there forever, no matter what action is taken. You will also stay in the same state if an action makes you hit the wall; e.g., if you are in the top right-hand corner and take action right, you will hit the wall and stay there in the next state.

Clearly state the states, the actions, the reward, and the transition probabilities. What is the dimension of the state space?
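The MDP described in the question can be sketched in Python as follows. This is a minimal sketch under stated assumptions: Fig. 1 is not recoverable here, so the 4×4 grid size is hypothetical, as are the names `STATES`, `ACTIONS`, `step` and `transition_tensor`. States are grid cells `(row, col)` with row 0 at the top, so the state space has `N_ROWS * N_COLS` elements; the four actions are up, down, left and right; and because moves are deterministic, P(s' | s, a) is 1 for exactly one s' and 0 otherwise. The reward is left out because its values are missing from the question text.

```python
import numpy as np

# Hypothetical grid size: the figure is not available, so 4x4 is a placeholder.
N_ROWS, N_COLS = 4, 4
GOAL = (N_ROWS - 1, N_COLS - 1)  # bottom right-hand corner (from the question)

# Actions as (row, col) offsets; row 0 is the top row.
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

# Every grid cell is a state, so |S| = N_ROWS * N_COLS.
STATES = [(r, c) for r in range(N_ROWS) for c in range(N_COLS)]
INDEX = {s: i for i, s in enumerate(STATES)}


def step(state, action):
    """Deterministic transition: move one cell, stay put at a wall, goal is absorbing."""
    if state == GOAL:
        return state
    dr, dc = ACTIONS[action]
    r, c = state[0] + dr, state[1] + dc
    if 0 <= r < N_ROWS and 0 <= c < N_COLS:
        return (r, c)
    return state  # the move would leave the grid, so we hit the wall and stay


def transition_tensor():
    """P[a, s, s'] = 1.0 if action a taken in s leads to s', else 0.0."""
    P = np.zeros((len(ACTIONS), len(STATES), len(STATES)))
    for a_idx, a in enumerate(ACTIONS):
        for s in STATES:
            P[a_idx, INDEX[s], INDEX[step(s, a)]] = 1.0
    return P


P = transition_tensor()
print(len(STATES))                     # 16 under the assumed 4x4 grid
print(step((0, N_COLS - 1), "right"))  # top-right corner + right: stays at (0, 3)
```

Substituting the real grid size from Fig. 1 only requires changing `N_ROWS` and `N_COLS`; every row of `P` stays one-hot, which is what "deterministic transition" means in probability terms.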

From the initial state as depicted in the figure, you should be able to provide an optimal policy. What is the optimal value function?
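One way to write the optimal value function in closed form, under assumptions not fixed by the extracted text: the reward R(s) is collected at every time step spent in state s (R_goal at the goal, R_other elsewhere, with R_goal > R_other), the only walls are the grid boundary, and d(s) is the Manhattan distance from s = (r, c) to the goal (r_goal, c_goal). Under these assumptions an optimal policy moves right or down along any shortest path to the goal, and:

```latex
\begin{aligned}
V^*(s) &= \sum_{t=0}^{d(s)-1} \gamma^t R_{\text{other}} + \sum_{t=d(s)}^{\infty} \gamma^t R_{\text{goal}} \\
       &= \frac{R_{\text{other}}\left(1-\gamma^{d(s)}\right) + \gamma^{d(s)} R_{\text{goal}}}{1-\gamma},
\qquad d(s) = |r_{\text{goal}} - r| + |c_{\text{goal}} - c|.
\end{aligned}
```

At the goal d = 0, which gives V*(goal) = R_goal / (1 − γ). Rewriting the result as [R_other + γ^d (R_goal − R_other)] / (1 − γ) shows it decreases as d grows whenever R_goal > R_other, so any action that reduces d is optimal; where both right and down reduce d, both are optimal.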

Now implement the Value Iteration algorithm to see whether it matches the result.
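A minimal value-iteration sketch follows. Because the figure and the numeric values are missing, it uses hypothetical placeholders: a 4×4 grid, `R_GOAL = 1.0`, `R_OTHER = 0.0`, `GAMMA = 0.9`, the convention that R(s) is collected at every step spent in s, and (0, 0) as the initial state. It repeats the Bellman optimality backup V(s) ← R(s) + γ max_a V(s') until the largest change is below `tol`, then compares the result with the shortest-path closed form V*(s) = [R_other(1 − γ^d) + γ^d R_goal] / (1 − γ), where d is the Manhattan distance to the goal (this assumes no interior walls). The helper names are illustrative only.

```python
import numpy as np

# Hypothetical placeholders: the grid size, rewards and discount factor are
# not recoverable from the extracted question text.
N_ROWS, N_COLS = 4, 4
GOAL = (N_ROWS - 1, N_COLS - 1)          # bottom right-hand corner
R_GOAL, R_OTHER, GAMMA = 1.0, 0.0, 0.9   # assumed values
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def step(state, action):
    """Deterministic move; walls keep you in place and the goal is absorbing."""
    if state == GOAL:
        return state
    r, c = state[0] + ACTIONS[action][0], state[1] + ACTIONS[action][1]
    return (r, c) if 0 <= r < N_ROWS and 0 <= c < N_COLS else state


def reward(state):
    """Assumed convention: R(s) is collected at every step spent in state s."""
    return R_GOAL if state == GOAL else R_OTHER


def value_iteration(tol=1e-10):
    """Apply V(s) <- R(s) + GAMMA * max_a V(step(s, a)) until the update is below tol."""
    V = np.zeros((N_ROWS, N_COLS))
    while True:
        V_new = np.empty_like(V)
        for r in range(N_ROWS):
            for c in range(N_COLS):
                s = (r, c)
                V_new[r, c] = reward(s) + GAMMA * max(V[step(s, a)] for a in ACTIONS)
        if np.max(np.abs(V_new - V)) < tol:
            return V_new
        V = V_new


def greedy_policy(V):
    """For each state, pick the action whose successor has the highest value."""
    return {(r, c): max(ACTIONS, key=lambda a: V[step((r, c), a)])
            for r in range(N_ROWS) for c in range(N_COLS)}


def closed_form(state):
    """V*(s) = [R_OTHER * (1 - g^d) + R_GOAL * g^d] / (1 - g), d = Manhattan distance to goal."""
    d = abs(GOAL[0] - state[0]) + abs(GOAL[1] - state[1])
    return (R_OTHER * (1 - GAMMA**d) + R_GOAL * GAMMA**d) / (1 - GAMMA)


V = value_iteration()
print(np.round(V, 3))
print(greedy_policy(V)[(0, 0)])  # action from the assumed initial state
print(all(np.isclose(V[r, c], closed_form((r, c)))
          for r in range(N_ROWS) for c in range(N_COLS)))
```

With the real values from the question substituted for the placeholders, the comparison should still hold as long as R_GOAL > R_OTHER and the grid has no interior obstacles. `greedy_policy` breaks ties (e.g. right vs. down) by the order of `ACTIONS`, so it returns one of possibly several optimal policies.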

Suppose you increase the reward at every grid by …; will the optimal policy change?
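A short derivation, assuming the increase Δ is added to the reward of every grid including the goal, and that R(s) is collected at every step (the absorbing goal makes every trajectory infinite). With Q*(s, a) = R(s) + γ V*(s'):

```latex
\begin{aligned}
V'^{\pi}(s) &= \sum_{t=0}^{\infty} \gamma^t \bigl(R(s_t) + \Delta\bigr)
             = V^{\pi}(s) + \frac{\Delta}{1-\gamma}, \\
Q'^{*}(s,a) &= Q^{*}(s,a) + \frac{\Delta}{1-\gamma}
\quad\Longrightarrow\quad
\arg\max_a Q'^{*}(s,a) = \arg\max_a Q^{*}(s,a).
\end{aligned}
```

Every policy's value shifts by the same constant, so under these assumptions the optimal policy does not change. The argument relies on all trajectories having the same (infinite) length; if reaching the goal ended the episode instead, shorter and longer paths would accumulate different numbers of Δ terms and the conclusion could differ.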
