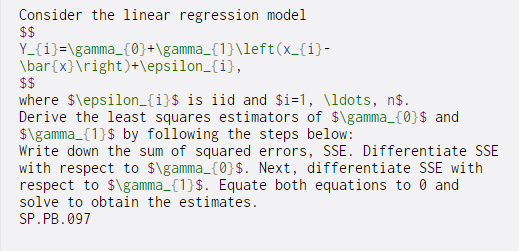


Consider the linear regression model

$$ Y_{i}=\gamma_{0}+\gamma_{1}\left(x_{i}-\bar{x}\right)+\epsilon_{i}, $$

where the $\epsilon_{i}$ are iid and $i=1, \ldots, n$. Derive the least squares estimators of $\gamma_{0}$ and $\gamma_{1}$ by following the steps below:

1. Write down the sum of squared errors, SSE.
2. Differentiate SSE with respect to $\gamma_{0}$.
3. Differentiate SSE with respect to $\gamma_{1}$.
4. Set both derivatives equal to 0 and solve to obtain the estimates.

SP.PB.097
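A sketch of the derivation following the four steps, assuming that not all $x_i$ are equal (so that $\sum_{i}(x_i-\bar{x})^2>0$). The key fact used in step 4 is that the centred covariate sums to zero, $\sum_{i=1}^{n}(x_i-\bar{x})=0$, which decouples the two normal equations.

Step 1 (sum of squared errors):

$$ \mathrm{SSE}(\gamma_0,\gamma_1)=\sum_{i=1}^{n}\left[Y_i-\gamma_0-\gamma_1\left(x_i-\bar{x}\right)\right]^2 $$

Step 2 (derivative with respect to $\gamma_0$):

$$ \frac{\partial\,\mathrm{SSE}}{\partial\gamma_0}=-2\sum_{i=1}^{n}\left[Y_i-\gamma_0-\gamma_1\left(x_i-\bar{x}\right)\right] $$

Step 3 (derivative with respect to $\gamma_1$):

$$ \frac{\partial\,\mathrm{SSE}}{\partial\gamma_1}=-2\sum_{i=1}^{n}\left(x_i-\bar{x}\right)\left[Y_i-\gamma_0-\gamma_1\left(x_i-\bar{x}\right)\right] $$

Step 4 (set both to 0 and solve, using $\sum_{i}(x_i-\bar{x})=0$):

$$ \begin{aligned} \sum_{i=1}^{n}Y_i-n\hat{\gamma}_0-\hat{\gamma}_1\sum_{i=1}^{n}\left(x_i-\bar{x}\right)=0 \quad&\Longrightarrow\quad \hat{\gamma}_0=\bar{Y}, \\ \sum_{i=1}^{n}\left(x_i-\bar{x}\right)Y_i-\hat{\gamma}_0\sum_{i=1}^{n}\left(x_i-\bar{x}\right)-\hat{\gamma}_1\sum_{i=1}^{n}\left(x_i-\bar{x}\right)^2=0 \quad&\Longrightarrow\quad \hat{\gamma}_1=\frac{\sum_{i=1}^{n}\left(x_i-\bar{x}\right)Y_i}{\sum_{i=1}^{n}\left(x_i-\bar{x}\right)^2}=\frac{\sum_{i=1}^{n}\left(x_i-\bar{x}\right)\left(Y_i-\bar{Y}\right)}{\sum_{i=1}^{n}\left(x_i-\bar{x}\right)^2}. \end{aligned} $$

This stationary point is a minimum: the Hessian of SSE is $\operatorname{diag}\!\left(2n,\; 2\sum_{i}(x_i-\bar{x})^2\right)$ (the cross term $2\sum_{i}(x_i-\bar{x})$ vanishes), which is positive definite under the assumption above.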

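For the centred model $Y_i=\gamma_0+\gamma_1(x_i-\bar{x})+\epsilon_i$, the least squares estimators are $\hat{\gamma}_0=\bar{Y}$ and $\hat{\gamma}_1=\sum_i(x_i-\bar{x})Y_i\big/\sum_i(x_i-\bar{x})^2$. These closed forms can be checked numerically. The sketch below assumes NumPy is available. The function name `ls_centered` and the simulated data (true values $\gamma_0=3$, $\gamma_1=2$, noise standard deviation 0.5) are hypothetical and chosen only for illustration. The sketch compares the closed forms against NumPy's generic `np.linalg.lstsq` solve on the design matrix with columns $1$ and $x_i-\bar{x}$.

```python
import numpy as np


def ls_centered(x, y):
    """Closed-form least squares estimates for Y_i = g0 + g1 * (x_i - xbar) + e_i.

    Returns (g0_hat, g1_hat) with
      g0_hat = mean(y)
      g1_hat = sum((x - xbar) * y) / sum((x - xbar) ** 2)
    (ls_centered is a hypothetical helper name used only for this illustration.)
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc = x - x.mean()          # centred covariate x_i - xbar; sums to zero
    sxx = np.sum(xc ** 2)      # must be > 0, i.e. not all x_i equal
    if sxx == 0:
        raise ValueError("gamma_1 is not identifiable when all x_i are equal")
    g0_hat = y.mean()                  # from dSSE/dg0 = 0
    g1_hat = np.sum(xc * y) / sxx      # from dSSE/dg1 = 0
    return g0_hat, g1_hat


# Hypothetical simulated data, used only to compare the closed forms
# with a generic least squares solve.
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=50)
y = 3.0 + 2.0 * (x - x.mean()) + rng.normal(scale=0.5, size=50)

g0_hat, g1_hat = ls_centered(x, y)

# Generic least squares on the design matrix [1, x_i - xbar]
X = np.column_stack([np.ones_like(x), x - x.mean()])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)

print("closed form:", g0_hat, g1_hat)
print("lstsq:      ", coef)
```

Both printed pairs should agree up to floating-point rounding, because `lstsq` minimises the same SSE over the same two columns.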