Question: Consider the two-class classification problem where the class label y (0; 1) and each training example X has 2 binary attributes X, X (0;

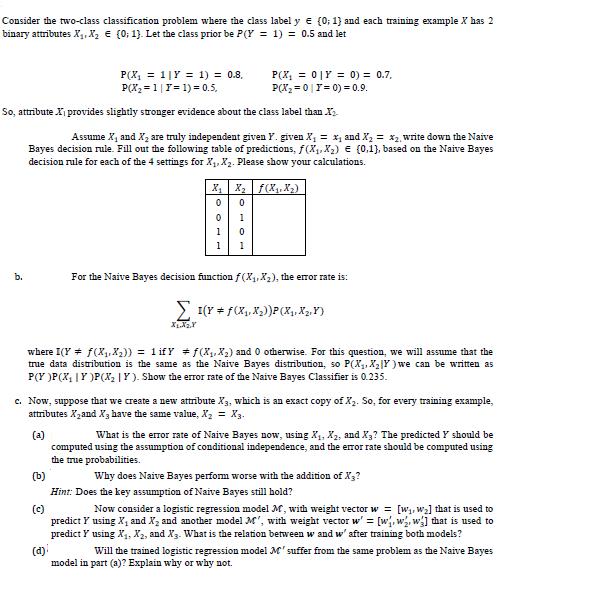

Consider the two-class classification problem where the class label y (0; 1) and each training example X has 2 binary attributes X, X (0; 1). Let the class prior be P(Y = 1) = 0.5 and let P(X = 1 Y = 1) = 0.8. P(X = 1 | Y= 1) = 0.5, So, attribute X provides slightly stronger evidence about the class label than X Assume X, and X are truly independent given Y. given X = x, and X = x, write down the Naive Bayes decision rule. Fill out the following table of predictions, f(X, X) (0.1), based on the Naive Bayes decision rule for each of the 4 settings for X, X. Please show your calculations. b. X X f(XX) 0 0 1 1 (c) OHOA 0 1 0 P(X = 01Y = 0) = 0.7, P(X=0|1= 0) = 0.9. 1 For the Naive Bayes decision function f(X, X), the error rate is: I(YfX, X))P(X, X,Y) where I(Y = f(XX)) = 1ify = f(X, X) and 0 otherwise. For this question, we will assume that the true data distribution is the same as the Naive Bayes distribution, so P(X, XY) we can be written as P(Y)P(X|Y)P(X | Y). Show the error rate of the Naive Bayes Classifier is 0.235. c. Now, suppose that we create a new attribute X3, which is an exact copy of X. So, for every training example, attributes Xand X3 have the same value, X = X3. (a) What is the error rate of Naive Bayes now, using X, X, and X3? The predicted y should be computed using the assumption of conditional independence, and the error rate should be computed using the true probabilities. (b) Why does Naive Bayes perform worse with the addition of X3? Hint: Does the key assumption of Naive Bayes still hold? Now consider a logistic regression model M, with weight vector w = [w, W] that is used to predict Y using X, and X and another model M', with weight vector w' = [w, w, w] that is used to predict Y using X, X2, and X3. What is the relation between w and w' after training both models? Will the trained logistic regression model M' suffer from the same problem as the Naive Bayes model in part (a)? Explain why or why not.

Step by Step Solution

3.41 Rating (157 Votes )

There are 3 Steps involved in it

a The error rate of the Naive Bayes Classifier using X1 X2 is Error rate PY fX1 X2 PY 0 fX1 X2 1 PY ... View full answer

Get step-by-step solutions from verified subject matter experts