Question:

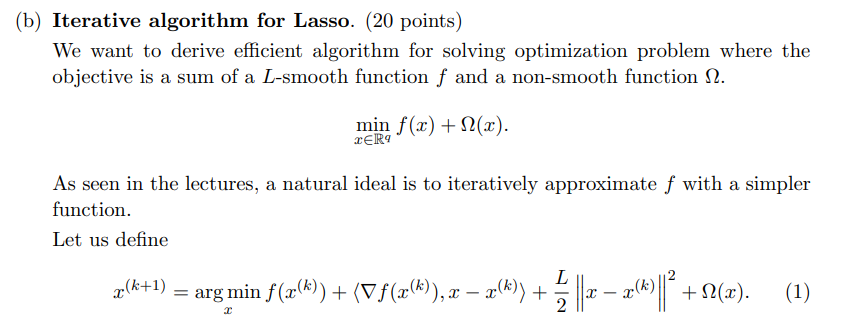

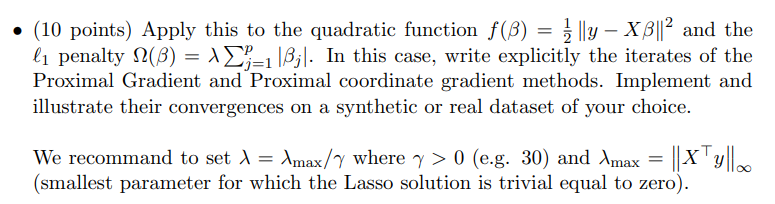

D) Iterative algorithm for Lasso. (20 points) We want to derive an efficient algorithm for solving optimization problems where the objective is the sum of an $L$-smooth function $f$ and a non-smooth function $\Omega$:

$$\min_{x \in \mathbb{R}^q} f(x) + \Omega(x).$$

As seen in the lectures, a natural idea is to iteratively approximate $f$ with a simpler function. Let us define

$$x^{(k+1)} = \arg\min_{x} \; f(x^{(k)}) + \langle \nabla f(x^{(k)}),\, x - x^{(k)} \rangle + \frac{L}{2} \|x - x^{(k)}\|^2 + \Omega(x).$$

- (10 points) Apply this to the quadratic function $f(\beta) = \frac{1}{2} \|y - X\beta\|^2$ and the $\ell_1$ penalty $\Omega(\beta) = \lambda \sum_{j=1}^{p} |\beta_j|$. In this case, write explicitly the iterates of the Proximal Gradient and Proximal Coordinate Gradient methods. Implement them and illustrate their convergence on a synthetic or real dataset of your choice. We recommend setting $\lambda = \lambda_{\max} / \delta$ where $\delta > 0$ (e.g. 30) and $\lambda_{\max} = \|X^\top y\|_\infty$ (the smallest parameter for which the Lasso solution is trivially equal to zero).
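One possible way to approach the implementation part is sketched below; it is a sketch under stated assumptions, not a reference solution. For the Lasso, minimizing the quadratic surrogate gives a soft-thresholding step. Writing $\mathrm{ST}(z, t) = \mathrm{sign}(z) \max(|z| - t, 0)$, the proximal gradient iterate is $\beta^{(k+1)} = \mathrm{ST}\big(\beta^{(k)} - \tfrac{1}{L} X^\top (X\beta^{(k)} - y),\ \lambda / L\big)$ with $L = \|X\|_2^2$ (the largest eigenvalue of $X^\top X$). The coordinate version updates one entry at a time, $\beta_j \leftarrow \mathrm{ST}\big(\beta_j + X_j^\top r / \|X_j\|^2,\ \lambda / \|X_j\|^2\big)$, where $r = y - X\beta$ is the current residual. The function names, the cyclic coordinate order, the synthetic data (sizes, sparsity, noise level, seed) and the iteration count are all assumptions made for illustration.

```python
import numpy as np


def soft_threshold(z, t):
    """Proximal operator of t * |.|, applied elementwise."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)


def lasso_objective(X, y, beta, lam):
    """0.5 * ||y - X beta||^2 + lam * ||beta||_1."""
    return 0.5 * np.sum((y - X @ beta) ** 2) + lam * np.sum(np.abs(beta))


def proximal_gradient(X, y, lam, n_iter=500):
    """Proximal gradient (ISTA) with constant step 1 / L."""
    L = np.linalg.norm(X, 2) ** 2  # squared spectral norm of X
    beta = np.zeros(X.shape[1])
    history = []
    for _ in range(n_iter):
        grad = X.T @ (X @ beta - y)
        beta = soft_threshold(beta - grad / L, lam / L)
        history.append(lasso_objective(X, y, beta, lam))
    return beta, np.array(history)


def proximal_coordinate_descent(X, y, lam, n_iter=500):
    """Cyclic proximal coordinate descent; one pass over all coordinates per iteration."""
    L_j = np.sum(X ** 2, axis=0)  # coordinate-wise constants ||X_j||^2
    beta = np.zeros(X.shape[1])
    residual = y - X @ beta
    history = []
    for _ in range(n_iter):
        for j in range(X.shape[1]):
            if L_j[j] == 0.0:
                continue  # a zero column never influences the fit
            old = beta[j]
            grad_j = -X[:, j] @ residual
            beta[j] = soft_threshold(old - grad_j / L_j[j], lam / L_j[j])
            if beta[j] != old:
                # keep the residual in sync with the updated coordinate
                residual -= X[:, j] * (beta[j] - old)
        history.append(lasso_objective(X, y, beta, lam))
    return beta, np.array(history)


# Synthetic data (assumed setup): 5 active features out of 50, small Gaussian noise.
rng = np.random.default_rng(0)
n, p = 100, 50
X = rng.standard_normal((n, p))
beta_true = np.zeros(p)
beta_true[:5] = 1.0
y = X @ beta_true + 0.1 * rng.standard_normal(n)

lam_max = np.linalg.norm(X.T @ y, np.inf)
lam = lam_max / 30

beta_pg, obj_pg = proximal_gradient(X, y, lam)
beta_cd, obj_cd = proximal_coordinate_descent(X, y, lam)

# Print the objective at a few iterations to compare convergence speed.
for k in (0, 9, 49, 499):
    print(f"iter {k + 1:4d}  PG: {obj_pg[k]:.6f}  CD: {obj_cd[k]:.6f}")
```

To illustrate convergence graphically, the two `history` arrays can be plotted against the iteration index on a logarithmic scale, for instance after subtracting the smallest objective value reached. Note that one coordinate descent iteration here is a full pass over all $p$ coordinates, so its cost is comparable to one proximal gradient step.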
