Deep Learning (Relu and Sigmoid)

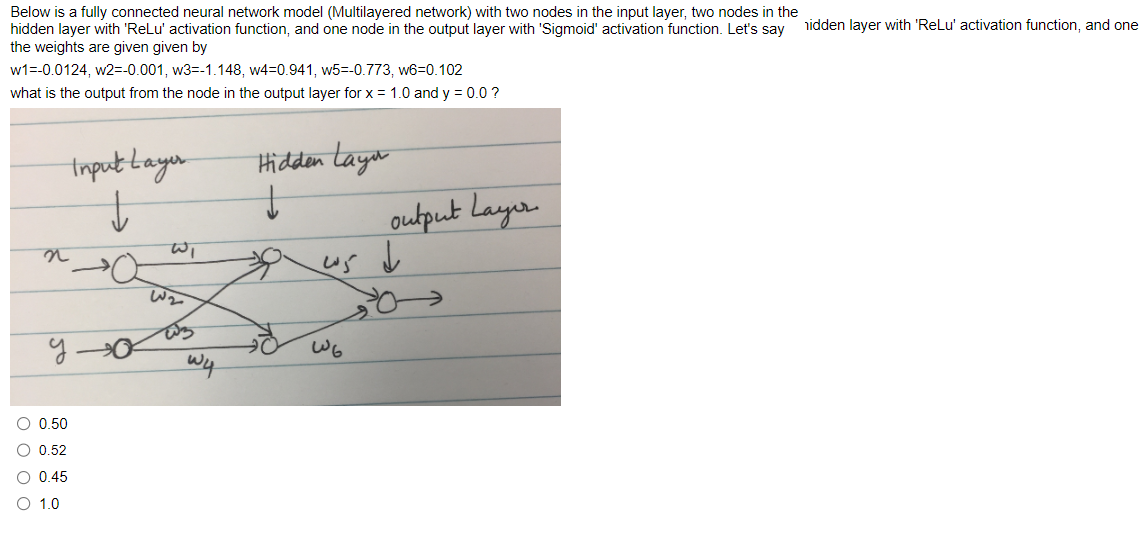

The figure shows a fully connected neural network model (multilayer network) with two nodes in the input layer, two nodes in the hidden layer with the ReLU activation function, and one node in the output layer with the sigmoid activation function. Suppose the weights are given by w1 = 0.0124, w2 = 0.001, w3 = 1.148, w4 = 0.941, w5 = 0.773, w6 = 0.102. What is the output from the node in the output layer for x = 1.0 and y = 0.0?

- 0.50
- 0.52
- 0.45
- 1.0
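The forward pass can be sketched in a few lines of Python. The text does not say which edge each weight belongs to (that is defined only by the figure), so the mapping below is an assumption: w1 and w2 connect x to the two hidden nodes, w3 and w4 connect y to the two hidden nodes, and w5 and w6 connect the hidden nodes to the output. It also assumes there are no bias terms, since none are given. The function names `relu`, `sigmoid`, and `forward` are illustrative.

```python
import math


def relu(z):
    """ReLU activation: passes positive values, clamps negatives to 0."""
    return max(0.0, z)


def sigmoid(z):
    """Sigmoid activation: squashes any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, y, weights):
    """Forward pass of a 2-2-1 network with no biases.

    ASSUMED edge labeling (not confirmed by the text):
      w1: x -> h1, w2: x -> h2, w3: y -> h1, w4: y -> h2,
      w5: h1 -> out, w6: h2 -> out
    """
    w1, w2, w3, w4, w5, w6 = weights
    h1 = relu(w1 * x + w3 * y)  # first hidden node
    h2 = relu(w2 * x + w4 * y)  # second hidden node
    return sigmoid(w5 * h1 + w6 * h2)  # output node


weights = (0.0124, 0.001, 1.148, 0.941, 0.773, 0.102)
output = forward(1.0, 0.0, weights)
print(round(output, 4))  # 0.5024 under the assumed labeling
```

Because y = 0.0, only the weights on the edges leaving x affect the hidden layer. Under the assumed labeling the result rounds to 0.50. A different labeling (for example, w3 on the edge from x to the second hidden node) changes the result, so the mapping should be checked against the figure.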
