Question: Dimensionality Reduction Using PCA (project due Jul 10, 2024 17:29 IST)

Dimensionality Reduction Using PCA


Project due Jul 10, 2024 17:29 IST

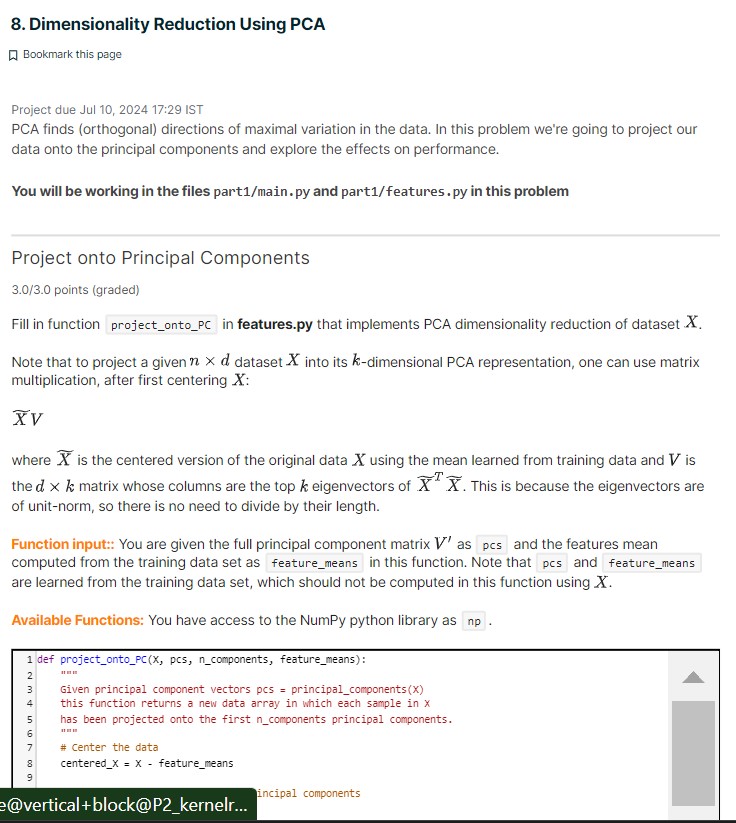

PCA finds orthogonal directions of maximal variation in the data. In this problem we're going to project our data onto the principal components and explore the effects on performance.
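As a rough illustration of "orthogonal directions of maximal variation", the sketch below computes principal components from the eigen-decomposition of the centered scatter matrix. The function name principal_components_sketch and the synthetic data are assumptions made for this example; they are not taken from the course files.

```python
import numpy as np

def principal_components_sketch(X):
    """Hypothetical helper: eigenvectors of the centered scatter matrix,
    returned as columns sorted by decreasing eigenvalue."""
    centered = X - X.mean(axis=0)               # subtract each feature's mean
    scatter = centered.T @ centered             # d x d scatter matrix
    eigvals, eigvecs = np.linalg.eigh(scatter)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]           # reorder to descending
    return eigvecs[:, order], eigvals[order]

# Synthetic data whose variation is largest along the first axis (assumed example).
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(200, 3)) * np.array([5.0, 1.0, 0.2])
pcs_demo, variances_demo = principal_components_sketch(X_demo)
```

Because the scatter matrix is symmetric, the returned columns are orthonormal, and the first column points (up to sign) along the axis with the largest spread.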

You will be working in the files main.py and features.py for this part of the project.

Project onto Principal Components


Fill in the projection function in features.py that implements PCA dimensionality reduction of the dataset X.

Note that to project a given $n \times d$ dataset $X$ into its $k$-dimensional PCA representation, one can use matrix multiplication, after first centering $X$:

$$\widetilde{X} V$$

where $\widetilde{X}$ is the centered version of the original data $X$ using the mean learned from training data and $V$ is the $d \times k$ matrix whose columns are the top $k$ eigenvectors of $\widetilde{X}^T \widetilde{X}$. This is because the eigenvectors are of unit norm, so there is no need to divide by their length.
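The projection described above (centered data multiplied by the matrix of top eigenvectors) might be sketched as follows. The function name project_onto_pc_sketch, the n_components parameter, and the underscore in feature_means are assumptions for illustration; the actual signature in features.py may differ.

```python
import numpy as np

def project_onto_pc_sketch(X, pcs, n_components, feature_means):
    """Hypothetical sketch: center X with the training means, then project
    onto the first n_components columns of pcs."""
    centered = X - feature_means       # (d,) means broadcast across the n rows
    top = pcs[:, :n_components]        # d x k matrix of unit-norm eigenvectors
    return centered @ top              # n x k projected data

# Tiny hand-checkable example (values chosen for illustration only).
X_small = np.array([[2.0, 0.0], [0.0, 2.0], [4.0, 4.0]])
means_small = np.array([2.0, 2.0])
pcs_small = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2.0)
projected_small = project_onto_pc_sketch(X_small, pcs_small, 1, means_small)
```

Since each column of pcs already has unit length, the dot product with a centered sample is its coordinate along that direction, with no extra normalization.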

Function input: You are given the full principal component matrix as pcs and the feature means computed from the training data set as featuremeans in this function. Note that pcs and featuremeans are learned from the training data set, so they should not be recomputed in this function from the data passed to it.

Available Functions: You have access to the NumPy python library as np.

The function returns a new data array in which each sample in X has been centered using featuremeans and projected onto the principal components.
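To show why the means and components are passed in rather than recomputed, here is a small sketch in which both are learned once from training data and then reused unchanged on test data. The arrays train_x and test_x, the helper project, and the choice of 2 components are all hypothetical.

```python
import numpy as np

# Synthetic stand-ins for the training and test sets (assumed for illustration).
rng = np.random.default_rng(0)
train_x = rng.normal(loc=3.0, size=(100, 4))
test_x = rng.normal(loc=3.0, size=(20, 4))

# Learn the feature means and principal components from training data only.
feature_means = train_x.mean(axis=0)
centered_train = train_x - feature_means
_, eigvecs = np.linalg.eigh(centered_train.T @ centered_train)
pcs = eigvecs[:, ::-1]  # eigh sorts ascending, so reverse columns to descending

def project(X, pcs, n_components, feature_means):
    """Hypothetical helper: center with the given means, then project."""
    return (X - feature_means) @ pcs[:, :n_components]

# The same training statistics are applied to both sets.
train_pca = project(train_x, pcs, 2, feature_means)
test_pca = project(test_x, pcs, 2, feature_means)
```

Centering the test set with its own mean would place it in a slightly different coordinate system from the one the model was trained in, which is why the training statistics are reused.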
