```python
import numpy as np
from matplotlib import pyplot as plt
import cv2

METHOD = 'SIFT'  # 'SIFT', 'SURF', 'ORB'

# Read the images (cv2.imread returns None instead of raising when a file is missing)
img1 = cv2.imread('input/img1.ppm')
img2 = cv2.imread('input/img2.ppm')
if img1 is None or img2 is None:
    raise FileNotFoundError('Could not read input/img1.ppm or input/img2.ppm')

# cv2.imshow('Input', img1)

# Convert the images to grayscale
img1_gray = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
img2_gray = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

if METHOD == 'SIFT':
    print('Calculating SIFT features...')
    # Create a SIFT object. OpenCV >= 4.4 has SIFT in the main module;
    # older builds only provide it through opencv-contrib (cv2.xfeatures2d).
    if hasattr(cv2, 'SIFT_create'):
        sift = cv2.SIFT_create()
    else:
        sift = cv2.xfeatures2d.SIFT_create()
    # Get the keypoints and the descriptors
    keypoints1, descriptors1 = sift.detectAndCompute(img1_gray, None)
    keypoints2, descriptors2 = sift.detectAndCompute(img2_gray, None)
    # keypoints is a sequence of cv2.KeyPoint objects (position, size, angle, etc.)
    # descriptors is an array. For SIFT, each row is a 128-length feature vector
    norm = cv2.NORM_L2
elif METHOD == 'SURF':
    print('Calculating SURF features...')
    # SURF needs an opencv-contrib build with the nonfree modules enabled.
    # 4000 is the Hessian threshold: higher values keep fewer, stronger keypoints.
    surf = cv2.xfeatures2d.SURF_create(4000)
    keypoints1, descriptors1 = surf.detectAndCompute(img1_gray, None)
    keypoints2, descriptors2 = surf.detectAndCompute(img2_gray, None)
    norm = cv2.NORM_L2
elif METHOD == 'ORB':
    print('Calculating ORB features...')
    orb = cv2.ORB_create()
    keypoints1, descriptors1 = orb.detectAndCompute(img1_gray, None)
    keypoints2, descriptors2 = orb.detectAndCompute(img2_gray, None)
    # ORB descriptors are binary strings, so they are compared with the Hamming distance
    norm = cv2.NORM_HAMMING
else:
    raise ValueError(f'Unknown METHOD: {METHOD}')

# Draw the keypoints on copies so the originals stay clean for drawMatches
img1_kp = cv2.drawKeypoints(img1, keypoints1, None, color=(0, 0, 255),
                            flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
img2_kp = cv2.drawKeypoints(img2, keypoints2, None, color=(0, 0, 255),
                            flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

# Display the images (the HighGUI windows only render once waitKey is called)
cv2.imshow('Keypoints 1', img1_kp)
cv2.imshow('Keypoints 2', img2_kp)
cv2.waitKey(0)
cv2.destroyAllWindows()

# Create a brute-force descriptor matcher object.
# Different distances can be used; the norm must suit the descriptor type.
bf = cv2.BFMatcher(norm, crossCheck=True)

# Match keypoints
matches1to2 = bf.match(descriptors1, descriptors2)

# matches1to2 is a sequence of DMatch objects.
# LOOK AT THE OPENCV DOCUMENTATION AND
# LEARN ABOUT THE DMatch OBJECT AND ITS FIELDS, SPECIFICALLY THE STRENGTH OF A MATCH:
# matches1to2[0].distance (a smaller distance means more similar descriptors)

# Sort according to distance and display the first 40 matches
matches1to2 = sorted(matches1to2, key=lambda m: m.distance)
img3 = cv2.drawMatches(img1, keypoints1, img2, keypoints2, matches1to2[:40], None,
                       flags=2)  # 2 = NOT_DRAW_SINGLE_POINTS: draw matched keypoints only

# OpenCV stores images as BGR; Matplotlib expects RGB
plt.imshow(cv2.cvtColor(img3, cv2.COLOR_BGR2RGB))
plt.show()
```
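The script keeps cross-checked matches and ranks them by `DMatch.distance`. For finding the best matches, one common alternative is Lowe's ratio test: keep a match only when its nearest neighbour is clearly closer than the second-nearest one. The NumPy sketch below illustrates that idea under stated assumptions and is not the handout's prescribed method: `ratio_test_matches` is a hypothetical helper, it assumes float descriptors compared with the L2 norm (SIFT/SURF, not binary ORB descriptors), and 0.75 is a conventional starting ratio rather than a tuned value.

```python
import numpy as np


def ratio_test_matches(desc1, desc2, ratio=0.75):
    """Hypothetical helper: return (i, j) pairs that pass Lowe's ratio test.

    desc1: (N1, D) array, desc2: (N2, D) array with N2 >= 2. Row i of desc1 is
    matched to its nearest row j of desc2 (L2 distance) only if that distance
    is below `ratio` times the distance to the second-nearest row.
    """
    d1 = np.asarray(desc1, dtype=np.float64)
    d2 = np.asarray(desc2, dtype=np.float64)
    # Pairwise squared L2 distances via ||a||^2 + ||b||^2 - 2 a.b
    sq = (np.sum(d1 ** 2, axis=1)[:, None]
          + np.sum(d2 ** 2, axis=1)[None, :]
          - 2.0 * d1 @ d2.T)
    dist = np.sqrt(np.maximum(sq, 0.0))  # clamp tiny negatives caused by rounding
    # Indices of the two nearest neighbours in desc2 for every row of desc1
    order = np.argsort(dist, axis=1)[:, :2]
    rows = np.arange(len(d1))
    best = dist[rows, order[:, 0]]
    second = dist[rows, order[:, 1]]
    keep = best < ratio * second
    return [(int(i), int(order[i, 0])) for i in np.flatnonzero(keep)]
```

Index `i` refers to `keypoints1` and `j` to `keypoints2`. With OpenCV objects, the same test can be applied to the pairs returned by `cv2.BFMatcher(norm).knnMatch(descriptors1, descriptors2, k=2)` (matcher created without `crossCheck=True`), keeping `m` whenever `m.distance < 0.75 * n.distance`.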

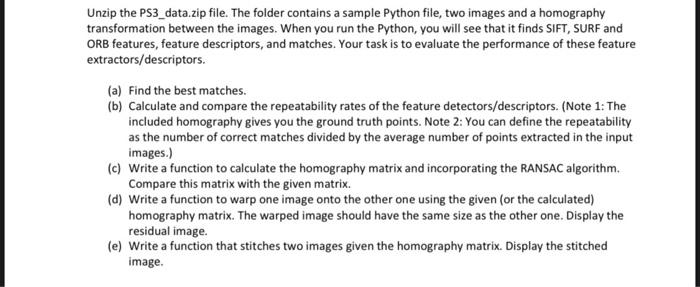

Unzip the PS3_data.zip file. The folder contains a sample Python file, two images, and a homography transformation between the images. When you run the Python file, it detects SIFT, SURF, or ORB features (depending on the `METHOD` setting), computes their descriptors, and matches them. Your task is to evaluate the performance of these feature extractors/descriptors.

(a) Find the best matches.

(b) Calculate and compare the repeatability rates of the feature detectors/descriptors. (Note 1: The included homography gives you the ground-truth points. Note 2: You can define the repeatability as the number of correct matches divided by the average number of points extracted in the input images.)

(c) Write a function that calculates the homography matrix and incorporates the RANSAC algorithm. Compare this matrix with the given matrix.

(d) Write a function that warps one image onto the other using the given (or the calculated) homography matrix. The warped image should have the same size as the other one. Display the residual image.

(e) Write a function that stitches two images given the homography matrix. Display the stitched image.
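Note 2 in part (b) defines repeatability as the number of correct matches divided by the mean keypoint count of the two images. A minimal NumPy sketch of that computation follows. It assumes the given homography maps image-1 pixel coordinates to image-2 coordinates, and it treats a match as correct when the projected point lands within `tol` pixels of its partner; the 3-pixel default is an arbitrary choice, not something the handout specifies. `project` and `repeatability` are hypothetical helper names.

```python
import numpy as np


def project(H, pts):
    """Map (N, 2) points through a 3x3 homography H (homogeneous divide included)."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def repeatability(pts1, pts2, H, n_kp1, n_kp2, tol=3.0):
    """Correct matches divided by the average keypoint count of the two images.

    pts1[k] (image 1) is matched to pts2[k] (image 2). The match is counted as
    correct when H maps pts1[k] to within `tol` pixels of pts2[k].
    Returns (rate, boolean mask of correct matches).
    """
    err = np.linalg.norm(project(H, pts1) - np.asarray(pts2, dtype=np.float64), axis=1)
    correct = err < tol
    rate = correct.sum() / ((n_kp1 + n_kp2) / 2.0)
    return float(rate), correct
```

With the script's variables, the inputs would be `pts1 = np.float32([keypoints1[m.queryIdx].pt for m in matches1to2])`, `pts2` built the same way from `keypoints2` and `m.trainIdx`, and `n_kp1 = len(keypoints1)`, `n_kp2 = len(keypoints2)`. If the homography is stored as a plain-text 3x3 matrix, `np.loadtxt` can read it.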
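For part (c), the sketch below estimates a homography with RANSAC in plain NumPy so each step stays visible. The normalized direct linear transform (DLT) fits H to four or more correspondences. RANSAC repeatedly fits random 4-point samples, keeps the model with the most inliers, and then refits on those inliers. The function names, the 3-pixel inlier threshold, and the fixed iteration count (rather than an adaptive one) are illustrative assumptions.

```python
import numpy as np


def project(H, pts):
    """Map (N, 2) points through a 3x3 homography H."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def _normalize(pts):
    """Hartley normalisation: centroid to the origin, mean distance sqrt(2)."""
    mean = pts.mean(axis=0)
    scale = np.sqrt(2) / np.mean(np.linalg.norm(pts - mean, axis=1))
    T = np.array([[scale, 0, -scale * mean[0]],
                  [0, scale, -scale * mean[1]],
                  [0, 0, 1.0]])
    return project(T, pts), T


def dlt_homography(src, dst):
    """Estimate H with dst ~ H @ src from >= 4 correspondences (normalised DLT)."""
    src_n, T1 = _normalize(np.asarray(src, dtype=np.float64))
    dst_n, T2 = _normalize(np.asarray(dst, dtype=np.float64))
    A = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        A.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        A.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # h is the right singular vector of A with the smallest singular value
    _, _, vt = np.linalg.svd(np.asarray(A))
    H = np.linalg.inv(T2) @ vt[-1].reshape(3, 3) @ T1  # undo the normalisation
    return H / H[2, 2]


def ransac_homography(src, dst, n_iters=2000, tol=3.0, seed=0):
    """RANSAC: fit random 4-point samples, keep the largest inlier set, refit on it."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        idx = rng.choice(len(src), 4, replace=False)
        with np.errstate(all='ignore'):  # degenerate samples may yield inf/NaN
            try:
                H = dlt_homography(src[idx], dst[idx])
            except np.linalg.LinAlgError:
                continue
            err = np.linalg.norm(project(H, src) - dst, axis=1)
            inliers = err < tol  # NaN errors compare as False
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 4:
        raise ValueError('RANSAC found fewer than 4 inliers')
    return dlt_homography(src[best], dst[best]), best
```

A homography is defined only up to scale, so both matrices should be divided by their bottom-right entry before being compared, for example element by element or through the reprojection error on the matched points. `cv2.findHomography(src, dst, cv2.RANSAC, 3.0)` gives a library result to check against.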
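For part (d), one way to warp is inverse mapping. Each pixel of the output, which has the target image's size, is mapped back through the inverse of H and sampled from the source image with nearest-neighbour lookup. This sketch assumes H maps source-image coordinates to target-image coordinates and that the images are 8-bit. `warp_image` and `residual_image` are hypothetical names. `cv2.warpPerspective(img1, H, (width, height))` performs the same warp with interpolation and makes a useful cross-check.

```python
import numpy as np


def warp_image(img, H, out_shape):
    """Warp `img` with H (img coords -> output coords) into an (h, w) output.

    Each output pixel (x, y) is pulled from the inverse of H applied to (x, y),
    using nearest-neighbour sampling. Pixels that fall outside `img` stay 0.
    """
    h_out, w_out = out_shape
    ys, xs = np.mgrid[0:h_out, 0:w_out]
    coords = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])  # 3 x N rows: x, y, 1
    src = np.linalg.inv(H) @ coords
    sx = np.rint(src[0] / src[2]).astype(int)
    sy = np.rint(src[1] / src[2]).astype(int)
    valid = (sx >= 0) & (sx < img.shape[1]) & (sy >= 0) & (sy < img.shape[0])
    out = np.zeros((h_out, w_out) + img.shape[2:], dtype=img.dtype)
    out[ys.ravel()[valid], xs.ravel()[valid]] = img[sy[valid], sx[valid]]
    return out


def residual_image(warped, target):
    """Absolute per-pixel difference, computed in int32 to avoid uint8 wrap-around."""
    return np.abs(warped.astype(np.int32) - target.astype(np.int32)).astype(np.uint8)
```

Typical use is `warped = warp_image(img1, H, img2.shape[:2])` followed by `residual = residual_image(warped, img2)`. Pixels outside image 1's footprint are zero in `warped`, so they appear as large residuals; mask them out if only the overlap is of interest.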
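For part (e), a simple stitching approach works in four steps. First, grow the canvas until it holds image 2 plus the warped corners of image 1. Second, apply a translation so all coordinates are non-negative. Third, warp image 1 into the canvas. Fourth, paste image 2 on top. The sketch does no blending: image 2 simply wins where the two overlap. It also assumes both images share dtype and channel count and that H maps image-1 coordinates to image-2 coordinates. `stitch` is a hypothetical name.

```python
import numpy as np


def stitch(img1, img2, H):
    """Stitch img1 into img2's plane, where H maps img1 coords to img2 coords.

    img1 is warped with nearest-neighbour inverse mapping; img2 is pasted
    unchanged and overwrites img1 wherever the two overlap (no blending).
    """
    h1, w1 = img1.shape[:2]
    h2, w2 = img2.shape[:2]
    # Warp img1's four corners to find how far the canvas must extend
    corners = np.array([[0, 0, 1], [w1 - 1, 0, 1],
                        [w1 - 1, h1 - 1, 1], [0, h1 - 1, 1]], dtype=float).T
    wc = H @ corners
    wc = wc[:2] / wc[2]
    x_min = int(np.floor(min(wc[0].min(), 0)))
    y_min = int(np.floor(min(wc[1].min(), 0)))
    x_max = int(np.ceil(max(wc[0].max(), w2 - 1)))
    y_max = int(np.ceil(max(wc[1].max(), h2 - 1)))
    # Translation that moves everything into non-negative canvas coordinates
    T = np.array([[1, 0, -x_min], [0, 1, -y_min], [0, 0, 1]], dtype=float)
    out_h, out_w = y_max - y_min + 1, x_max - x_min + 1
    # Inverse-map every canvas pixel back into img1
    ys, xs = np.mgrid[0:out_h, 0:out_w]
    coords = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])
    src = np.linalg.inv(T @ H) @ coords
    sx = np.rint(src[0] / src[2]).astype(int)
    sy = np.rint(src[1] / src[2]).astype(int)
    valid = (sx >= 0) & (sx < w1) & (sy >= 0) & (sy < h1)
    canvas = np.zeros((out_h, out_w) + img1.shape[2:], dtype=img1.dtype)
    canvas[ys.ravel()[valid], xs.ravel()[valid]] = img1[sy[valid], sx[valid]]
    # Paste img2 at its translated position
    canvas[-y_min:-y_min + h2, -x_min:-x_min + w2] = img2
    return canvas
```

A strongly projective H can produce a very large canvas. Averaging or feathering in the overlap region, instead of overwriting, would make the seam less visible.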