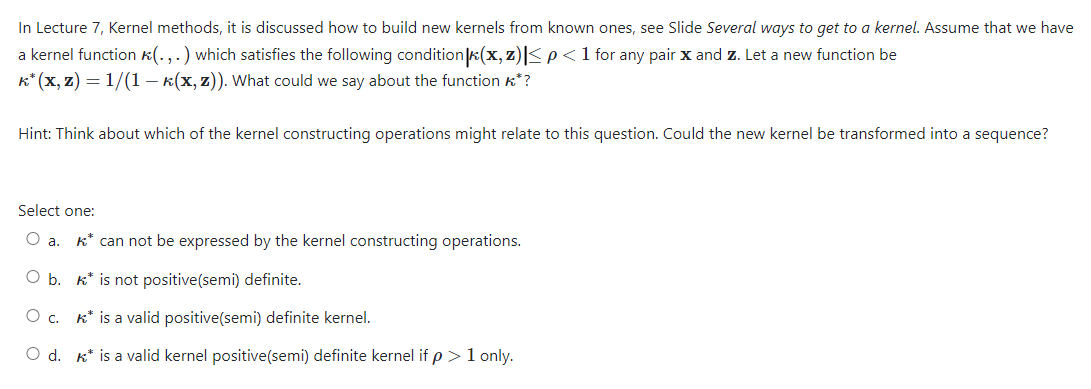

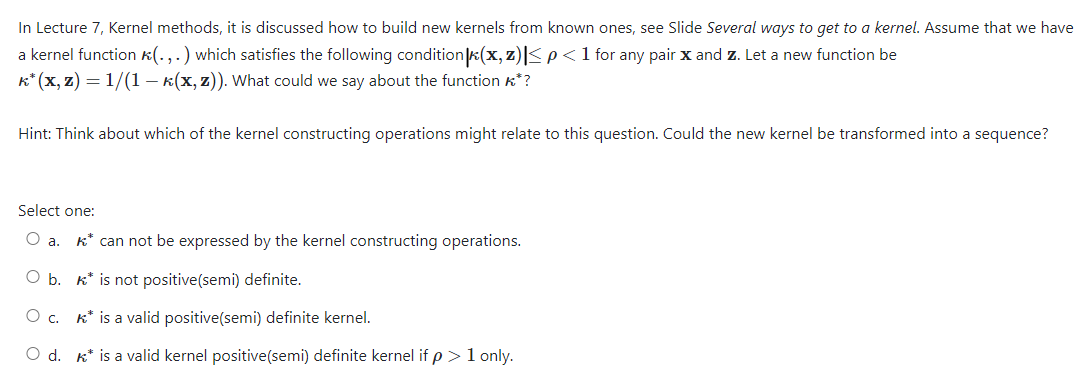

Question: In Lecture 7, Kernel methods, it is discussed how to build new kernels from known ones, see Slide Several ways to get to a kernel.

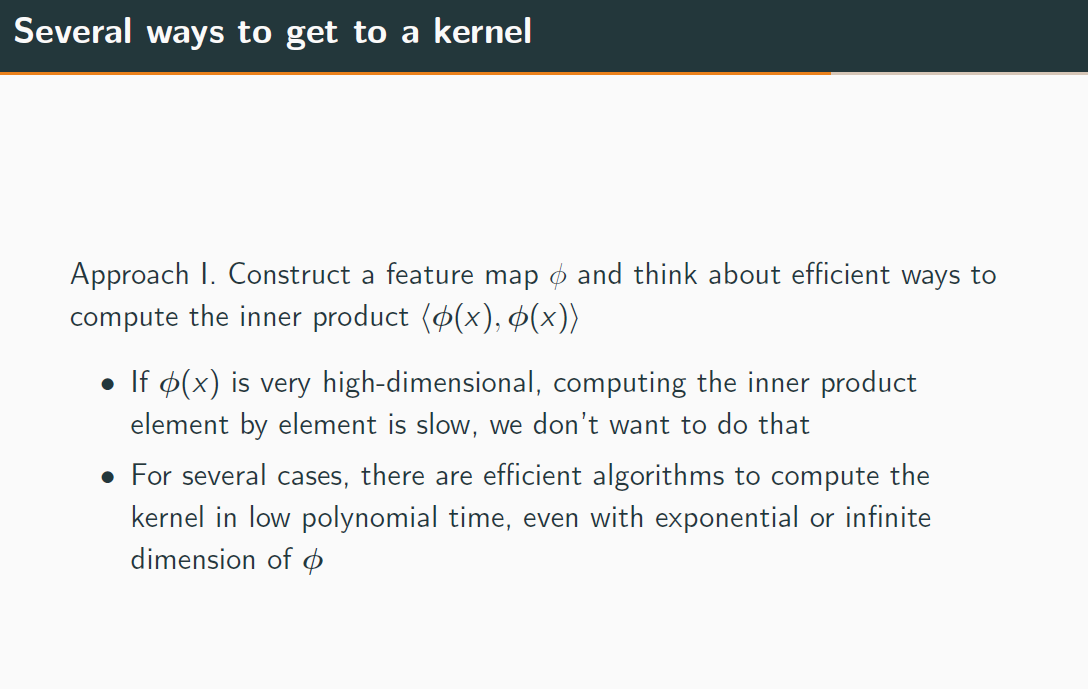

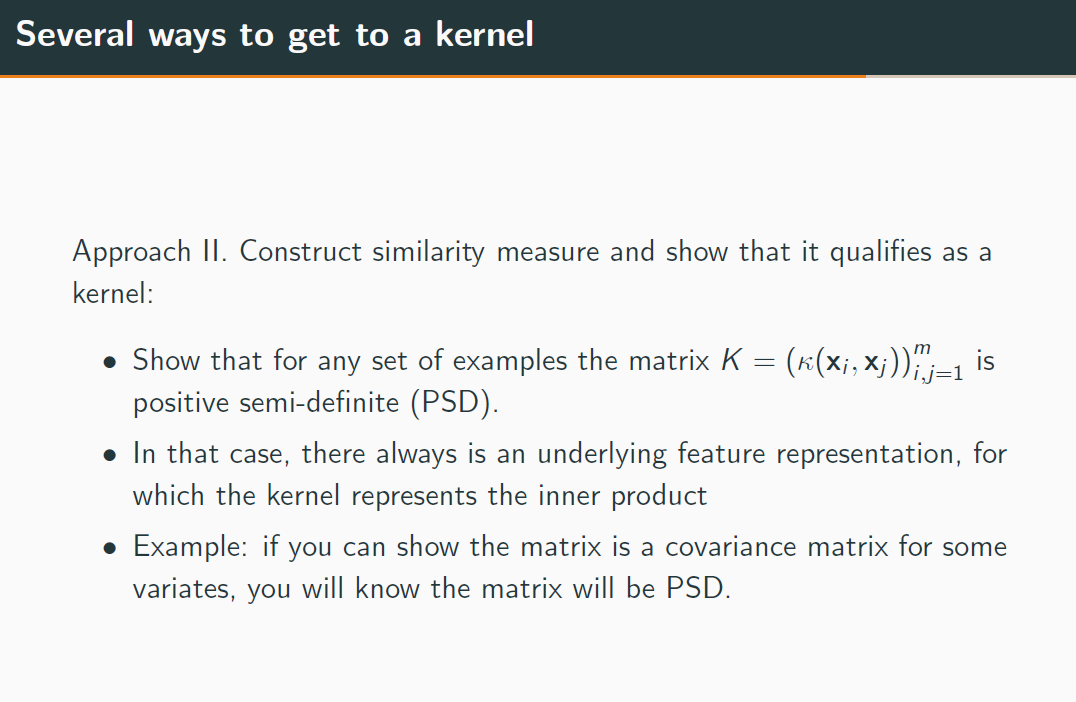

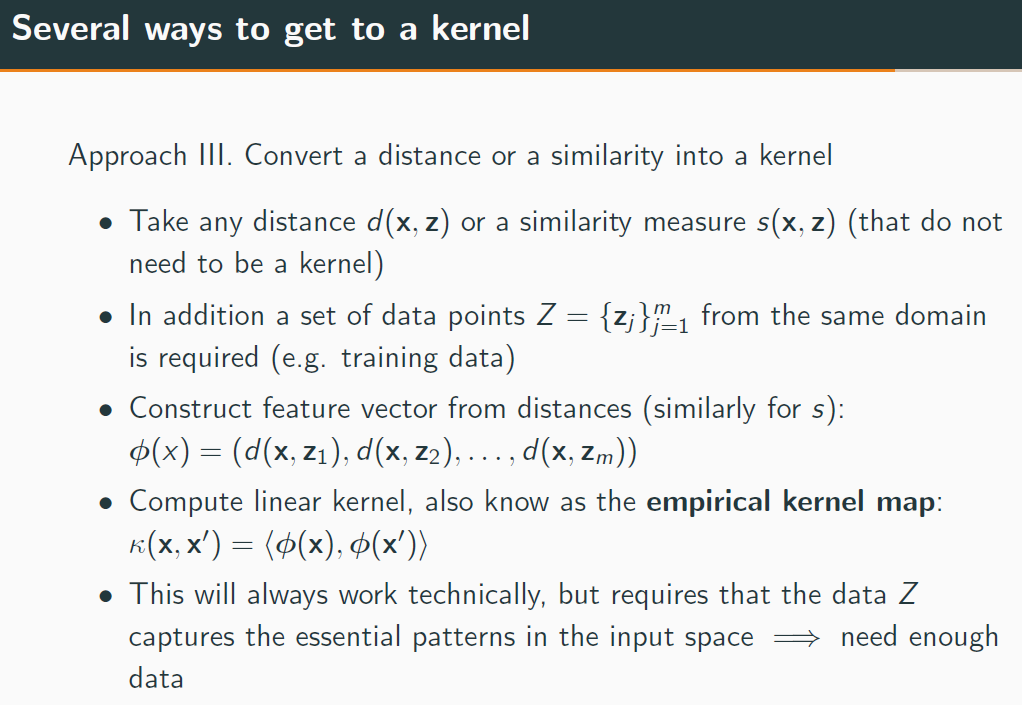

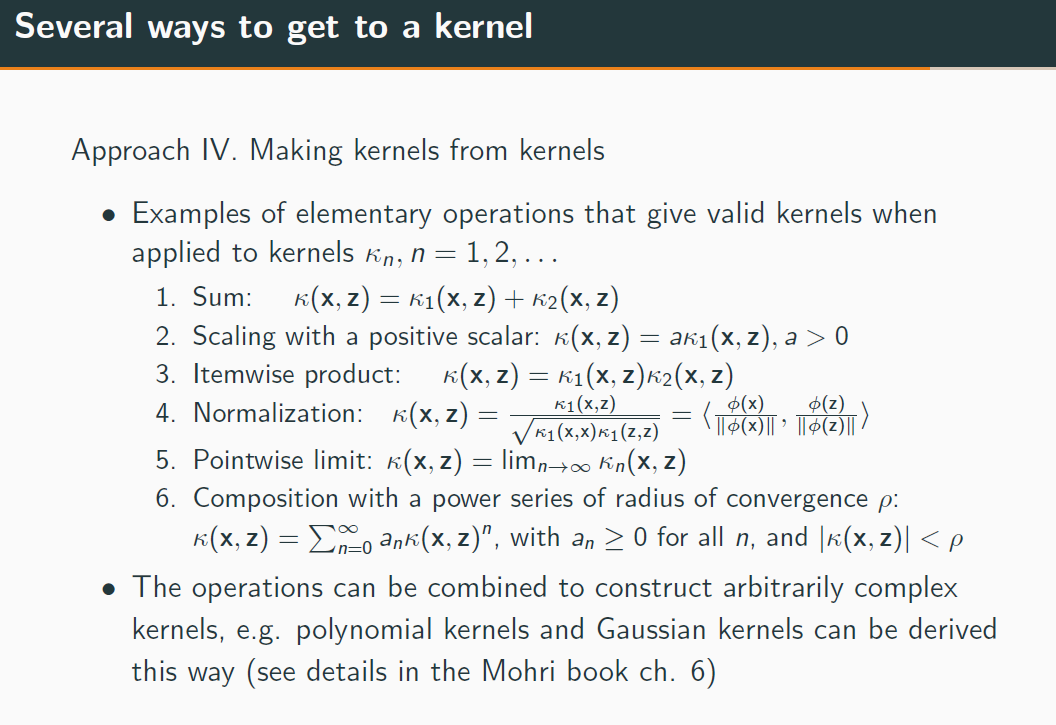

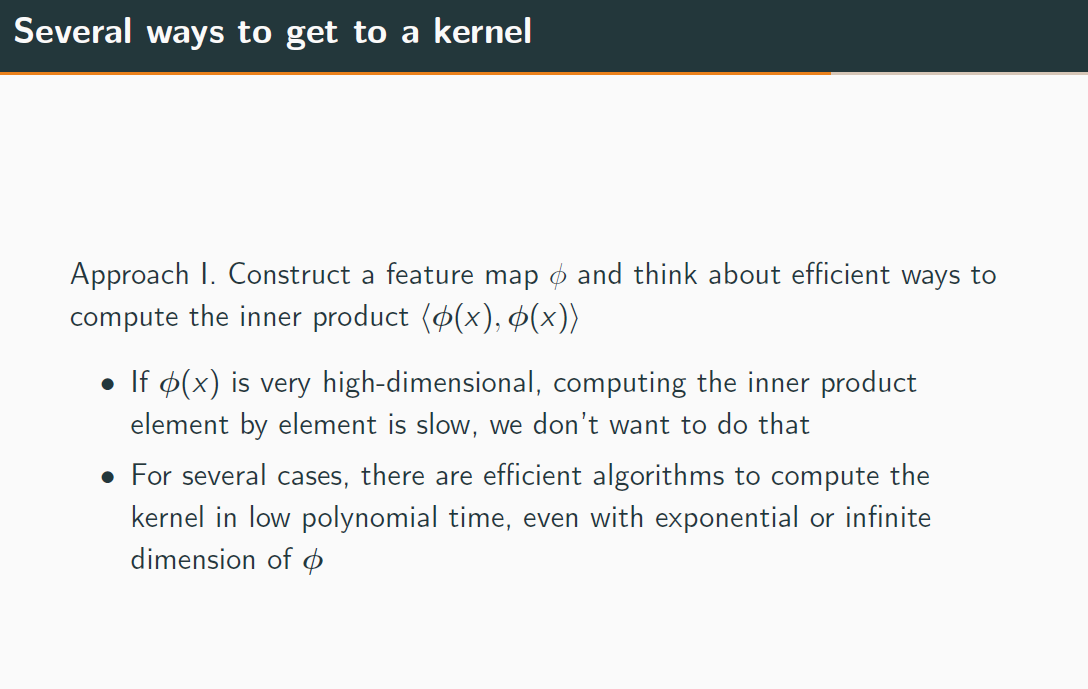

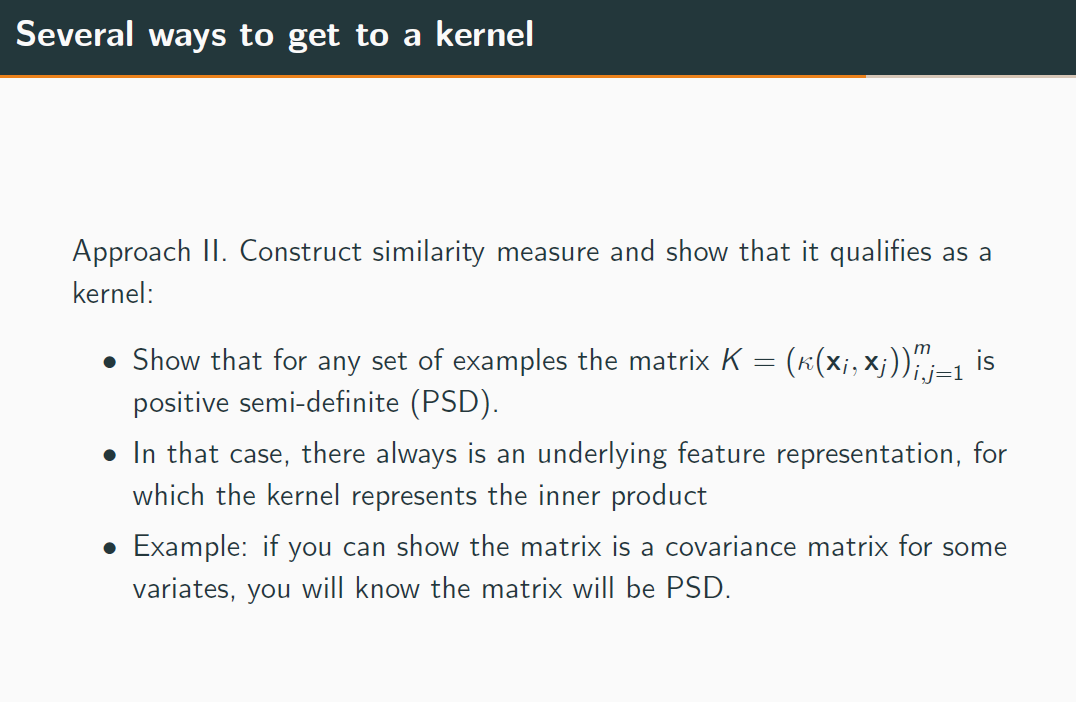

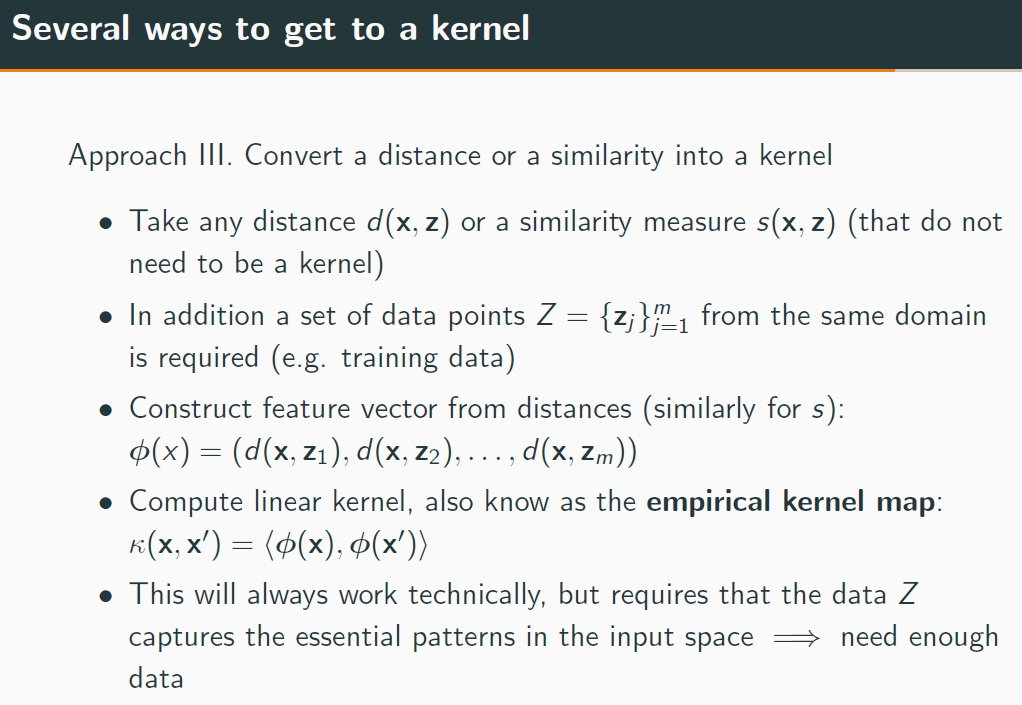

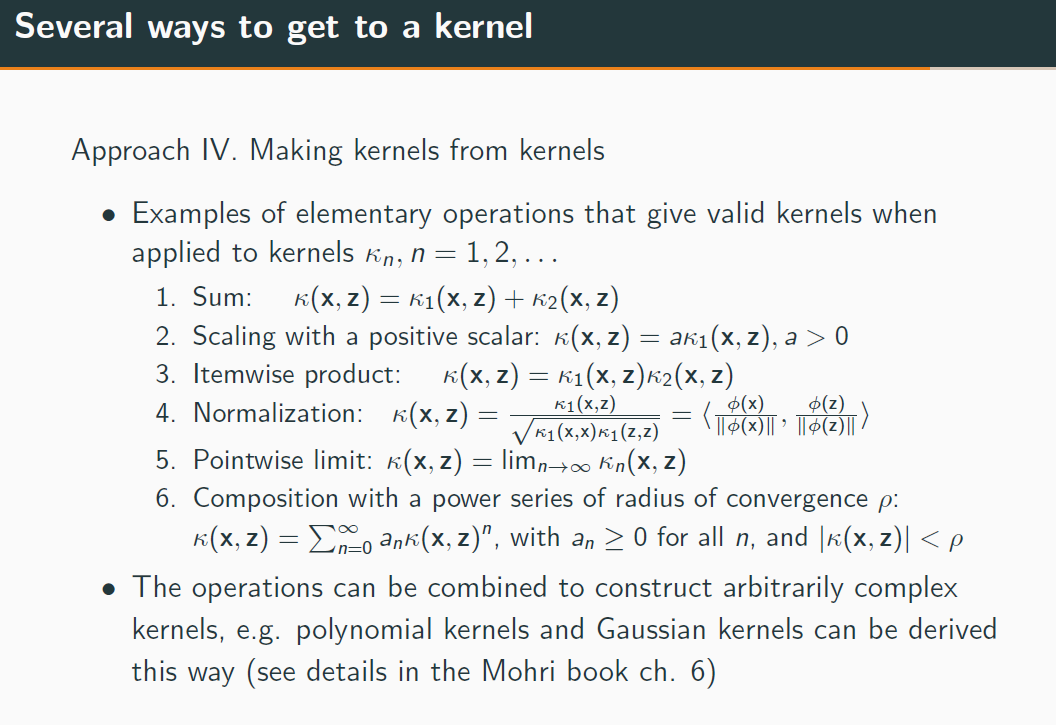

In Lecture 7, Kernel methods, it is discussed how to build new kernels from known ones, see Slide Several ways to get to a kernel. Assume that we have a kernel function (., . ) which satisfies the following condition k(X, Z) | 1 only.Several ways to get to a kernel Approach |. Construct a feature map gr\") and think about efficient ways to compute the inner product (@505), d)(x)) o If $01) is very high-dimensional, computing the inner product element by element is slow, we don't want to do that o For several cases, there are efficient algorithms to compute the kernel in low polynomial time, even with exponential or infinite dimension of (p Several ways to get to a kernel Approach ||. Construct similarity measure and show that it qualifies as a kernel: 9 Show that for any set of examples the matrix K : (r-{.(x;, whiz-:1 is positive semi-definite (PSD). o In that case, there always is an underlying feature representation, for which the kernel represents the inner product 0 Example: if you can show the matrix is a covariance matrix for some variates, you will know the matrix will be PSD. Several ways to get to a kernel Approach |l|. Convert a distance or a similarity into a kernel 0 Take any distance d(x: 2) or a similarity measure 5(x: 2) (that do not need to be a kernel) m o In addition a set of data points Z : {2;}- J21 from the same domain is required (eg. training data) 0 Construct feature vector from distances (similarly for s): @501) : (d(x_, :1), d(x, 22)._ . . . . db:g 2m)) 0 Compute linear kernel, also know as the empirical kernel map: iii-(X: X') : (MK). $05)) 0 This will always work technically, but requires that the data Z captures the essential patterns in the input space :> need enough data Several ways to get to a kernel Approach IV. Making kernels from kernels . Examples of elementary operations that give valid kernels when applied to kernels Kin, n = 1, 2, .. . 1. Sum: K(X, z) = K1(X, Z) + K2(X, z) 2. Scaling with a positive scalar: k(x, z) = aki(x, z), a > 0 3. Itemwise product: k(x, z) = K1(X, Z)K2(X, Z) 4. Normalization: K(X, z) = - K1 (X, Z) $(x ) p (z ) VK1 (x, x) K1 ( 2, Z) = 10 ( x ) IT ' 10( 2 ) 1 / 5. Pointwise limit: k(x, z) = limn-too kin(X, z) 6. Composition with a power series of radius of convergence p: K(x, z) = _no ank(x, z)", with an 2 0 for all n, and |k(x, z) |

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts