Question:

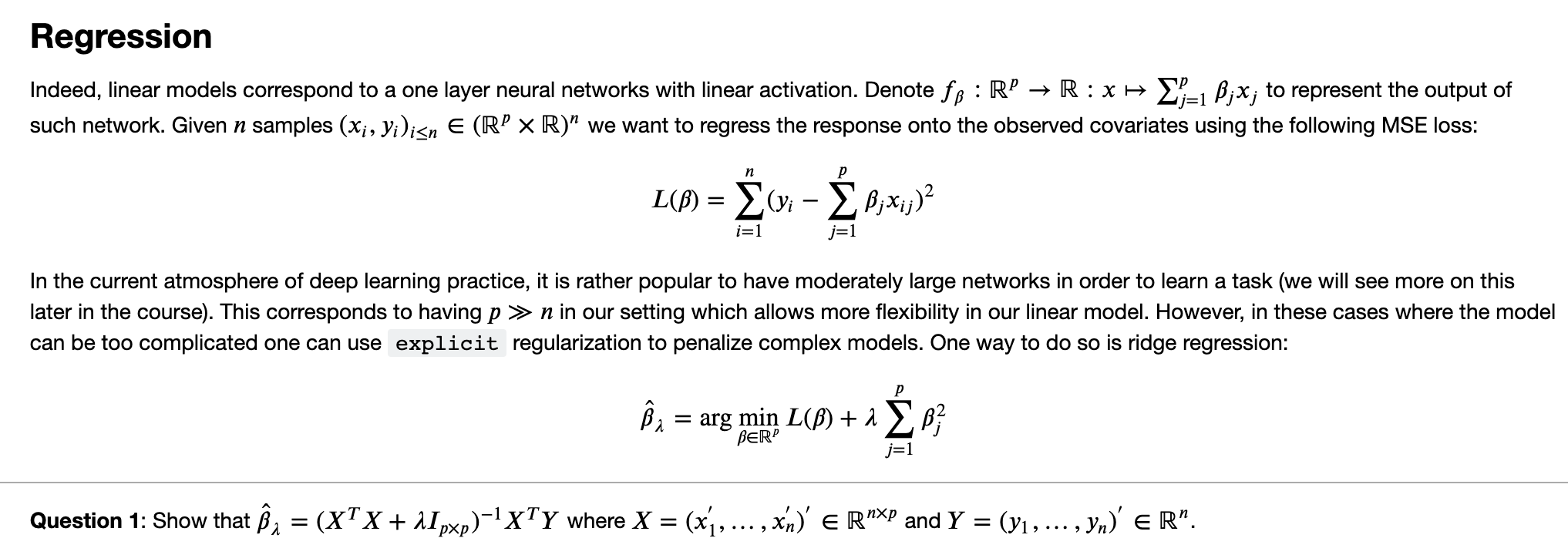

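Indeed, linear models correspond to a one-layer neural network with linear activation. Denote by $f : \mathbb{R}^p \to \mathbb{R},\ x \mapsto \sum_{j=1}^{p} \theta_j x_j$ the output of such a network. Given $n$ samples $(x_i, y_i)_{i=1}^{n} \in (\mathbb{R}^p \times \mathbb{R})^n$, we want to regress the response onto the observed covariates using the following MSE loss:

$$L(\theta) = \sum_{i=1}^{n} \Big( y_i - \sum_{j=1}^{p} \theta_j x_{ij} \Big)^2$$

In current deep learning practice, it is rather popular to use moderately large networks in order to learn a task (more on this later in the course). This corresponds to having $p \gg n$ in our setting, which allows more flexibility in our linear model. However, in these cases, where the model can be too complicated, one can use regularization to penalize complex models. One way to do so is ridge regression:

$$\hat{\theta} = \arg\min_{\theta \in \mathbb{R}^p} \; L(\theta) + \lambda \sum_{j=1}^{p} \theta_j^2$$

Question 1: Show that

$$\hat{\theta} = (X^T X + \lambda I_{p \times p})^{-1} X^T Y$$

where $X = (x_1, \dots, x_n)^T \in \mathbb{R}^{n \times p}$ (the $i$-th row of $X$ is $x_i^T$) and $Y = (y_1, \dots, y_n)^T \in \mathbb{R}^n$.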
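Before proving the identity, it can help to check it numerically. The sketch below is only an illustration, not part of the exercise: it assumes NumPy, uses random data with $p > n$, and the names `ridge_closed_form`, `ridge_objective`, and `ridge_gradient` are hypothetical helpers introduced here. It evaluates the closed form from Question 1 and confirms that the gradient of the ridge objective vanishes there.

```python
import numpy as np

def ridge_closed_form(X, Y, lam):
    """Evaluate (X^T X + lam * I)^{-1} X^T Y with a linear solve (no explicit inverse)."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ Y)

def ridge_objective(theta, X, Y, lam):
    """Squared-error loss plus the ridge penalty lam * ||theta||^2."""
    residual = Y - X @ theta
    return residual @ residual + lam * (theta @ theta)

def ridge_gradient(theta, X, Y, lam):
    """Gradient of the ridge objective with respect to theta."""
    return -2.0 * X.T @ (Y - X @ theta) + 2.0 * lam * theta

# Illustrative random data with more features than samples (p > n).
rng = np.random.default_rng(0)
n, p, lam = 20, 50, 0.5
X = rng.normal(size=(n, p))
Y = rng.normal(size=n)

theta_hat = ridge_closed_form(X, Y, lam)

# The gradient should be numerically zero at the closed-form solution.
grad_norm = np.linalg.norm(ridge_gradient(theta_hat, X, Y, lam))

# A perturbed coefficient vector should never achieve a lower objective.
theta_other = theta_hat + 0.01 * rng.normal(size=p)
obj_hat = ridge_objective(theta_hat, X, Y, lam)
obj_other = ridge_objective(theta_other, X, Y, lam)

print("gradient norm at theta_hat:", grad_norm)
print("objective at theta_hat <= objective nearby:", obj_hat <= obj_other)
```

Using `np.linalg.solve` rather than forming the inverse is a numerical convenience; it computes the same quantity as the formula in Question 1.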

Step by Step Solution
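
One possible derivation is sketched below. It assumes the coefficient vector is written $\theta$, the penalty weight is $\lambda > 0$, and $X$ has rows $x_i^T$, so that $\sum_{j} \theta_j x_{ij} = (X\theta)_i$.

Step 1. Write the objective in matrix form:

$$J(\theta) = L(\theta) + \lambda \sum_{j=1}^{p} \theta_j^2 = \|Y - X\theta\|_2^2 + \lambda\, \theta^T \theta = Y^T Y - 2\, \theta^T X^T Y + \theta^T X^T X\, \theta + \lambda\, \theta^T \theta$$

Step 2. Differentiate and set the gradient to zero:

$$\nabla J(\theta) = -2 X^T Y + 2 X^T X\, \theta + 2 \lambda\, \theta = 0 \quad \Longleftrightarrow \quad (X^T X + \lambda I_{p \times p})\, \theta = X^T Y$$

Step 3. Check that the system has a unique solution and that it is a minimizer. For any $v \neq 0$,

$$v^T (X^T X + \lambda I_{p \times p})\, v = \|Xv\|_2^2 + \lambda \|v\|_2^2 > 0,$$

so $X^T X + \lambda I_{p \times p}$ is positive definite and hence invertible, even when $p > n$ and $X^T X$ itself is singular. The Hessian $\nabla^2 J(\theta) = 2 (X^T X + \lambda I_{p \times p})$ is therefore positive definite, $J$ is strictly convex, and the stationary point is the unique global minimizer:

$$\hat{\theta} = (X^T X + \lambda I_{p \times p})^{-1} X^T Y$$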
