Question: Information theory. Please help, I am in an exam and need the answers within 15 minutes.

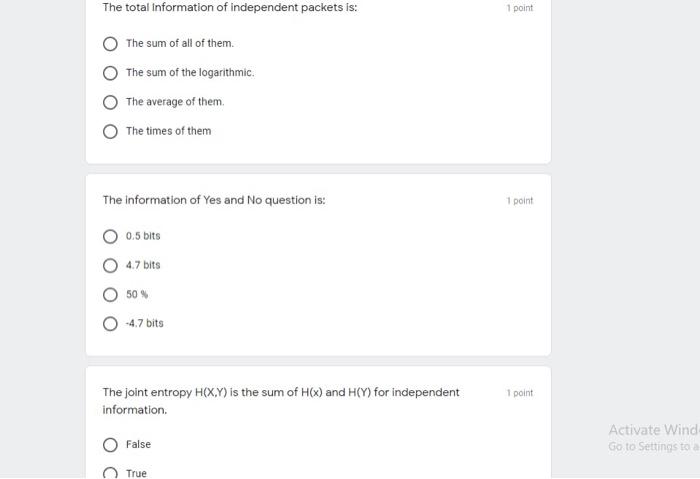

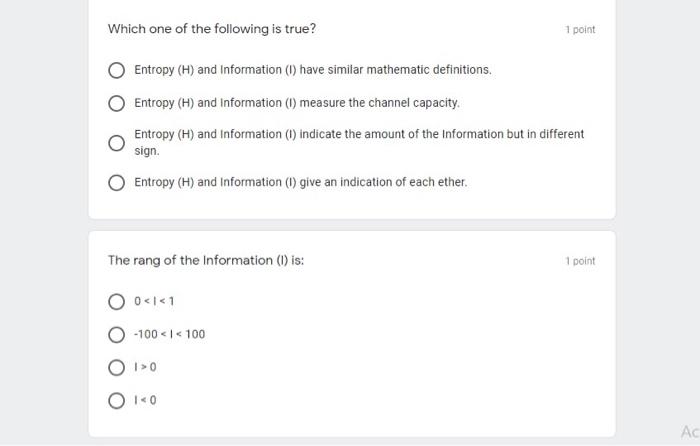

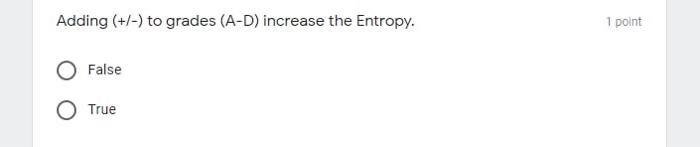

1. The total information of independent packets is:
   - The sum of all of them
   - The sum of the logarithms
   - The average of them
   - The product of them
2. The information of a yes/no question is:
   - 0.5 bits
   - 4.7 bits
   - 50%
   - -4.7 bits
3. The joint entropy H(XY) is the sum of H(X) and H(Y) for independent information.
   - False
   - True
4. Which one of the following is true?
   - Entropy (H) and information (I) have similar mathematical definitions.
   - Entropy (H) and information (I) measure the channel capacity.
   - Entropy (H) and information (I) indicate the amount of information, but with different signs.
   - Entropy (H) and information (I) give an indication of each other.
5. The range of the information (I) is:
   - -100 0 10
6. Adding +/- to the grades (A–D) increases the entropy.
   - False
   - True
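The ideas these questions rely on can be checked numerically. The following is a minimal Python sketch, assuming base-2 logarithms (so results are in bits). The helper names `self_information` and `entropy` are illustrative, and the grade distributions at the end are hypothetical, with every grade assumed equally likely.

```python
import math
from itertools import product


def self_information(p: float) -> float:
    """Self-information in bits: I(p) = -log2(p) = log2(1/p)."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    # log2(1/p) equals -log2(p) but avoids printing -0.0 when p == 1
    return math.log2(1 / p)


def entropy(probs) -> float:
    """Shannon entropy in bits: H = -sum(p * log2(p)); p = 0 terms contribute 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Independent events: P(A and B) = P(A) * P(B), so their information adds.
p_a, p_b = 0.5, 0.25
print(self_information(p_a * p_b))                    # 3.0
print(self_information(p_a) + self_information(p_b))  # 3.0

# An equally likely yes/no answer carries 1 bit; a certain event carries 0 bits.
print(self_information(0.5))  # 1.0
print(self_information(1.0))  # 0.0

# Joint entropy of independent X and Y: H(X, Y) = H(X) + H(Y).
px = [0.5, 0.5]
py = [0.25, 0.75]
pxy = [a * b for a, b in product(px, py)]
print(math.isclose(entropy(pxy), entropy(px) + entropy(py)))  # True

# Hypothetical grade distributions, all outcomes assumed equally likely:
# 4 grades (A-D) versus 12 grades (A+, A, A-, ..., D-).
print(entropy([1 / 4] * 4))              # 2.0
print(round(entropy([1 / 12] * 12), 3))  # 3.585
```

Entropy is the probability-weighted average of self-information, which is why the two quantities share the same logarithmic form. Because I(p) = log2(1/p) with p in (0, 1], self-information is never negative.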
