Question:

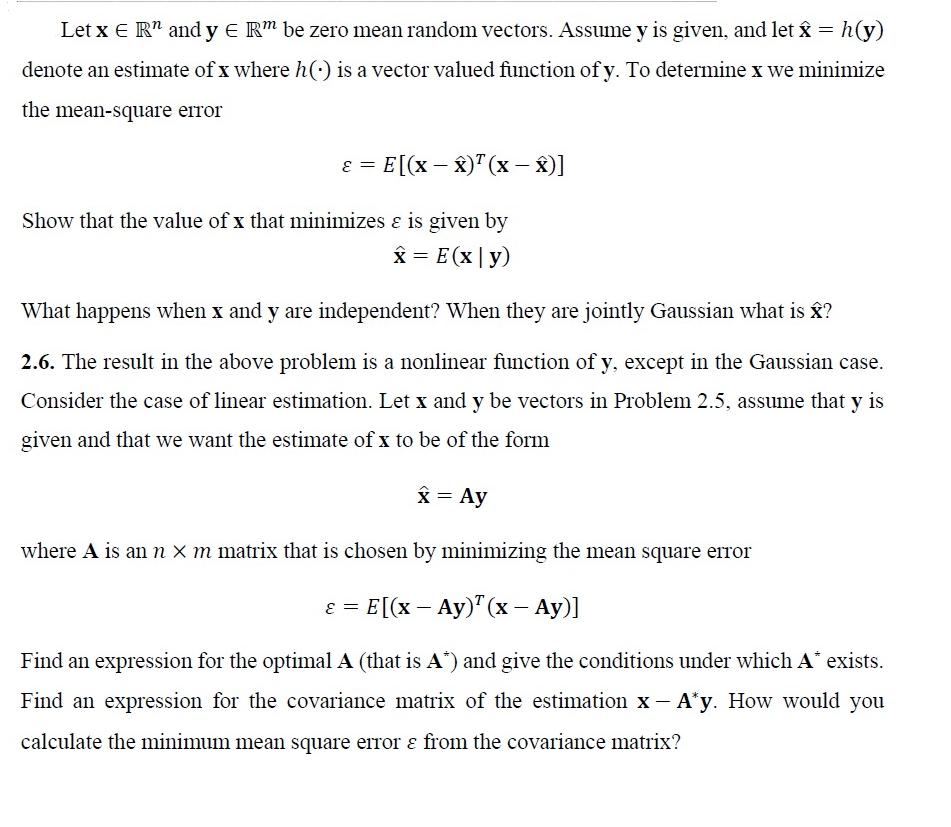

2.5. Let $x \in \mathbb{R}^n$ and $y \in \mathbb{R}^m$ be zero-mean random vectors. Assume $y$ is given, and let $\hat{x} = h(y)$ denote an estimate of $x$, where $h(\cdot)$ is a vector-valued function of $y$. To determine $\hat{x}$ we minimize the mean-square error $\varepsilon = E[(x - \hat{x})^T (x - \hat{x})]$. Show that the value of $\hat{x}$ that minimizes $\varepsilon$ is given by $\hat{x} = E(x \mid y)$. What happens when $x$ and $y$ are independent? When they are jointly Gaussian, what is $\hat{x}$?

2.6. The result in the above problem is a nonlinear function of $y$, except in the Gaussian case. Consider the case of linear estimation. Let $x$ and $y$ be the vectors in Problem 2.5, assume that $y$ is given, and suppose we want the estimate of $x$ to be of the form $\hat{x} = Ay$, where $A$ is an $n \times m$ matrix chosen by minimizing the mean-square error $E[(x - Ay)^T (x - Ay)] = \varepsilon$. Find an expression for the optimal $A$ (that is, $A^*$) and give the conditions under which $A^*$ exists. Find an expression for the covariance matrix of the estimation error $x - A^* y$. How would you calculate the minimum mean-square error $\varepsilon$ from the covariance matrix?

Step by Step Solution



SOLUTION To minimize the mean-square error we need to find the value of $\hat{x}$ that minimizes the expr...
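The posted answer is cut off at this point. One standard way to carry out both parts is sketched below; it is not necessarily the route the original solution takes. The symbols $m(y)$, $R_{xx} = E[xx^T]$, $R_{xy} = E[xy^T]$, $R_{yx} = R_{xy}^T$, $R_{yy} = E[yy^T]$ and $P$ are notation introduced here for illustration, and the Gaussian and linear results assume $R_{yy}$ is nonsingular.

```latex
% Problem 2.5: condition on y, then minimize pointwise.
\begin{align*}
\varepsilon &= E\big[(x - h(y))^T (x - h(y))\big]
             = E_y\Big[\, E\big[\|x - h(y)\|^2 \,\big|\, y\big] \Big], \\
\intertext{With $m(y) = E(x \mid y)$, the cross term vanishes because
           $E[x - m(y) \mid y] = 0$, so}
E\big[\|x - h(y)\|^2 \,\big|\, y\big]
            &= E\big[\|x - m(y)\|^2 \,\big|\, y\big] + \|m(y) - h(y)\|^2 .
\end{align*}
% The second term is nonnegative and is zero iff h(y) = m(y), hence
% \hat{x} = E(x | y).
% Independent x, y:  E(x | y) = E(x) = 0.
% Jointly Gaussian:  E(x | y) = R_{xy} R_{yy}^{-1} y  (linear in y).

% Problem 2.6: expand the error in terms of traces and set the gradient to zero.
\begin{align*}
\varepsilon(A) &= \operatorname{tr}(R_{xx}) - 2\operatorname{tr}(A R_{yx})
                  + \operatorname{tr}(A R_{yy} A^T), \\
\frac{\partial \varepsilon}{\partial A} &= -2 R_{xy} + 2 A R_{yy} = 0
  \;\Longrightarrow\; A^* R_{yy} = R_{xy}, \qquad
  A^* = R_{xy} R_{yy}^{-1} \ \text{if } R_{yy} \text{ is nonsingular}, \\
P &= E\big[(x - A^* y)(x - A^* y)^T\big] = R_{xx} - R_{xy} R_{yy}^{-1} R_{yx}, \\
\varepsilon_{\min} &= \operatorname{tr}(P).
\end{align*}
```

Under these assumptions the linear estimate $A^* y$ coincides with $E(x \mid y)$ in the jointly Gaussian case, which is consistent with the remark in Problem 2.6 that the optimal estimator is nonlinear except for Gaussian vectors.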

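As a numerical illustration of Problem 2.6, the following minimal sketch assumes the standard linear least-squares result $A^* = R_{xy} R_{yy}^{-1}$ with error covariance $P = R_{xx} - R_{xy} R_{yy}^{-1} R_{yx}$ and minimum error $\varepsilon = \operatorname{tr}(P)$. The matrices `R_xx`, `R_xy`, `R_yy` and the helper names are hypothetical, chosen only so the arithmetic is easy to follow, and the inverse is hard-coded for $m = 2$.

```python
# Minimal sketch of linear MMSE estimation from second-order statistics.
# All numbers below are made up for illustration (n = m = 2, zero-mean x and y).

def matmul(a, b):
    """Plain nested-list matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a):
    """Matrix transpose for nested lists."""
    return [list(row) for row in zip(*a)]

def inv2(m):
    """Inverse of a 2x2 matrix; raises if the matrix is (numerically) singular."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("R_yy is singular, so R_xy R_yy^{-1} is not defined")
    return [[d / det, -b / det], [-c / det, a / det]]

def linear_mmse(R_xx, R_xy, R_yy):
    """Return (A*, error covariance P, minimum mean-square error tr(P))."""
    A = matmul(R_xy, inv2(R_yy))          # A* = R_xy R_yy^{-1}
    AR = matmul(A, transpose(R_xy))       # A* R_yx, with R_yx = R_xy^T
    n = len(R_xx)
    P = [[R_xx[i][j] - AR[i][j] for j in range(n)] for i in range(n)]
    eps = sum(P[i][i] for i in range(n))  # trace of the error covariance
    return A, P, eps

# Hypothetical covariances: R_xx = E[x x^T], R_xy = E[x y^T], R_yy = E[y y^T].
R_xx = [[2.0, 0.5], [0.5, 1.0]]
R_xy = [[1.0, 0.0], [0.5, 0.5]]
R_yy = [[2.0, 0.0], [0.0, 1.0]]

A_star, P, eps_min = linear_mmse(R_xx, R_xy, R_yy)
print("A* =", A_star)
print("P  =", P)
print("minimum mean-square error =", eps_min)
```

For these made-up inputs the minimum mean-square error is 2.125, which is smaller than $\operatorname{tr}(R_{xx}) = 3$, the error obtained by ignoring $y$ and using the estimate $\hat{x} = 0$.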