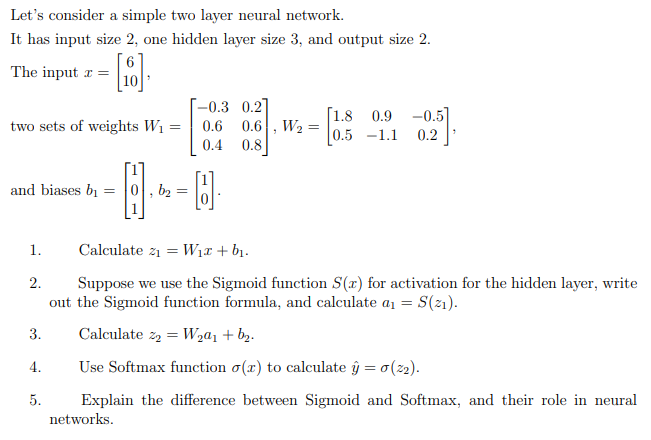

Let's consider a simple two-layer neural network. It has input size 2, one hidden layer of size 3, and output size 2. The input is $x$ = [], with two sets of weights $W_1$ = -0.3 0.21 [1.8 0.9 -0.5) 0.6 0.6, $W_2$ = 0.5 -1.1 0.2 0.4 0.8, and biases $b_1$ = 01, $b_2$ = 0 1.

1. Calculate $z_1 = W_1 x + b_1$.
2. Suppose we use the Sigmoid function $S(x)$ as the activation for the hidden layer. Write out the Sigmoid function formula and calculate $a_1 = S(z_1)$.
3. Calculate $z_2 = W_2 a_1 + b_2$.
4. Use the Softmax function $\sigma(x)$ to calculate $y = \sigma(z_2)$.
5. Explain the difference between Sigmoid and Softmax, and their role in neural networks.
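
The forward pass the question describes can be sketched in plain Python. The matrix and vector entries in the question did not survive legibly, so the numbers below are placeholder values chosen only to match the stated shapes (input 2, hidden 3, output 2); they are assumptions, not the question's data. The helper names `affine`, `sigmoid`, and `softmax` are likewise illustrative. The sketch uses the standard definitions $S(x) = 1 / (1 + e^{-x})$, applied element-wise, and $\sigma(x)_i = e^{x_i} / \sum_j e^{x_j}$.

```python
import math


def affine(W, x, b):
    """Compute W x + b, where W is a list of rows, and x and b are lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def sigmoid(v):
    """Element-wise logistic sigmoid: S(x) = 1 / (1 + exp(-x))."""
    return [1.0 / (1.0 + math.exp(-x)) for x in v]


def softmax(v):
    """Softmax: exp(x_i) / sum_j exp(x_j).

    Subtracting max(v) first does not change the result but avoids overflow.
    """
    m = max(v)
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


# Placeholder values (assumptions, NOT the values from the question).
x = [1.0, 2.0]                                   # input, size 2
W1 = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.6]]      # hidden weights, 3 x 2
b1 = [0.0, 0.0, 0.0]                             # hidden bias, size 3
W2 = [[0.2, -0.1, 0.3], [0.5, 0.4, -0.6]]        # output weights, 2 x 3
b2 = [0.0, 0.0]                                  # output bias, size 2

z1 = affine(W1, x, b1)    # step 1: z1 = W1 x + b1
a1 = sigmoid(z1)          # step 2: a1 = S(z1)
z2 = affine(W2, a1, b2)   # step 3: z2 = W2 a1 + b2
y = softmax(z2)           # step 4: y = softmax(z2)

print("z1 =", z1)
print("a1 =", a1)
print("z2 =", z2)
print("y  =", y)
```

Sigmoid maps each component independently into the interval (0, 1), so the entries of `a1` need not sum to anything in particular. Softmax normalizes the whole vector, so the entries of `y` are positive and sum to 1, which is why it is commonly used on the output layer to produce class probabilities.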
