
MATH HW

*question 3 is cut out and it says steps 6 to 7

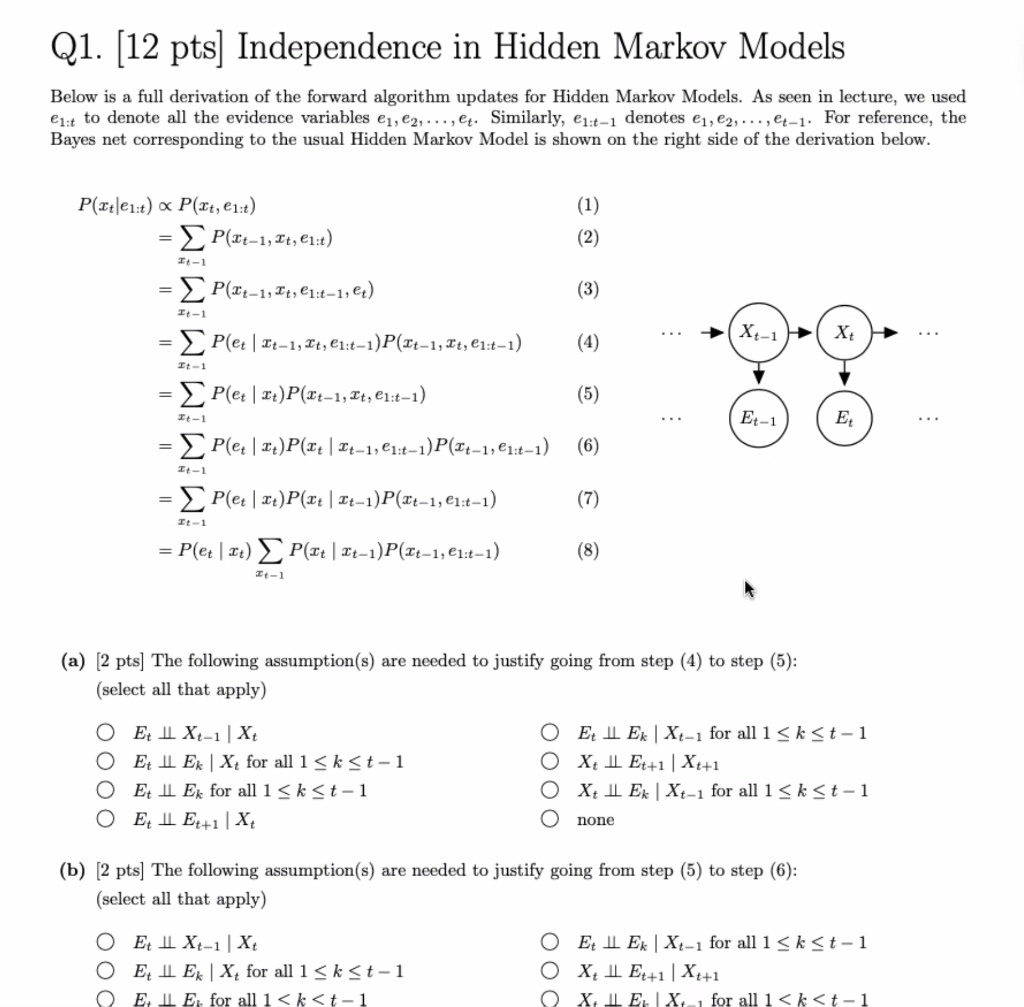

Q1. [12 pts] Independence in Hidden Markov Models

Below is a full derivation of the forward algorithm updates for Hidden Markov Models. As seen in lecture, we used $e_{1:t}$ to denote all the evidence variables $e_1, e_2, \ldots, e_t$. Similarly, $e_{1:t-1}$ denotes $e_1, e_2, \ldots, e_{t-1}$. For reference, the Bayes net corresponding to the usual Hidden Markov Model is shown on the right side of the derivation below.

$$
\begin{aligned}
P(x_t \mid e_{1:t}) &\propto P(x_t, e_{1:t}) && (1) \\
&= \sum_{x_{t-1}} P(x_{t-1}, x_t, e_{1:t}) && (2) \\
&= \sum_{x_{t-1}} P(x_{t-1}, x_t, e_{1:t-1}, e_t) && (3) \\
&= \sum_{x_{t-1}} P(e_t \mid x_{t-1}, x_t, e_{1:t-1}) \, P(x_{t-1}, x_t, e_{1:t-1}) && (4) \\
&= \sum_{x_{t-1}} P(e_t \mid x_t) \, P(x_{t-1}, x_t, e_{1:t-1}) && (5) \\
&= \sum_{x_{t-1}} P(e_t \mid x_t) \, P(x_t \mid x_{t-1}, e_{1:t-1}) \, P(x_{t-1}, e_{1:t-1}) && (6) \\
&= \sum_{x_{t-1}} P(e_t \mid x_t) \, P(x_t \mid x_{t-1}) \, P(x_{t-1}, e_{1:t-1}) && (7) \\
&= P(e_t \mid x_t) \sum_{x_{t-1}} P(x_t \mid x_{t-1}) \, P(x_{t-1}, e_{1:t-1}) && (8)
\end{aligned}
$$

(a) [2 pts] The following assumption(s) are needed to justify going from step (4) to step (5): (select all that apply)

- $E_t \perp X_{t-1} \mid X_t$
- $E_t \perp E_k \mid X_t$ for all 1 ...
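To make the derivation more concrete, here is a minimal Python sketch of one forward update as written in step (8): weight the previous joint $P(x_{t-1}, e_{1:t-1})$ by the transition model, sum over $x_{t-1}$, then multiply by the emission probability of the new evidence. The two-state model, its numbers, and the names `forward_step` and `normalize` are hypothetical and chosen only for illustration; they do not come from the homework.

```python
def forward_step(prior_joint, transition, emission, evidence):
    """One forward update following step (8) of the derivation.

    prior_joint[i]   = P(X_{t-1} = i, e_{1:t-1})
    transition[i][j] = P(X_t = j | X_{t-1} = i)
    emission[j][e]   = P(E_t = e | X_t = j)
    Returns a list whose j-th entry is P(X_t = j, e_{1:t}).
    """
    n = len(prior_joint)
    joint = []
    for j in range(n):
        # Sum over x_{t-1} of P(x_t | x_{t-1}) * P(x_{t-1}, e_{1:t-1})
        predicted = sum(transition[i][j] * prior_joint[i] for i in range(n))
        # Multiply by P(e_t | x_t), which was pulled out of the sum in step (8)
        joint.append(emission[j][evidence] * predicted)
    return joint


def normalize(values):
    """Turn the joint P(x_t, e_{1:t}) into the posterior P(x_t | e_{1:t})."""
    total = sum(values)
    return [v / total for v in values]


# Hypothetical two-state, two-observation model (illustrative numbers only).
transition = [[0.7, 0.3],
              [0.3, 0.7]]
emission = [[0.9, 0.1],
            [0.2, 0.8]]
prior_joint = [0.5, 0.5]  # assumed starting distribution, no evidence yet

joint = forward_step(prior_joint, transition, emission, evidence=0)
posterior = normalize(joint)
print(joint, posterior)
```

The normalization at the end corresponds to the proportionality in step (1): the forward update itself only tracks the unnormalized joint.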
