
Part 2: A simple Spark application

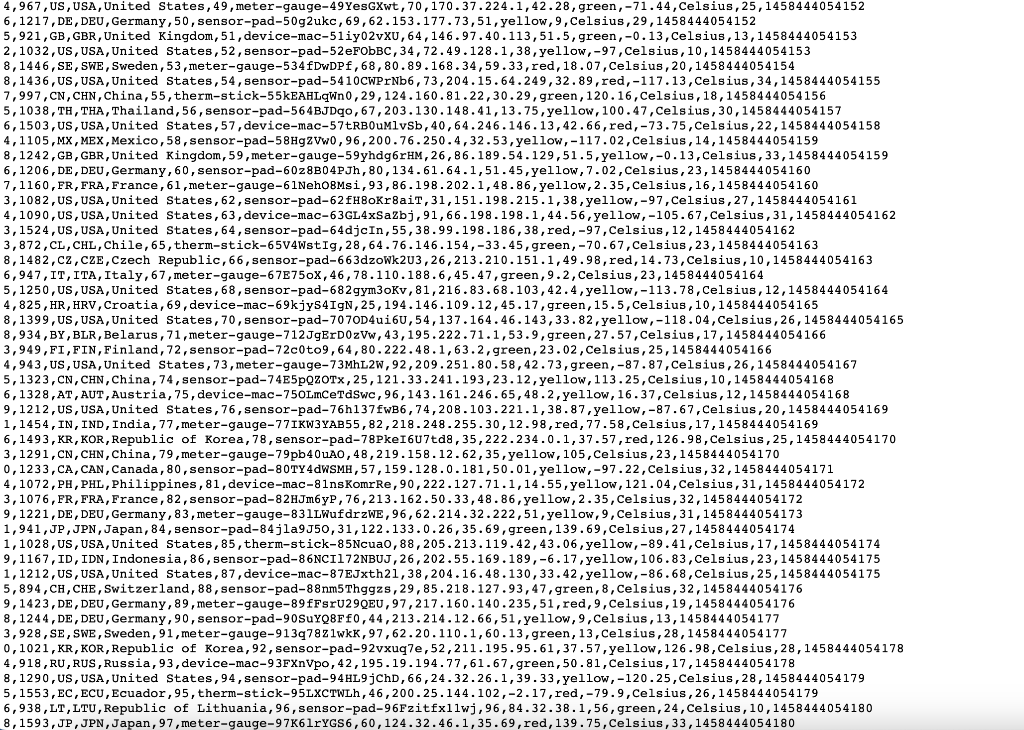

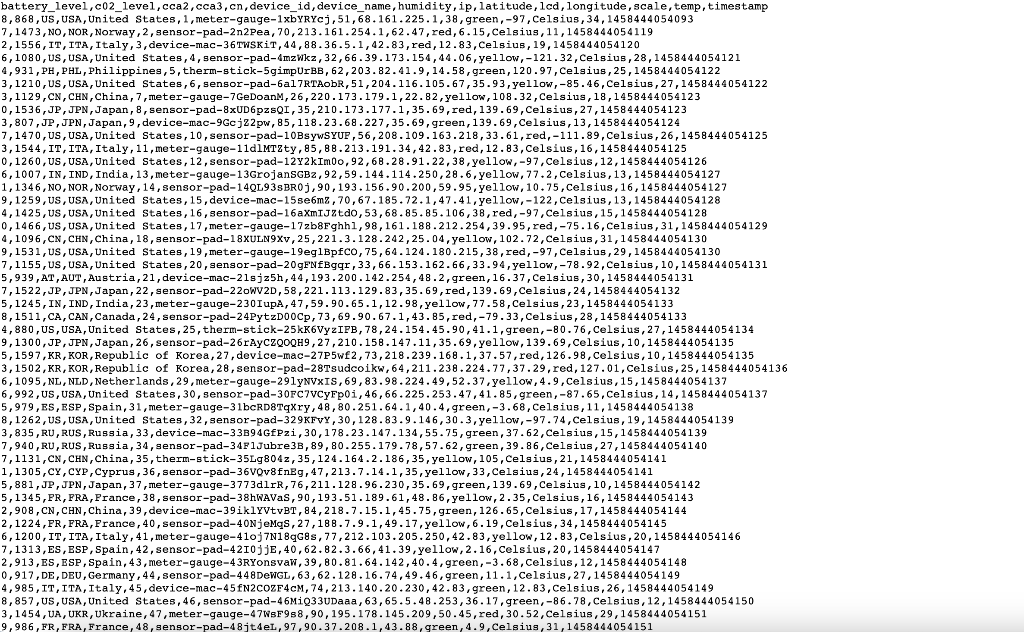

In this part, you will implement a simple Spark application. We have provided some sample data collected by IoT devices, shown in the screenshots. You need to sort the data first by country code in alphabetical order (the third column of the provided data), then by timestamp (the last column). Here is an example:

Input:

| cca2 | device_id | timestamp |
|---|---|---|
| US | 1 | 1 |
| IN | 2 | 2 |
| US | 3 | 2 |
| CN | 4 | 4 |
| US | 5 | 3 |
| IN | 6 | 1 |

Output:

| cca2 | device_id | timestamp |
|---|---|---|
| CN | 4 | 4 |
| IN | 6 | 1 |
| IN | 2 | 2 |
| US | 1 | 1 |
| US | 3 | 2 |
| US | 5 | 3 |
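The ordering rule is a sort on the compound key (country code, timestamp). Here is a minimal plain-Python sketch with no Spark involved. It reproduces the example above using the three-column layout of the example tables, not the layout of the real data files:

```python
# Rows from the example tables: (cca2, device_id, timestamp)
rows = [
    ("US", 1, 1),
    ("IN", 2, 2),
    ("US", 3, 2),
    ("CN", 4, 4),
    ("US", 5, 3),
    ("IN", 6, 1),
]


def sort_rows(rows):
    # Primary key: country code (alphabetical); secondary key: timestamp (ascending).
    return sorted(rows, key=lambda r: (r[0], r[2]))


# Prints the rows in the same order as the Output table.
for row in sort_rows(rows):
    print(row)
```

A Spark solution expresses the same two-level key with `orderBy`, but distributes the work across partitions.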

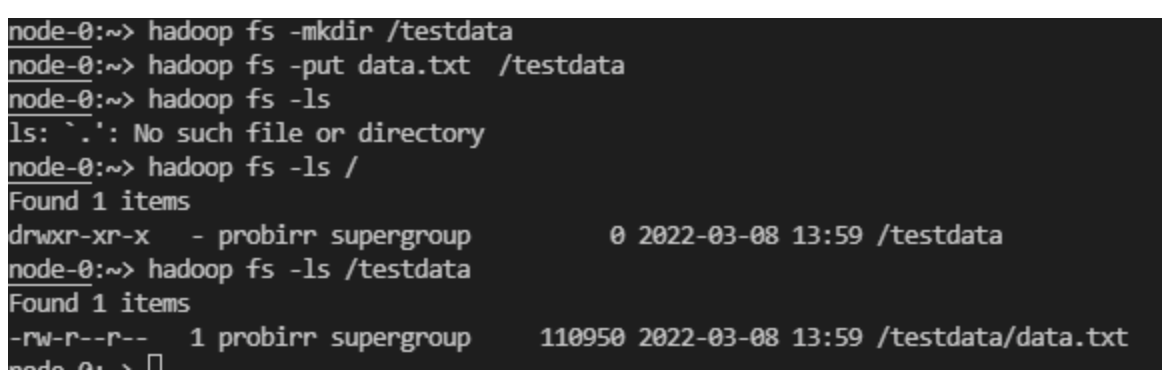

You should first load the data into HDFS.
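Here is one way to script the loading step from Python. This is a sketch that assumes the `hdfs` command-line client is installed and on the `PATH`. The local file name `iot_data.csv` and the HDFS directory `/user/student/iot` are hypothetical placeholders:

```python
import subprocess


def hdfs_put_commands(local_path, hdfs_dir):
    """Build the `hdfs dfs` commands that create hdfs_dir (if needed)
    and copy local_path into it, overwriting any existing copy (-f)."""
    return [
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_dir],
        ["hdfs", "dfs", "-put", "-f", local_path, hdfs_dir],
    ]


def load_into_hdfs(local_path, hdfs_dir):
    """Run each command in order; check=True raises CalledProcessError on failure."""
    for cmd in hdfs_put_commands(local_path, hdfs_dir):
        subprocess.run(cmd, check=True)


# Hypothetical paths; replace with your own file and HDFS directory.
# load_into_hdfs("iot_data.csv", "/user/student/iot")
```

Running `hdfs dfs -mkdir -p` and `hdfs dfs -put -f` directly in a shell does the same thing.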

Then, write a Spark program in Java, Python, or Scala to sort the data. Finally, write the results to HDFS in CSV format. Your program takes two arguments: the first is the path to the input file and the second is the path to the output file. If two tuples have the same country code and timestamp, their relative order does not matter.
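Below is a minimal PySpark sketch of such a program. It is one possible solution, not a reference implementation, and it rests on a few assumptions:

- The input CSV has a header row.
- The columns are named `cca2` and `timestamp`, as in the example. If they are not, the helper `sort_columns` (a hypothetical name) falls back to the third and last columns.
- The script name `sort_iot.py` is hypothetical.

```python
import sys


def sort_columns(columns, country_col="cca2", ts_col="timestamp"):
    """Pick the (country code, timestamp) column names to sort by.

    Uses the header names from the example when present; otherwise falls
    back to the third and last columns, as described in the task.
    """
    if country_col in columns and ts_col in columns:
        return country_col, ts_col
    return columns[2], columns[-1]


def main(input_path, output_path):
    # Imported here so sort_columns() can be used without a Spark install.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("SortIoTData").getOrCreate()
    # Assumption: the CSV has a header row. inferSchema=True lets a numeric
    # timestamp sort numerically instead of as text ("10" < "2" as strings).
    df = spark.read.csv(input_path, header=True, inferSchema=True)

    country, ts = sort_columns(df.columns)
    # orderBy is a global sort: country code first, then timestamp.
    # Rows tied on both keys may come out in any order, which the task allows.
    sorted_df = df.orderBy(df[country].asc(), df[ts].asc())

    # Spark writes a directory of part-*.csv files; taken in part-number
    # order, they form the globally sorted result.
    sorted_df.write.csv(output_path, header=True, mode="overwrite")
    spark.stop()


if __name__ == "__main__":
    if len(sys.argv) == 3:
        main(sys.argv[1], sys.argv[2])
    else:
        print("usage: spark-submit sort_iot.py <input_path> <output_path>", file=sys.stderr)
```

The script would be submitted with something like `spark-submit sort_iot.py <input_path> <output_path>`, passing HDFS paths as the two arguments. For a small dataset that should end up in a single CSV file, one alternative to `orderBy` is `df.coalesce(1).sortWithinPartitions(...)`. This collapses the data to one partition and then sorts it, which trades parallelism for a single output file.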
