Question: Gradient Descent (please code in Python). Consider a bivariate function $f : \mathbb{R}^2 \to \mathbb{R}$ and provide an implementation of gradient descent that minimizes it.

PLEASE CODE IN PYTHON

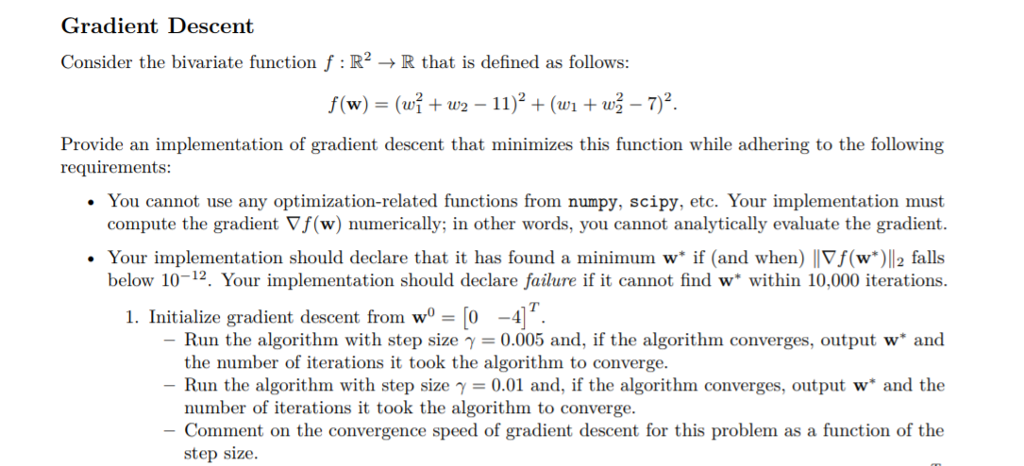

Gradient Descent

Consider the bivariate function $f : \mathbb{R}^2 \to \mathbb{R}$ that is defined as follows (the definition is not reproduced in the text; see image 1 above).

Provide an implementation of gradient descent that minimizes this function while adhering to the following requirements:

- You cannot use any optimization-related functions from numpy, scipy, etc.
- Your implementation must compute the gradient $\nabla f(w)$ numerically; in other words, you cannot analytically evaluate the gradient.
- Your implementation should declare that it has found a minimum $w^*$ if (and when) $\|\nabla f(w^*)\|_2$ falls below $10^{-12}$.
- Your implementation should declare failure if it cannot find $w^*$ within 10,000 iterations.

Initialize gradient descent from $w_0 = [0, -4]^T$.

- Run the algorithm with step size 0.005 and, if the algorithm converges, output $w^*$ and the number of iterations it took the algorithm to converge.
- Run the algorithm with step size 0.01 and, if the algorithm converges, output $w^*$ and the number of iterations it took the algorithm to converge.
- Comment on the convergence speed of gradient descent for this problem as a function of the step size.
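The requirements above can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions, not a definitive solution: the actual definition of f exists only in the image, so `placeholder_f` below is a hypothetical stand-in (a simple convex quadratic) that should be replaced with the function from the image. The names `numerical_gradient` and `gradient_descent`, the central-difference scheme, and the finite-difference width `h = 1e-6` are likewise illustrative choices rather than anything the problem prescribes.

```python
import math


def placeholder_f(w):
    # ASSUMPTION: stand-in objective only. The real f is defined in the
    # image and is not reproduced in the text; substitute it here.
    return (w[0] - 1.0) ** 2 + 2.0 * (w[1] + 2.0) ** 2


def numerical_gradient(f, w, h=1e-6):
    """Estimate the gradient of f at w with central differences.

    h is an assumed finite-difference width, not given in the problem.
    """
    grad = []
    for i in range(len(w)):
        forward = list(w)
        backward = list(w)
        forward[i] += h
        backward[i] -= h
        grad.append((f(forward) - f(backward)) / (2.0 * h))
    return grad


def gradient_descent(f, w0, step_size, tol=1e-12, max_iters=10_000):
    """Run gradient descent from w0.

    Returns (w, iterations, converged). Convergence is declared when the
    Euclidean norm of the numerical gradient falls below tol; failure is
    declared if that has not happened after max_iters updates.
    """
    w = list(w0)
    for k in range(max_iters + 1):
        grad = numerical_gradient(f, w)
        grad_norm = math.sqrt(sum(g * g for g in grad))
        if grad_norm < tol:
            return w, k, True
        if k < max_iters:
            # Standard update: move against the gradient direction.
            w = [wi - step_size * gi for wi, gi in zip(w, grad)]
    return w, max_iters, False


for step in (0.005, 0.01):
    w_star, iters, converged = gradient_descent(placeholder_f, [0.0, -4.0], step)
    if converged:
        print(f"step size {step}: w* = {w_star}, iterations = {iters}")
    else:
        print(f"step size {step}: failed to converge within 10,000 iterations")
```

Running both step sizes and comparing the reported iteration counts gives the basis for the requested comment on convergence speed. Whether the $10^{-12}$ threshold is reachable with a numerical gradient depends on the actual function and on the finite-difference width, so `h` may need adjusting once the real f is substituted.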
