Question: Problem 1: An MDP Episode (25 points)

In this part of the assignment, we are going to play an episode in an MDP by following a given policy. Consider the first test case of Problem 1, available in the file testcasespprob

The first part of this file specifies an MDP. The grid contains a start state with four available actions (the compass directions, such as N), ordinary states with the same four available actions, and two exit states where the only available action is exit, each with its own reward. Every action taken in any other state also receives a fixed reward. # is a wall.

Actions are not deterministic in this environment. With the given noise, we act as intended with some probability, and the rest of the time we act perpendicular to the intended direction with equal probability, i.e. half of the remaining probability for each unintended direction. If the agent attempts to move into a wall, the agent stays in the same position. Note that this MDP is identical to the example that we covered extensively in class.
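The noise model and the wall rule described above could be sketched roughly as follows. This is only an illustration under stated assumptions: the names `sample_action`, `move`, `PERPENDICULAR` and `OFFSETS` are hypothetical, the grid is assumed to be a list of rows with `#` marking walls, `noise` is assumed to be the total slip probability, and moving off the grid is assumed to behave like hitting a wall.

```python
import random

# Perpendicular directions for each intended compass action.
PERPENDICULAR = {
    "N": ("W", "E"),
    "S": ("E", "W"),
    "E": ("N", "S"),
    "W": ("S", "N"),
}

# Row/column offsets, with row 0 at the top of the grid (an assumption).
OFFSETS = {"N": (-1, 0), "S": (1, 0), "E": (0, 1), "W": (0, -1)}


def sample_action(intended, noise, rng=random):
    """Return the action actually taken, given the intended one.

    `noise` is treated as the total probability of slipping and is
    split evenly between the two perpendicular directions.
    """
    left, right = PERPENDICULAR[intended]
    return rng.choices(
        [intended, left, right],
        weights=[1.0 - noise, noise / 2, noise / 2],
    )[0]


def move(grid, position, action):
    """Apply `action`; stay in place if the target is a wall or off-grid."""
    row, col = position
    d_row, d_col = OFFSETS[action]
    new_row, new_col = row + d_row, col + d_col
    inside = 0 <= new_row < len(grid) and 0 <= new_col < len(grid[0])
    if not inside or grid[new_row][new_col] == "#":
        return position
    return (new_row, new_col)
```

Passing a seeded `random.Random` instance as `rng` makes an episode reproducible, which may help when comparing against a fixed expected output.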

The second part of this file specifies the policy to be executed.

As usual, your first task is to implement the parsing of this grid MDP in the function readgridmdpproblempfilepath of the file parse.py. You may use any appropriate data structure.

Next, you should implement running the episode in the function playepisodeproblem in the file py

Below is the expected output. Note that we always use a fixed number of characters for the output of a single grid and that the last line does not end with a newline.

Taking action: W intended:

Reward received:

New state:

Cumulative reward sum:

Taking action: intended:

Reward received:

New state:

Cumulative reward sum:

Taking action: intended:

Reward received:

New state:

Cumulative reward sum:

Taking action: intended: E

Reward received:

New state:

#

Cumulative reward sum:

Taking action: intended:

Reward received:

New state:

Cumulative reward sum:

Taking action: E intended: E

Reward received:

New state:

Cumulative reward sum:

Taking action: E intended: E

Reward received:

New state:

Cumulative reward sum:

Taking action: E intended: E

Reward received:

New state:

Cumulative reward sum:

Taking action: exit intended: exit

Reward received:

New state:

Cumulative reward sum:
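The fixed-width grid output and the missing trailing newline could be handled along these lines. This is a sketch, not the grader's required format: `render_grid`, the `cell_width` parameter, the right-justified padding and the agent marker `P` are all assumptions made for illustration, since the exact width and marker are not recoverable from the text here.

```python
def render_grid(grid, agent_position, cell_width, agent_marker="P"):
    """Render the grid as text, padding every cell to `cell_width` characters.

    The agent's cell shows `agent_marker` instead of the underlying symbol.
    Lines are joined with newlines, so the result has no trailing newline.
    """
    lines = []
    for r, row in enumerate(grid):
        cells = []
        for c, symbol in enumerate(row):
            text = agent_marker if (r, c) == agent_position else str(symbol)
            cells.append(text.rjust(cell_width))
        lines.append("".join(cells))
    return "\n".join(lines)
```

Building the whole episode log as a list of strings and joining it once with `"\n".join(...)` is one way to guarantee that the final line carries no newline.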

As you can see, in this question we don't use any discount factor. We will introduce that in the next question. You can also try some of the other test cases such as testcasespprob. With a correct implementation, you should be able to pass all test cases.
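Without a discount factor, the cumulative reward sum after each step is just a running total of the rewards received so far. A minimal sketch, where `cumulative_rewards` is a hypothetical helper and the rewards in the usage note are made-up values rather than the ones from the test case:

```python
from itertools import accumulate


def cumulative_rewards(rewards):
    """Return the undiscounted running total after each step of an episode."""
    return list(accumulate(rewards))
```

For example, `cumulative_rewards([1, -2, 3])` gives the running totals `[1, -1, 2]`. With floating-point rewards it may be worth rounding each total before printing, so that accumulated rounding error does not leak into the output.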

py

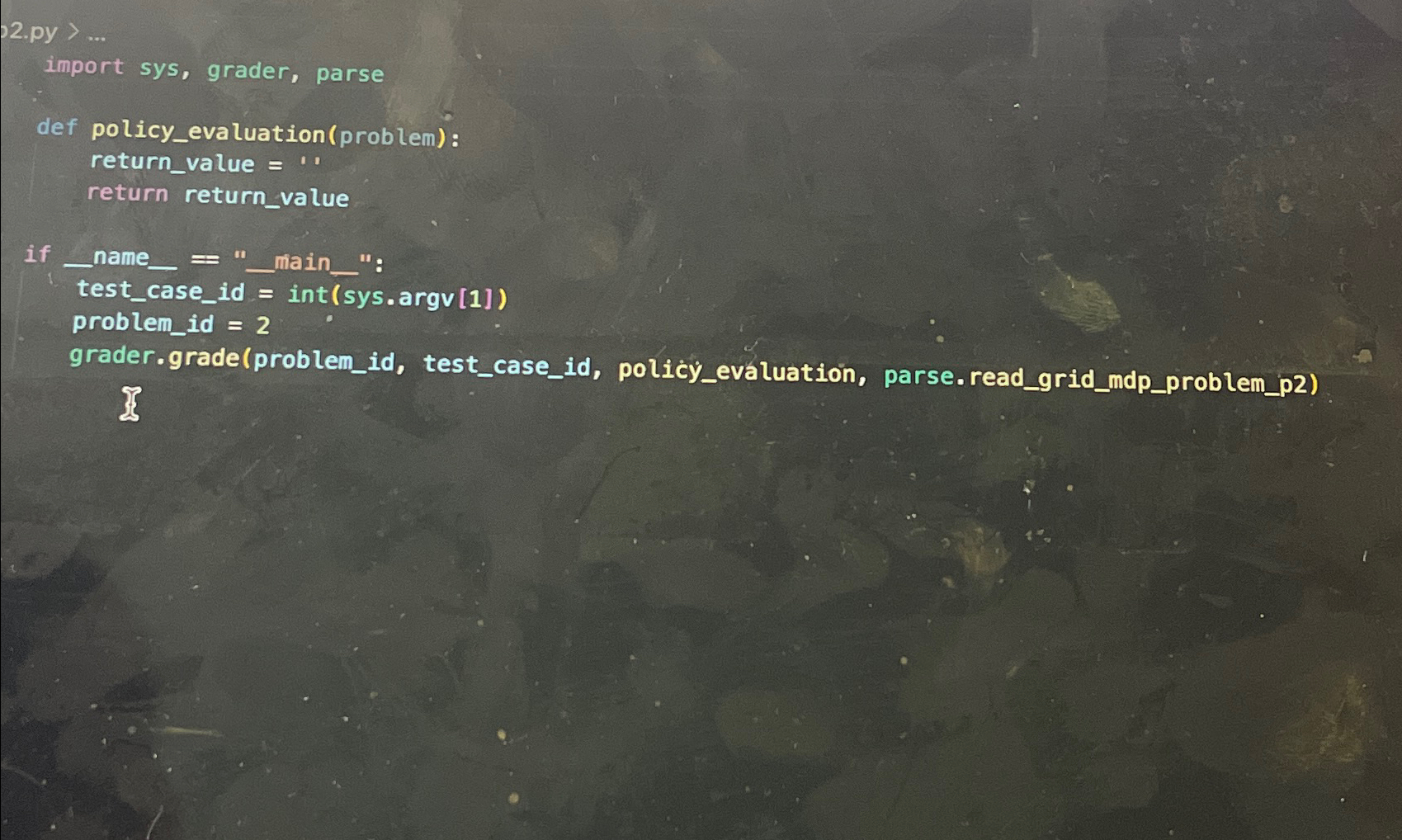

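import sys, grader, parse

def policyevaluation(problem):
    # Skeleton to be completed; the initial value is missing here, '' is assumed.
    returnvalue = ''
    return returnvalue

if __name__ == "__main__":
    # Index 1 (the first command-line argument) is assumed.
    testcaseid = int(sys.argv[1])
    # The problem number is missing here; None is a placeholder.
    problemid = None
    grader.grade(problemid, testcaseid, policyevaluation, parse.readgridmdpproblemp)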
