Question: Problem 2: Maximum Margin Classifiers (20 points)

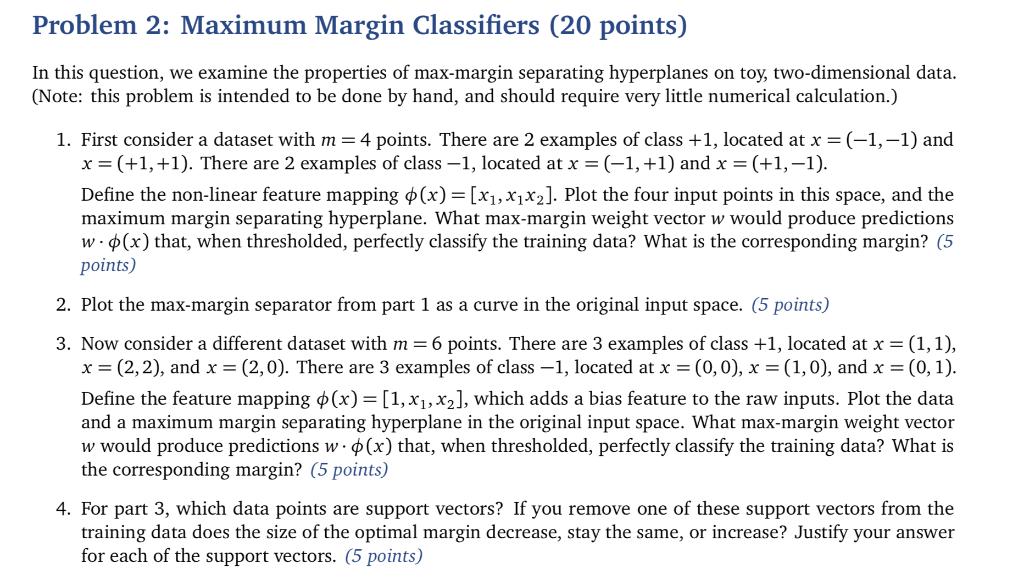

In this question, we examine the properties of max-margin separating hyperplanes on toy, two-dimensional data. (Note: this problem is intended to be done by hand, and should require very little numerical calculation.)

1. First consider a dataset with m = 4 points. There are 2 examples of class +1, located at x = (-1, -1) and x = (+1, +1). There are 2 examples of class -1, located at x = (-1, +1) and x = (+1, -1). Define the non-linear feature mapping φ(x) = [x1, x1·x2]. Plot the four input points in this space, and the maximum margin separating hyperplane. What max-margin weight vector w would produce predictions w · φ(x) that, when thresholded, perfectly classify the training data? What is the corresponding margin? (5 points)
2. Plot the max-margin separator from part 1 as a curve in the original input space. (5 points)
3. Now consider a different dataset with m = 6 points. There are 3 examples of class +1, located at x = (1, 1), x = (2, 2), and x = (2, 0). There are 3 examples of class -1, located at x = (0, 0), x = (1, 0), and x = (0, ). Define the feature mapping φ(x) = [1, x1, x2], which adds a bias feature to the raw inputs. Plot the data and a maximum margin separating hyperplane in the original input space. What max-margin weight vector w would produce predictions w · φ(x) that, when thresholded, perfectly classify the training data? What is the corresponding margin? (5 points)
4. For part 3, which data points are support vectors? If you remove one of these support vectors from the training data, does the size of the optimal margin decrease, stay the same, or increase? Justify your answer for each of the support vectors. (5 points)

Step by Step Solution
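Parts 1 and 2 can be sanity-checked numerically. The Python sketch below assumes the garbled mapping in the statement is φ(x) = [x1, x1·x2] and tests the candidate w = [0, 1]; the helper names (`phi`, `geometric_margin`, `decision`) are illustrative, not taken from any course code. Because this φ has no constant feature, the separator passes through the origin of feature space, so a brute-force scan over unit directions w = (cos t, sin t) is enough to confirm, to within a 0.1-degree grid, that no other direction gives a larger margin.

```python
import math

# Part 1 data: XOR-style labels in the raw 2-D input space.
X = [(-1, -1), (1, 1), (-1, 1), (1, -1)]
y = [+1, +1, -1, -1]

def phi(x):
    """Feature map phi(x) = [x1, x1*x2] (assumed reconstruction of the garbled statement)."""
    x1, x2 = x
    return (x1, x1 * x2)

def geometric_margin(w, feats, labels):
    """Smallest value of y * (w . f) / ||w|| over all points; no bias term."""
    norm = math.hypot(w[0], w[1])
    return min(lab * (w[0] * f[0] + w[1] * f[1]) / norm
               for f, lab in zip(feats, labels))

feats = [phi(x) for x in X]
print(feats)  # [(-1, 1), (1, 1), (-1, -1), (1, -1)]

# Candidate max-margin weight vector: threshold on the sign of the second feature.
w = (0.0, 1.0)
preds = [1 if w[0] * f[0] + w[1] * f[1] > 0 else -1 for f in feats]
print(preds == y, geometric_margin(w, feats, y))  # True 1.0

# Brute-force check over unit directions w = (cos t, sin t) in 0.1-degree steps.
angles = [k * math.pi / 1800 for k in range(3600)]
best_t = max(angles, key=lambda t: geometric_margin((math.cos(t), math.sin(t)), feats, y))
print(round(math.degrees(best_t), 1))

# Part 2: for this w the decision value in input space is w . phi(x) = x1 * x2.
def decision(x):
    f = phi(x)
    return w[0] * f[0] + w[1] * f[1]

print([decision(x) for x in X])  # [1.0, 1.0, -1.0, -1.0]
```

With w = [0, 1] the decision value is x1·x2, so in the original input space the separator x1·x2 = 0 is the pair of coordinate axes (x1 = 0 or x2 = 0), and the margin boundaries x1·x2 = +1 and x1·x2 = -1 are hyperbolas passing through the four training points.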

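For parts 3 and 4, a similar sketch can check a hand answer. Two assumptions matter here: the sixth point, garbled as "(0, )" in the statement, is taken to be (0, 1), and the margin is measured in the original input space, so the bias weight w[0] is left out of the norm. Instead of solving the SVM quadratic program, the sketch uses the standard fact that, for linearly separable data, the optimal hard margin equals half the distance between the convex hulls of the two classes; `max_margin` and the other helper names are hypothetical. The candidate w = [-3, 2, 2] corresponds to the line x1 + x2 = 1.5, scaled so that the closest points have y · (w · φ(x)) = 1.

```python
import math
from itertools import combinations_with_replacement

# Part 3 data. ASSUMPTION: the sixth point, garbled as "(0, )" in the statement,
# is taken to be (0, 1); change NEG if the original value differs.
POS = [(1, 1), (2, 2), (2, 0)]
NEG = [(0, 0), (1, 0), (0, 1)]

def phi(x):
    """Feature map phi(x) = [1, x1, x2]: a constant bias feature plus the raw inputs."""
    return (1, x[0], x[1])

def functional_margin(w, x, label):
    """y * (w . phi(x)); equals 1 exactly for support vectors of a canonical w."""
    return label * sum(wi * fi for wi, fi in zip(w, phi(x)))

def point_segment_dist(p, a, b):
    """Euclidean distance from point p to the closed segment [a, b] (a == b allowed)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len2 = dx * dx + dy * dy
    t = 0.0 if seg_len2 == 0 else ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len2
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))

def max_margin(pos, neg):
    """Optimal hard margin (with bias) = half the distance between the class convex hulls.

    Assumes the classes are linearly separable. For disjoint 2-D hulls the distance is
    attained between a vertex of one hull and an edge of the other, and every segment
    joining two points of a class lies inside its hull, so checking each point against
    each point pair (including degenerate pairs) gives the exact hull distance.
    """
    def one_way(points, others):
        return min(point_segment_dist(p, a, b)
                   for p in points
                   for a, b in combinations_with_replacement(others, 2))
    return min(one_way(pos, neg), one_way(neg, pos)) / 2

# Candidate from a hand solution: separator x1 + x2 = 1.5, scaled so min y*(w.phi) = 1.
w = (-3, 2, 2)
data = [(x, +1) for x in POS] + [(x, -1) for x in NEG]
margins = {x: functional_margin(w, x, lab) for x, lab in data}
assert min(margins.values()) == 1          # every point is correctly classified
geo = 1 / math.hypot(w[1], w[2])           # bias weight w[0] is not part of ||w||
support_vectors = [x for x, m in margins.items() if m == 1]
print(support_vectors, geo, max_margin(POS, NEG))

# Part 4: drop each support vector in turn and recompute the optimal margin.
for sv in support_vectors:
    pos = [x for x in POS if x != sv]
    neg = [x for x in NEG if x != sv]
    print(sv, round(max_margin(pos, neg), 4))
```

Under the (0, 1) assumption, the support vectors come out as (1, 1), (2, 0), (1, 0), and (0, 1), with margin √2/4 ≈ 0.354. Removing (1, 1) or (1, 0) raises the optimal margin to 0.5. Removing (2, 0) or (0, 1) leaves it at √2/4, because (1, 1) is still at distance 1/√2 from the segment joining (1, 0) and (0, 1), and (1, 0) is still at distance 1/√2 from the segment joining (1, 1) and (2, 0). Removing a point only drops a constraint, so the optimal margin can never decrease.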