
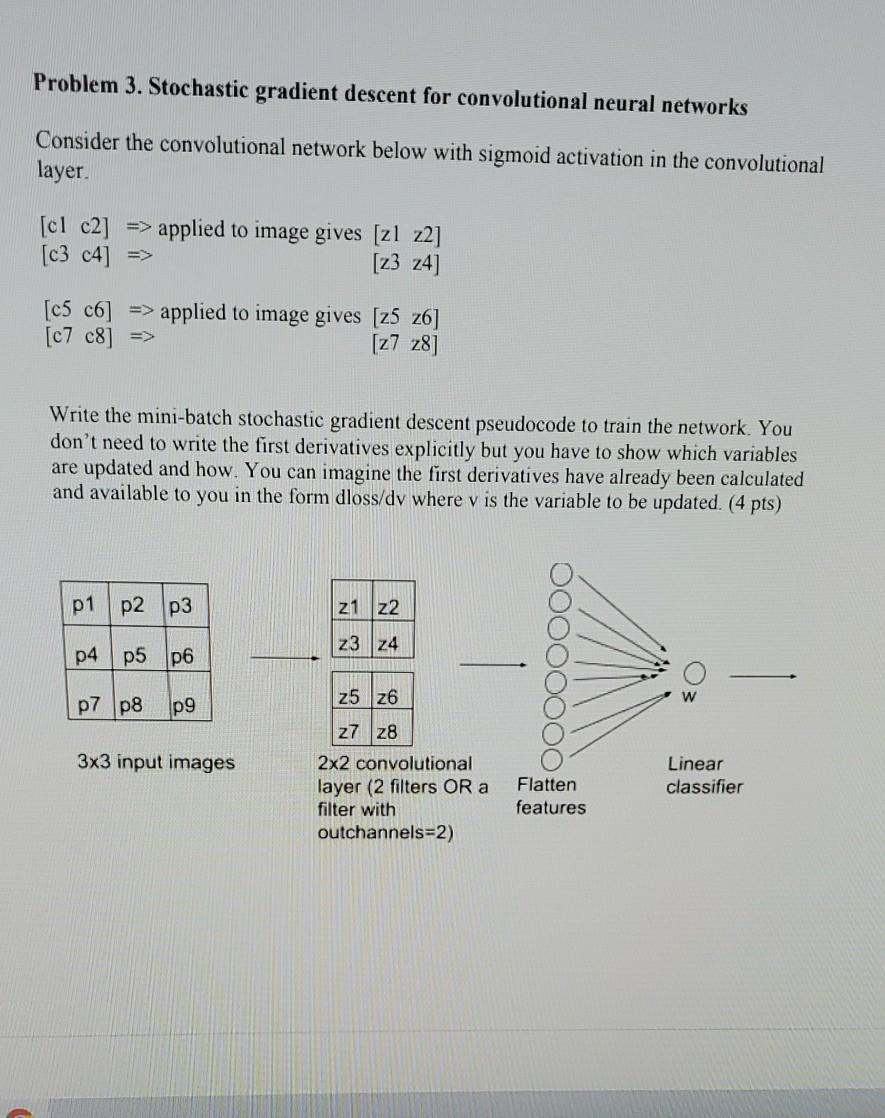

Problem 3. Stochastic gradient descent for convolutional neural networks

Consider the convolutional network below, with sigmoid activation in the convolutional layer. Its two 2×2 filters act on the 3×3 input image as follows:

$$
\begin{bmatrix} c_1 & c_2 \\ c_3 & c_4 \end{bmatrix} \xrightarrow{\text{applied to image}} \begin{bmatrix} z_1 & z_2 \\ z_3 & z_4 \end{bmatrix},
\qquad
\begin{bmatrix} c_5 & c_6 \\ c_7 & c_8 \end{bmatrix} \xrightarrow{\text{applied to image}} \begin{bmatrix} z_5 & z_6 \\ z_7 & z_8 \end{bmatrix}
$$

Write the mini-batch stochastic gradient descent pseudocode to train the network. You don't need to write the first derivatives explicitly, but you have to show which variables are updated and how. You can assume the first derivatives have already been calculated and are available to you in the form dloss/dv, where v is the variable to be updated. (4 pts)

Figure: 3×3 input image (pixels p1 to p9) → 2×2 convolutional layer (2 filters, or one filter with out_channels = 2) producing z1 to z8 → flattened features → linear classifier with weights w.
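The following is a minimal NumPy sketch of the mini-batch SGD loop the problem asks for, offered under stated assumptions rather than as an official solution. It assumes a stride-1 convolution with no padding and no bias terms (so each 2×2 filter maps the 3×3 image to a 2×2 map), treats z1 to z8 as pre-activation outputs that pass through the sigmoid before flattening, uses a single output score s = w · h, and picks binary labels with a binary cross-entropy loss (the problem does not name a loss). The helper names `forward`, `grads`, `mean_loss` and `train_sgd` are hypothetical; `grads` only stands in for the dloss/dv values the problem says are already available. The part that answers the question is the update step, where every filter weight c1 to c8 and every classifier weight w1 to w8 moves against its gradient averaged over the mini-batch $B$: $v \leftarrow v - \eta \cdot \frac{1}{|B|}\sum_{x \in B} \frac{\partial\,\text{loss}(x)}{\partial v}$.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv2x2_valid(image, filt):
    """Stride-1, no-padding 2x2 cross-correlation of a 3x3 array -> 2x2 map (assumption)."""
    out = np.empty((2, 2))
    for i in range(2):
        for j in range(2):
            out[i, j] = np.sum(filt * image[i:i + 2, j:j + 2])
    return out


def forward(image, C, w):
    """C[0] = [[c1, c2], [c3, c4]], C[1] = [[c5, c6], [c7, c8]]; w holds w1..w8."""
    z = np.stack([conv2x2_valid(image, C[k]) for k in range(2)])  # z1..z8, pre-activation
    h = sigmoid(z).reshape(-1)  # sigmoid activation, then flatten to 8 features
    s = w @ h                   # linear classifier score (single output, no bias: assumption)
    return h, s


def grads(image, label, C, w):
    """Hypothetical stand-in for the given dloss/dv: returns (dloss/dC, dloss/dw, loss).
    Assumed loss: binary cross-entropy on sigmoid(s), with label in {0, 1}."""
    h, s = forward(image, C, w)
    loss = np.logaddexp(0.0, s) - label * s  # numerically stable BCE on the logit s
    ds = sigmoid(s) - label                  # dloss/ds
    dw = ds * h                              # dloss/dw_m = ds * h_m
    dz = (ds * w * h * (1.0 - h)).reshape(2, 2, 2)  # back through the sigmoid
    # dloss/dC_k[a, b] = sum_{i,j} dz_k[i, j] * image[i + a, j + b]
    dC = np.stack([conv2x2_valid(image, dz[k]) for k in range(2)])
    return dC, dw, loss


def mean_loss(images, labels, C, w):
    return float(np.mean([grads(x, t, C, w)[2] for x, t in zip(images, labels)]))


def train_sgd(images, labels, lr=0.1, batch_size=4, epochs=100, seed=0):
    rng = np.random.default_rng(seed)
    C = rng.normal(scale=0.5, size=(2, 2, 2))  # c1..c8
    w = rng.normal(scale=0.5, size=8)          # w1..w8
    n = len(images)
    for _ in range(epochs):
        order = rng.permutation(n)             # reshuffle every epoch
        for start in range(0, n, batch_size):
            batch = order[start:start + batch_size]
            gC = np.zeros_like(C)
            gw = np.zeros_like(w)
            for idx in batch:                  # accumulate dloss/dv over the mini-batch
                dC, dw, _ = grads(images[idx], labels[idx], C, w)
                gC += dC
                gw += dw
            # v <- v - lr * (mean gradient over the batch), for every c_i and every w_m
            C -= lr * gC / len(batch)
            w -= lr * gw / len(batch)
    return C, w


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.normal(size=(16, 3, 3))            # toy 3x3 "images"
    y = (X[:, 1, 1] > 0).astype(float)         # toy label: sign of the center pixel
    C0, w0 = train_sgd(X, y, epochs=0)         # same seed, no steps -> initial parameters
    C, w = train_sgd(X, y)
    print("loss before:", mean_loss(X, y, C0, w0))
    print("loss after: ", mean_loss(X, y, C, w))
```

Running the file trains on a toy set of random 3×3 inputs labelled by the sign of the center pixel and prints the mean loss before and after training. Averaging (rather than summing) the gradients over the batch keeps the step size independent of `batch_size`, and reshuffling each epoch is what makes the loop stochastic.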
