Question: Proximal Gradient Descent. The gradient descent algorithm cannot be directly applied since the objective function is non-differentiable. Discuss why the objective function is non-differentiable.

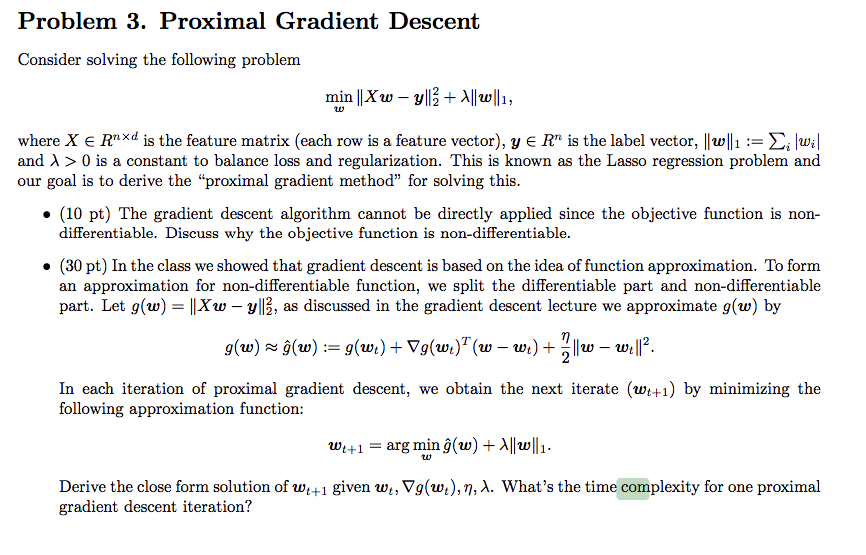

Problem 3. Proximal Gradient Descent

Consider solving the following problem:

$$\min_{w \in \mathbb{R}^d} \; \frac{1}{2}\|Xw - y\|_2^2 + \lambda \|w\|_1,$$

where $X \in \mathbb{R}^{n \times d}$ is the feature matrix (each row is a feature vector), $y \in \mathbb{R}^n$ is the label vector, $\|w\|_1 = \sum_i |w_i|$, and $\lambda > 0$ is a constant to balance loss and regularization. This is known as the Lasso regression problem, and our goal is to derive the "proximal gradient method" for solving it.

(10 pt) The gradient descent algorithm cannot be directly applied since the objective function is non-differentiable. Discuss why the objective function is non-differentiable.

(30 pt) In class we showed that gradient descent is based on the idea of function approximation. To form an approximation for a non-differentiable function, we split the differentiable part and the non-differentiable part. Let $g(w) = \frac{1}{2}\|Xw - y\|_2^2$. As discussed in the gradient descent lecture, we approximate $g(w)$ by

$$\hat{g}(w) = g(w_t) + \nabla g(w_t)^\top (w - w_t) + \frac{1}{2\eta}\|w - w_t\|_2^2 .$$

In each iteration of proximal gradient descent, we obtain the next iterate ($w_{t+1}$) by minimizing the following approximation function:

$$w_{t+1} = \arg\min_w \; \hat{g}(w) + \lambda \|w\|_1 .$$

Derive the closed-form solution of $w_{t+1}$ given $w_t$, $\nabla g(w_t)$, $\eta$, $\lambda$. What is the time complexity of one proximal gradient descent iteration?
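A minimal sketch of one way the iteration could look in code, under assumptions that are not fixed by the problem text: the smooth part is taken to be g(w) = 0.5 * ||Xw - y||^2, the quadratic approximation uses a step size `eta` (so the proximity term is ||w - w_t||^2 / (2 * eta)), and the function names `soft_threshold`, `proximal_gradient_step`, and `lasso_objective` are hypothetical. Under these assumptions the minimization separates per coordinate, and the update is soft-thresholding applied to a plain gradient step, w_{t+1} = S(w_t - eta * grad g(w_t), eta * lam). The synthetic data is illustrative only.

```python
import numpy as np

def soft_threshold(z, tau):
    # Elementwise soft-thresholding: shrink each entry toward zero by tau,
    # and set entries with |z_i| <= tau exactly to zero.
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def proximal_gradient_step(w, X, y, lam, eta):
    # Assumed smooth part: g(w) = 0.5 * ||Xw - y||^2, so grad g(w) = X^T (Xw - y).
    # Two matrix-vector products, O(n d) time.
    grad = X.T @ (X @ w - y)
    # Gradient step on g, then the prox of eta * lam * ||.||_1, O(d) time.
    return soft_threshold(w - eta * grad, eta * lam)

def lasso_objective(w, X, y, lam):
    residual = X @ w - y
    return 0.5 * residual @ residual + lam * np.sum(np.abs(w))

# Small synthetic example (illustrative data, not from the problem).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
w_true = np.array([2.0, 0.0, -1.0, 0.0, 0.0])
y = X @ w_true
lam = 0.1
# Assumed step size: 1 / L, with L the largest eigenvalue of X^T X.
eta = 1.0 / np.linalg.norm(X, 2) ** 2

w = np.zeros(5)
history = [lasso_objective(w, X, y, lam)]
for _ in range(200):
    w = proximal_gradient_step(w, X, y, lam, eta)
    history.append(lasso_objective(w, X, y, lam))
```

In this sketch each iteration is dominated by the two matrix-vector products, so one step costs O(nd); the thresholding itself is O(d). The step size 1/L is one common choice that keeps the objective from increasing, not the only valid one.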
