Question: Problem 4 (PROBABILISTIC LATENT VARIABLE MODEL AND ITS RELATION TO PCA)

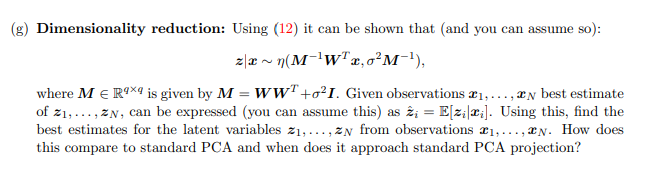

Problem 4 (PROBABILISTIC LATENT VARIABLE MODEL AND ITS RELATION TO PCA)

Consider the latent variable model

$$x = Wz + e, \tag{12}$$

where $x \in \mathbb{R}^d$, $z \in \mathbb{R}^q$, $W \in \mathbb{R}^{d \times q}$ and $e \in \mathbb{R}^d$. The probabilistic model uses $z \sim \mathcal{N}(0, I)$ and $e \sim \mathcal{N}(0, \sigma^2 I)$, where $e$ is independent of $z$. We make $N$ observations of $x$, i.e., $x_1, \ldots, x_N$, and we do not know the matrix $W$ or the noise variance $\sigma^2$, which we try to estimate by maximum likelihood.

(a) Prove that, using (12), $x \sim \mathcal{N}(0, WW^T + \sigma^2 I)$.

(b) Using (a), show that the log likelihood function $\mathcal{L}$ of $x_1, \ldots, x_N$, i.e., $\log f(x_1, \ldots, x_N)$, is given by

$$\mathcal{L} = -\frac{N}{2}\left\{ d\log(2\pi) + \log|C| + \operatorname{trace}(C^{-1}S) \right\}, \tag{13}$$

where $C = WW^T + \sigma^2 I$ and $S = \frac{1}{N}\sum_{i=1}^{N} x_i x_i^T$.

Hint: Stack up $\tilde{x} = [x_1^T, \ldots, x_N^T]^T$ and notice that $\tilde{x} \sim \mathcal{N}(0, \tilde{C})$, where $\tilde{C}$ is a block diagonal matrix:

$$\tilde{C} = \begin{bmatrix} C & 0 & \cdots & 0 \\ 0 & C & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & C \end{bmatrix},$$

and use the property $\operatorname{trace}(AB) = \operatorname{trace}(BA)$.

(c) We can show (and you can assume) that

$$\frac{\partial \mathcal{L}}{\partial W} = N\left(C^{-1} S C^{-1} W - C^{-1} W\right). \tag{14}$$

Use (14) to show that the optimal solution satisfies

$$S C^{-1} W = W. \tag{15}$$

(d) We will use a singular value decomposition form for $W$ as

$$W = U L V^T, \tag{16}$$

where $U \in \mathbb{R}^{d \times q}$ has orthonormal columns, $V \in \mathbb{R}^{q \times q}$ is orthonormal, i.e., $VV^T = I$, and $L = \operatorname{diag}(l_1, \ldots, l_q)$ represents the singular values. Upon substituting (16) in (15) we can show (and you can assume) that

$$S U L = U(\sigma^2 I + L^2) L. \tag{17}$$

Use (17) to show that for $l_i \neq 0$, $S u_i = (\sigma^2 + l_i^2) u_i$, i.e., the $u_i$ are eigenvectors of $S$ when $l_i \neq 0$. Note that when $l_i = 0$, we can choose $u_i$ arbitrarily. Therefore the question becomes how many such eigenvectors we choose. Using this, we can show (and you can assume) that

$$W = U_q (K_q - \sigma^2 I)^{1/2} R, \tag{18}$$

where $U_q \in \mathbb{R}^{d \times q}$ has $q$ eigenvectors of $S$ (not necessarily the largest), $R \in \mathbb{R}^{q \times q}$ is an arbitrary orthogonal matrix, and

$$k_j = \begin{cases} \lambda_{p(j)}, & \text{the corresponding eigenvalue to } u_j, \text{ or} \\ \sigma^2, & \text{else,} \end{cases} \tag{19}$$

where, to avoid confusion with ordered eigenvalues, we have used $p(\cdot)$ as some permutation of it.

(e) We will next show (and you can assume) that the maximum likelihood estimate of $W, \sigma^2$ from maximizing (13) is given by

$$W_{ML} = U_q (\Lambda_q - \sigma^2 I)^{1/2} R,$$

where $U_q \in \mathbb{R}^{d \times q}$ has the $q$ eigenvectors of $S$ with the largest eigenvalues, $\lambda_1 \geq \lambda_2 \geq \ldots \geq \lambda_d$, with diagonal matrix $\Lambda_q = \operatorname{diag}(\lambda_1, \ldots, \lambda_q)$, and $R \in \mathbb{R}^{q \times q}$ is an arbitrary orthogonal matrix, i.e., $RR^T = I$. Moreover,

$$\sigma^2_{ML} = \frac{1}{d-q}\sum_{i=q+1}^{d} \lambda_i.$$

The expression in (18), when substituted into (13) and maximized over $\sigma^2$, can be shown to be (and you can assume so):

$$\mathcal{L} = -\frac{N}{2}\left\{ d\log(2\pi) + d + \sum_{j=1}^{q'} \log(\lambda_{p(j)}) + (d-q')\log\left(\frac{1}{d-q'}\sum_{j=q'+1}^{d} \lambda_{p(j)}\right) \right\}. \tag{20}$$

Show that since $\sum_{j=1}^{d} \log(\lambda_{p(j)}) = \sum_{i=1}^{d} \log(\lambda_i) = \log|S|$, we can write (20) as

$$\mathcal{L} = -\frac{N}{2}\left\{ d\log(2\pi) + d + \log|S| \right\} - \frac{N}{2}\left\{ -\sum_{j=q'+1}^{d} \log(\lambda_{p(j)}) + (d-q')\log\left(\frac{1}{d-q'}\sum_{j=q'+1}^{d} \lambda_{p(j)}\right) \right\}. \tag{21}$$

(f) Therefore the maximum likelihood choice for $p(\cdot)$ is related to minimizing, over $p(\cdot)$ and $q'$,

$$-\sum_{j=q'+1}^{d} \log(\lambda_{p(j)}) + (d-q')\log\left(\frac{1}{d-q'}\sum_{j=q'+1}^{d} \lambda_{p(j)}\right).$$

Show that the optimal choice is $q' = q$ and that $p(\cdot)$ chooses the $q$ largest eigenvalues $\lambda_1, \ldots, \lambda_q$.

(g) Dimensionality reduction: Using (12) it can be shown that (and you can assume so):

$$z \mid x \sim \mathcal{N}(M^{-1}W^T x, \sigma^2 M^{-1}),$$

where $M \in \mathbb{R}^{q \times q}$ is given by $M = W^T W + \sigma^2 I$. Given observations $x_1, \ldots, x_N$, the best estimate of $z_1, \ldots, z_N$ can be expressed (you can assume this) as $\hat{z}_i = E[z_i \mid x_i]$. Using this, find the best estimates for the latent variables $z_1, \ldots, z_N$ from observations $x_1, \ldots, x_N$. How does this compare to standard PCA and when does it approach standard PCA projection?
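A numerical sanity check can make the closed-form results in parts (c), (e) and (g) more concrete. The following is a minimal NumPy sketch, not a solution to the problem: the function names (`ppca_ml`, `log_likelihood`, `posterior_mean`), the synthetic data, and the choice $R = I$ are all assumptions made for illustration. It builds the ML estimates from the eigendecomposition of $S$, evaluates the log likelihood (13), measures how well the stationarity condition (15) holds, and computes the posterior means $M^{-1}W^T x_i$ from part (g).

```python
import numpy as np

def ppca_ml(X, q):
    """Closed-form ML estimates for zero-mean data X of shape (N, d).

    Assumes R = I in W_ML = U_q (Lambda_q - sigma^2 I)^{1/2} R.
    """
    N, d = X.shape
    S = X.T @ X / N                          # S = (1/N) sum_i x_i x_i^T
    lam, U = np.linalg.eigh(S)               # eigenvalues in ascending order
    lam, U = lam[::-1], U[:, ::-1]           # reorder to descending
    sigma2 = lam[q:].mean()                  # average of the d - q discarded eigenvalues
    W = U[:, :q] * np.sqrt(lam[:q] - sigma2) # scale column j of U_q by sqrt(lambda_j - sigma^2)
    return W, sigma2, S

def log_likelihood(S, W, sigma2, N):
    """Log likelihood (13) with C = W W^T + sigma^2 I."""
    d = S.shape[0]
    C = W @ W.T + sigma2 * np.eye(d)
    _, logdet = np.linalg.slogdet(C)
    return -0.5 * N * (d * np.log(2 * np.pi) + logdet
                       + np.trace(np.linalg.solve(C, S)))

def posterior_mean(X, W, sigma2):
    """Rows are E[z_i | x_i] = M^{-1} W^T x_i with M = W^T W + sigma^2 I."""
    q = W.shape[1]
    M = W.T @ W + sigma2 * np.eye(q)
    return np.linalg.solve(M, W.T @ X.T).T

# Synthetic data drawn from the model x = W z + e (illustrative values only).
rng = np.random.default_rng(0)
d, q, N = 5, 2, 2000
W_true = rng.normal(size=(d, q))
X = rng.normal(size=(N, q)) @ W_true.T + 0.3 * rng.normal(size=(N, d))

W, sigma2, S = ppca_ml(X, q)
C = W @ W.T + sigma2 * np.eye(d)
residual = np.abs(S @ np.linalg.solve(C, W) - W).max()  # max deviation from S C^{-1} W = W
Z_hat = posterior_mean(X, W, sigma2)                    # shape (N, q)
print("sigma2_ML:", sigma2)
print("stationarity residual:", residual)
print("log likelihood:", log_likelihood(S, W, sigma2, N))
```

With $R = I$, $M = W^T W + \sigma^2 I$ reduces to $\Lambda_q$, so each $\hat{z}_i$ is the PCA projection $U_q^T x_i$ rescaled per component by $(\lambda_j - \sigma^2)^{1/2}/\lambda_j$. Rerunning the sketch with a smaller noise scale is one way to watch the reconstruction $W\hat{z}_i$ approach the orthogonal projection $U_q U_q^T x_i$.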
