Question: Python

Using the code below:

1. Adjust parameters to improve accuracy, and discuss the activities you performed and the results.

2. Generate a TensorBoard and produce graphics that show the improvements you were able to gain, for example loss and accuracy for training and validation.

# Load the libraries. Load them all here, not sprinkled throughout the notebook.
import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.pipeline import Pipeline
from sklearn.decomposition import PCA
from sklearn.preprocessing import OneHotEncoder
from sklearn.model_selection import GridSearchCV
from sklearn.metrics import accuracy_score, confusion_matrix, classification_report, recall_score, precision_score, f1_score
import warnings
warnings.filterwarnings('ignore')  # ignore warnings
%load_ext tensorboard
import tensorflow as tf
from tensorflow.keras.callbacks import EarlyStopping
from tensorflow.keras.optimizers import Adam, Nadam, RMSprop

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
from google.colab import drive
drive.mount('/content/drive')
df_mnist_train = pd.read_csv('/content/drive/MyDrive/Colab Notebooks/data/mnist_train.csv')
df_mnist_test = pd.read_csv('/content/drive/MyDrive/Colab Notebooks/data/mnist_test.csv')

Model Development

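# "..." marks a value that did not survive in the source
batch_size = ...
num_classes = ...
epochs = ...
input_shape = ...
optimizer = Adam(learning_rate=...)
log_dir = "logs/fit"  # Adjust the directory as needed
tensorboard_callback = tf.keras.callbacks.TensorBoard(log_dir=log_dir, histogram_freq=...)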
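The TensorBoard callback above writes every run to the same directory. To compare several parameter settings side by side, each run usually needs its own subdirectory under the log root. The sketch below shows one way to build such a path; the helper name `make_run_logdir`, the parameter keys, and the values are hypothetical and not part of the original notebook.

```python
import os
from datetime import datetime


def make_run_logdir(root, params, now=None):
    """Build a per-run log directory path from a timestamp and the run's settings.

    `root` and the keys in `params` are illustrative assumptions.
    """
    now = now or datetime.now()
    stamp = now.strftime("%Y%m%d-%H%M%S")
    # Sort the keys so the same settings always produce the same suffix.
    suffix = "_".join(f"{key}{params[key]}" for key in sorted(params))
    return os.path.join(root, f"{stamp}_{suffix}")


run_dir = make_run_logdir(
    "logs/fit",
    {"bs": 128, "lr": 0.001},  # hypothetical batch size and learning rate
    now=datetime(2024, 1, 1, 12, 0, 0),  # fixed time so the result is repeatable
)
print(run_dir)
```

Passing a path like this as `log_dir` to `tf.keras.callbacks.TensorBoard`, and pointing `%tensorboard --logdir` at the root directory, should list each run separately so their loss and accuracy curves can be overlaid.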

model = tf.keras.models.Sequential([
    # "..." marks arguments (filters, kernel size, pool size, rates, units) that did not survive in the source
    tf.keras.layers.Conv2D(..., padding='same', activation='relu', input_shape=input_shape),
    tf.keras.layers.Conv2D(..., padding='same', activation='relu'),
    tf.keras.layers.MaxPool2D(...),
    tf.keras.layers.Dropout(...),
    tf.keras.layers.Conv2D(..., padding='same', activation='relu'),
    tf.keras.layers.Conv2D(..., padding='same', activation='relu'),
    tf.keras.layers.MaxPool2D(..., strides=...),
    tf.keras.layers.Dropout(...),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(..., activation='relu'),
    tf.keras.layers.Dropout(...),
    tf.keras.layers.Dense(num_classes, activation='softmax'),
])

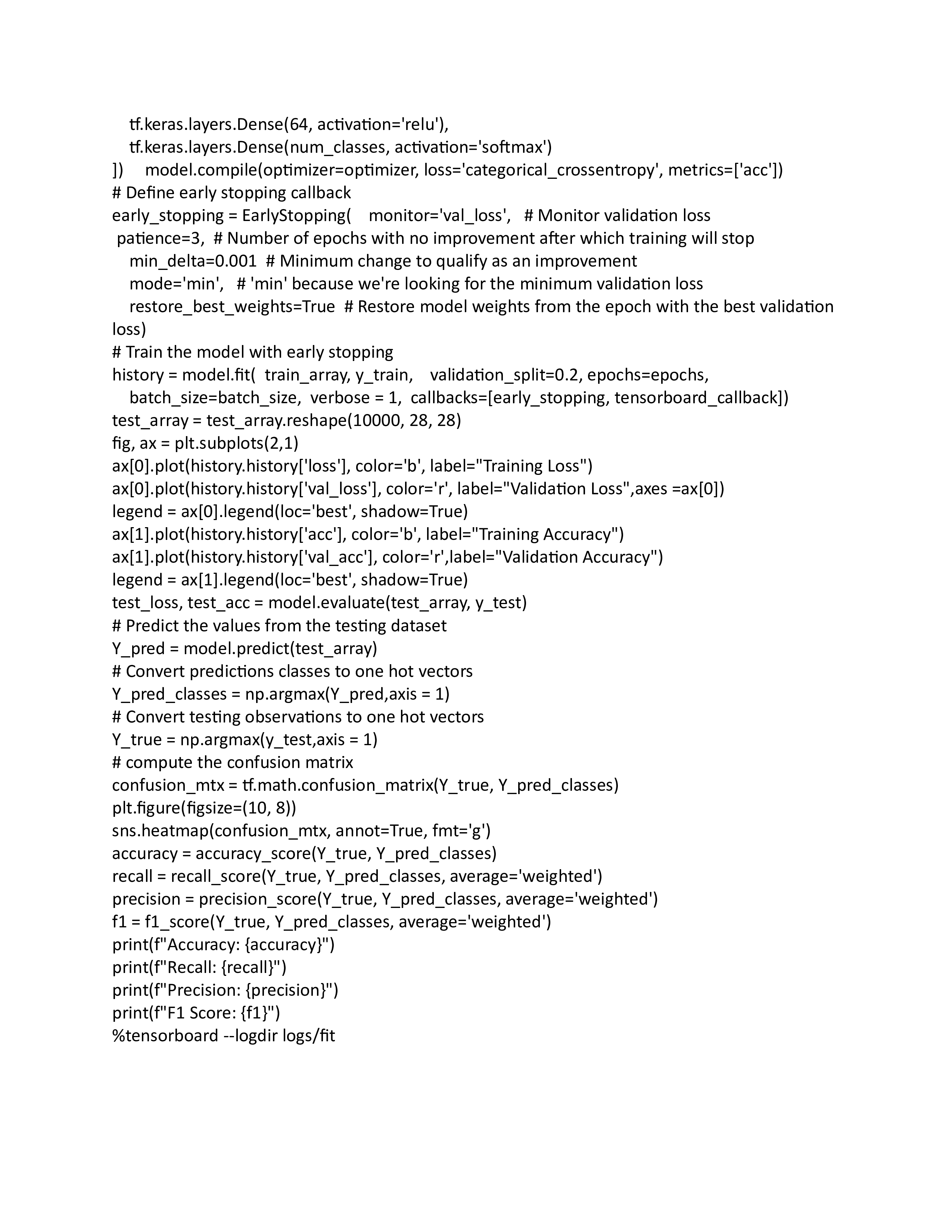

model.compile(optimizer=optimizer, loss='categorical_crossentropy', metrics=['acc'])

# Define early stopping callback ("..." marks a value that did not survive in the source)
early_stopping = EarlyStopping(
    monitor='val_loss',  # Monitor validation loss
    patience=...,  # Number of epochs with no improvement after which training will stop
    min_delta=...,  # Minimum change to qualify as an improvement
    mode='min',  # 'min' because we're looking for the minimum validation loss
    restore_best_weights=True  # Restore model weights from the epoch with the best validation loss
)
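The interaction between `patience` and `min_delta` is easier to see on a small example. The following is a simplified illustration of the idea for `mode='min'`, not the Keras implementation; the function name `early_stopping_epoch` and the loss values are made up.

```python
def early_stopping_epoch(val_losses, patience, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a list of per-epoch validation losses.

    An epoch counts as an improvement only if the loss drops below the best
    value seen so far by more than `min_delta`. Training stops once `patience`
    consecutive epochs pass without an improvement.
    """
    best = float("inf")
    best_epoch = None
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    # Patience was never exhausted: training ran through the last epoch.
    return len(val_losses) - 1, best_epoch


losses = [0.90, 0.60, 0.50, 0.52, 0.51, 0.55, 0.40]  # made-up validation losses
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=3)
print(stop_epoch, best_epoch)
```

With `patience=3` this run stops at epoch index 5 and never reaches the lower loss at index 6, which is why a too-small patience can cut training short. With `restore_best_weights=True`, the weights from `best_epoch` are the ones kept.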

from sys import version

# Train the model with early stopping ("..." marks a value that did not survive in the source)
history = model.fit(
    train_array, y_train,
    validation_split=...,
    epochs=epochs,
    batch_size=batch_size,
    verbose=...,
    callbacks=[early_stopping, tensorboard_callback]
)
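When tuning, it helps to know which samples `validation_split` holds out. According to the Keras documentation, the validation data is taken from the last samples of the arrays passed to `fit`, before any shuffling. The sketch below mimics that behaviour on a plain list; the function name `train_validation_split` and the sample data are hypothetical.

```python
import math


def train_validation_split(samples, validation_split):
    """Split a sequence into (training part, validation part).

    The validation part is the trailing fraction of the data, which mirrors
    how `validation_split` is documented to behave in Keras `fit`.
    """
    split_at = int(math.floor(len(samples) * (1.0 - validation_split)))
    return samples[:split_at], samples[split_at:]


train_part, val_part = train_validation_split(list(range(10)), 0.2)
print(train_part)
print(val_part)
```

If the training data is sorted by label, the tail may not be representative, so shuffling the arrays once before calling `fit` is a reasonable precaution.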

fig, ax = plt.subplots(2, 1)
ax[0].plot(history.history['loss'], color='b', label="Training Loss")
ax[0].plot(history.history['val_loss'], color='r', label="Validation Loss")
legend = ax[0].legend(loc='best', shadow=True)
ax[1].plot(history.history['acc'], color='b', label="Training Accuracy")
ax[1].plot(history.history['val_acc'], color='r', label="Validation Accuracy")
legend = ax[1].legend(loc='best', shadow=True)

test_loss, test_acc = model.evaluate(test_array, y_test)
# Predict the values from the testing dataset
Y_pred = model.predict(test_array)
# Convert predicted class probabilities to class labels
Y_pred_classes = np.argmax(Y_pred, axis=1)
# Convert one-hot encoded test labels to class labels
Y_true = np.argmax(y_test, axis=1)
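Both conversions above pick, for each row, the index of the largest entry. A dependency-free sketch of that operation follows; `row_argmax` is a hypothetical helper and the rows are made-up values. On NumPy arrays, `np.argmax(rows, axis=1)` performs the same step.

```python
def row_argmax(rows):
    """Return the index of the largest value in each row.

    Ties resolve to the first maximal index, as np.argmax does.
    """
    return [max(range(len(row)), key=row.__getitem__) for row in rows]


probs = [[0.1, 0.7, 0.2], [0.8, 0.1, 0.1]]  # made-up softmax outputs
one_hot = [[0, 1, 0], [0, 0, 1]]  # made-up one-hot labels
pred_classes = row_argmax(probs)
true_classes = row_argmax(one_hot)
print(pred_classes, true_classes)
```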

# Compute the confusion matrix ("..." marks a value that did not survive in the source)
confusion_mtx = tf.math.confusion_matrix(Y_true, Y_pred_classes)
plt.figure(figsize=...)
sns.heatmap(confusion_mtx, annot=True, fmt='g')

accuracy = accuracy_score(Y_true, Y_pred_classes)
recall = recall_score(Y_true, Y_pred_classes, average='weighted')
precision = precision_score(Y_true, Y_pred_classes, average='weighted')
f1 = f1_score(Y_true, Y_pred_classes, average='weighted')
print(f"Accuracy: {accuracy}")
print(f"Recall: {recall}")
print(f"Precision: {precision}")
print(f"F1 Score: {f1}")
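With `average='weighted'`, scikit-learn computes each metric per class and averages the results weighted by the number of true samples in each class. The sketch below reproduces that calculation for precision and recall in plain Python, to make the reported numbers easier to interpret; the function name and the label lists are made up for illustration.

```python
def weighted_precision_recall(y_true, y_pred):
    """Return support-weighted (precision, recall) over all classes."""
    labels = sorted(set(y_true) | set(y_pred))
    total = len(y_true)
    precision = 0.0
    recall = 0.0
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        predicted = sum(p == label for p in y_pred)  # samples predicted as this class
        support = sum(t == label for t in y_true)  # samples truly in this class
        class_precision = tp / predicted if predicted else 0.0
        class_recall = tp / support if support else 0.0
        precision += class_precision * support / total
        recall += class_recall * support / total
    return precision, recall


y_true = [0, 0, 1, 1, 1, 2]  # made-up labels
y_pred = [0, 1, 1, 1, 2, 2]  # made-up predictions
precision, recall = weighted_precision_recall(y_true, y_pred)
print(round(precision, 4), round(recall, 4))
```

Note that weighted recall always equals plain accuracy, so on a balanced dataset such as MNIST the four printed numbers tend to be very close to one another.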

%tensorboard --logdir logs/fit

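# "..." marks a value that did not survive in the source
batch_size = ...
num_classes = ...
epochs = ...
train_array = train_array.reshape(...)
optimizer = Adam(learning_rate=...)
log_dir = "logs/fit"  # Adjust the directory as needed
tensorboard_callback = tf.keras.callbacks.TensorBoard(log_dir=log_dir, histogram_freq=...)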

The code is continued in the image.
