Question: Q4. Construct an MDP to find an optimal policy for an agent in a discrete, two-dimensional space

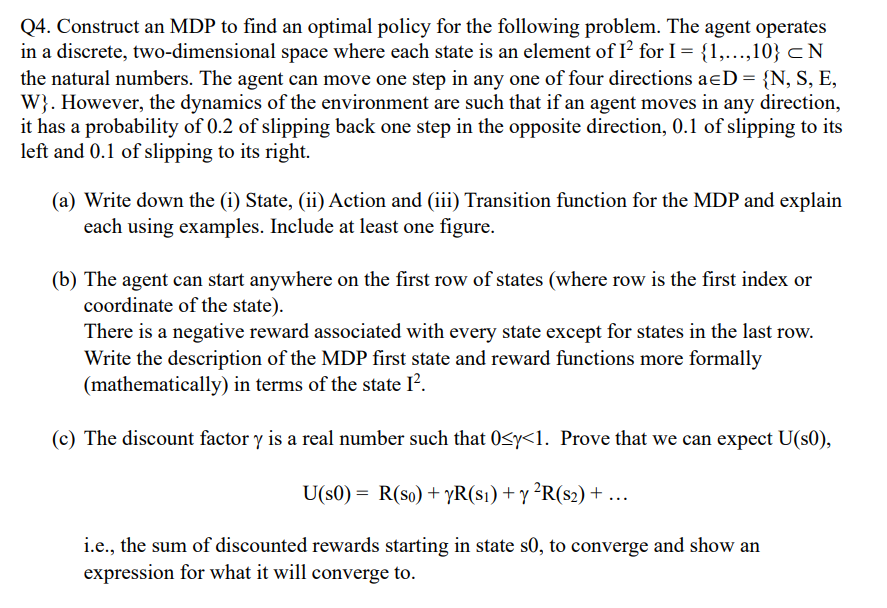

Construct an MDP to find an optimal policy for the following problem. The agent operates in a discrete, two-dimensional space where each state is an element of $\mathbb{N} \times \mathbb{N}$, with $\mathbb{N}$ the natural numbers. The agent can move one step in any one of four directions (N, E, S, W). However, the dynamics of the environment are such that if an agent moves in any direction, it has a probability of … of slipping back one step in the opposite direction, … of slipping to its left, and … of slipping to its right.

(a) Write down the (i) state, (ii) action, and (iii) transition function for the MDP and explain each using examples. Include at least one figure.
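As an illustration of what part (a) asks for, here is a minimal Python sketch of a slip-prone transition function. Several things in it are assumptions rather than part of the question: the slip probabilities `P_BACK`, `P_LEFT` and `P_RIGHT` are placeholder values (the actual numbers are missing from the problem statement), the grid is treated as finite with 0-indexed `(row, column)` states, "N" is taken to decrease the row index, and a move that would leave the grid is assumed to leave the agent where it is. The names `transition`, `ACTIONS` and `ORDER` are hypothetical.

```python
from collections import defaultdict

# Placeholder slip probabilities: the real values are missing from the
# problem statement, so these numbers are assumptions for illustration only.
P_BACK, P_LEFT, P_RIGHT = 0.125, 0.125, 0.125
P_INTENDED = 1.0 - (P_BACK + P_LEFT + P_RIGHT)

# Actions as (row, column) offsets. That "N" decreases the row is an assumption.
ACTIONS = {"N": (-1, 0), "E": (0, 1), "S": (1, 0), "W": (0, -1)}
ORDER = ["N", "E", "S", "W"]  # clockwise, so index + 1 is a right turn


def transition(state, action, n_rows, n_cols):
    """Return {next_state: probability} for taking `action` in `state`."""
    i = ORDER.index(action)
    outcomes = {
        action: P_INTENDED,            # the move goes as intended
        ORDER[(i + 2) % 4]: P_BACK,    # slip in the opposite direction
        ORDER[(i - 1) % 4]: P_LEFT,    # slip to the agent's left
        ORDER[(i + 1) % 4]: P_RIGHT,   # slip to the agent's right
    }
    dist = defaultdict(float)
    for direction, prob in outcomes.items():
        d_row, d_col = ACTIONS[direction]
        row, col = state[0] + d_row, state[1] + d_col
        # Assumed boundary rule: a move off the grid leaves the agent in place.
        if not (0 <= row < n_rows and 0 <= col < n_cols):
            row, col = state
        dist[(row, col)] += prob
    return dict(dist)
```

For example, `transition((1, 1), "N", 3, 3)` spreads probability over the four neighbours of `(1, 1)`, with most of the mass on `(0, 1)`. Returning a dictionary keeps each row of the transition function explicit, which makes it easy to check that the probabilities for any state-action pair sum to 1.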

(b) The agent can start anywhere on the first row of states, where the row is the first index (or coordinate) of the state. There is a negative reward associated with every state except for states in the last row. Write the description of the MDP (start-state and reward functions) more formally (mathematically) in terms of the state.

(c) The discount factor $\gamma$ is a real number such that $0 \le \gamma < 1$. Prove that we can expect $r_0 + \gamma r_1 + \gamma^2 r_2 + \dots$, i.e. the sum of discounted rewards starting in state $s$, to converge, and show an expression for what it will converge to.
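The convergence claim in part (c) usually rests on the geometric series. The sketch below assumes a discount factor $\gamma$ with $0 \le \gamma < 1$ and per-step rewards $r_t$ bounded in magnitude by some constant $R_{\max}$. The symbols $r_t$ and $R_{\max}$ are introduced here for illustration and do not come from the question.

```latex
\left| \sum_{t=0}^{\infty} \gamma^{t} r_t \right|
  \;\le\; \sum_{t=0}^{\infty} \gamma^{t} \, |r_t|
  \;\le\; R_{\max} \sum_{t=0}^{\infty} \gamma^{t}
  \;=\; \frac{R_{\max}}{1 - \gamma}
```

Because the series converges absolutely, the discounted sum is finite for any bounded reward sequence. In the special case where the agent receives the same reward $r$ at every step, the sum equals $r / (1 - \gamma)$ exactly.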
