Question: Q9 Policy Iteration: Cycle 1 (4 points)

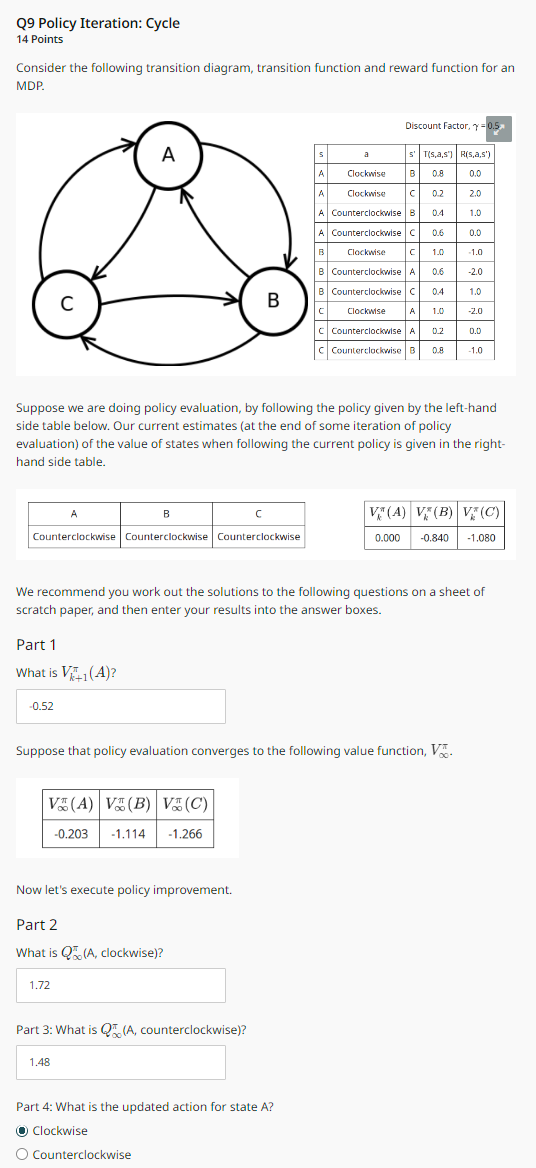

Consider the following transition diagram, transition function, and reward function for an MDP.

Discount factor: $\gamma$

Suppose we are doing policy evaluation by following the policy given in the left-hand table below. Our current estimates of the state values under this policy, at the end of some iteration of policy evaluation, are given in the right-hand table.

We recommend you work out the solutions to the following questions on a sheet of scratch paper, and then enter your results into the answer boxes.

Part 1: What is $V_k^{\pi}(A)$?
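As a rough illustration of the computation this part asks for, the sketch below performs one synchronous sweep of iterative policy evaluation, turning the current estimates into the next ones. Everything in it is an assumption made for illustration: the transition table `TRANSITIONS`, the rewards, `GAMMA`, the policy, and the starting values are hypothetical placeholders, not the numbers from the question's diagram or tables.

```python
# One sweep of iterative policy evaluation on a HYPOTHETICAL 3-state cycle MDP.
# None of these numbers come from the question; substitute the real tables.

GAMMA = 0.5  # assumed discount factor

# TRANSITIONS[state][action] -> list of (probability, next_state, reward)
TRANSITIONS = {
    "A": {
        "clockwise": [(1.0, "B", 0.0)],
        "counterclockwise": [(1.0, "C", -2.0)],
    },
    "B": {
        "clockwise": [(0.5, "A", -1.0), (0.5, "C", 2.0)],
        "counterclockwise": [(1.0, "A", -1.0)],
    },
    "C": {
        "clockwise": [(0.5, "A", 2.0), (0.5, "B", 2.0)],
        "counterclockwise": [(1.0, "B", -1.0)],
    },
}

# Fixed policy being evaluated, and the current value estimates (both assumed).
POLICY = {"A": "counterclockwise", "B": "counterclockwise", "C": "counterclockwise"}
V_CURRENT = {"A": 0.0, "B": 1.0, "C": 2.0}


def evaluation_sweep(values, policy, transitions, gamma):
    """Apply one Bellman backup per state, always taking the policy's action."""
    return {
        s: sum(p * (r + gamma * values[s2]) for p, s2, r in transitions[s][policy[s]])
        for s in transitions
    }


v_next = evaluation_sweep(V_CURRENT, POLICY, TRANSITIONS, GAMMA)
print(v_next)
```

The update is synchronous: every new value is computed from the old estimates only, so the order in which states are visited does not matter.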

Suppose that policy evaluation converges to the following value function, $V_\infty^{\pi}$:

Now let's execute policy improvement.

Part 2: What is $Q_\infty^{\pi}(A, \text{clockwise})$?

Part 3: What is $Q_\infty^{\pi}(A, \text{counterclockwise})$?
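For reference, both Q-values can be obtained from the converged state values with a one-step lookahead. The notation $T(s, a, s')$ for the transition function and $R(s, a, s')$ for the reward function is an assumption here; the question's own tables may write them differently.

$$
Q_\infty^{\pi}(s, a) = \sum_{s'} T(s, a, s') \left[ R(s, a, s') + \gamma \, V_\infty^{\pi}(s') \right]
$$

Setting $s = A$ and $a$ to clockwise or counterclockwise gives the two quantities asked for.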

Part 4: What is the updated action for state A?

Clockwise

Counterclockwise
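The improvement step for state A amounts to comparing the two Q-values and keeping the action with the larger one. The sketch below shows that comparison under stated assumptions: `OUTCOMES_A`, `V_INF`, and `GAMMA` are made-up placeholders standing in for the question's transition table, converged value table, and discount factor.

```python
# Policy improvement at a single state, using HYPOTHETICAL numbers.
# Replace OUTCOMES_A, V_INF, and GAMMA with the values from the question.

GAMMA = 0.5  # assumed discount factor

# Outcomes of each action taken in state A: (probability, next_state, reward)
OUTCOMES_A = {
    "clockwise": [(1.0, "B", 0.0)],
    "counterclockwise": [(1.0, "C", -2.0)],
}

# Placeholder for the converged values of the current policy (not derived here).
V_INF = {"A": -2.0, "B": -1.0, "C": 0.0}


def q_value(outcomes, values, gamma):
    """One-step lookahead: expected reward plus discounted value of the successor."""
    return sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)


q_a = {action: q_value(outcomes, V_INF, GAMMA) for action, outcomes in OUTCOMES_A.items()}

# The improved policy picks the action with the highest Q-value.
improved_action = max(q_a, key=q_a.get)
print(q_a, improved_action)
```

With these placeholder numbers the clockwise action wins; with the real tables the result may differ. On a tie, `max` returns the first action in dictionary order, so a different tie-breaking rule would need to be coded explicitly.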
