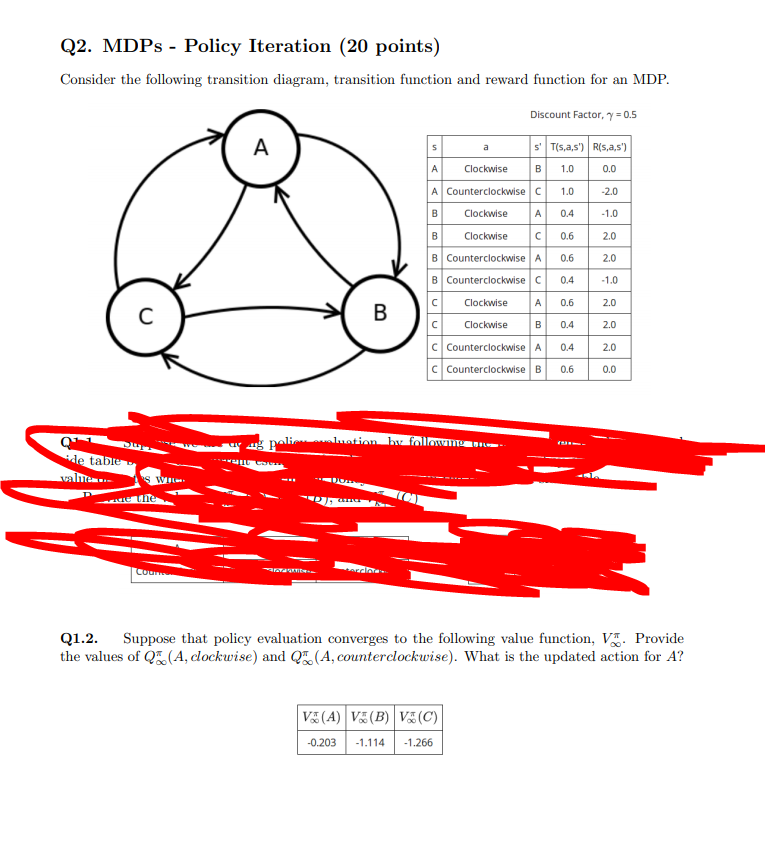

Q2. MDPs - Policy Iteration (20 points)

Consider the following transition diagram, transition function, and reward function for an MDP. Discount factor: γ = 0.5.

| s | a | s' | T(s, a, s') | R(s, a, s') |
|---|---|----|-------------|-------------|
| A | Clockwise | B | 1.0 | 0.0 |
| A | Counterclockwise | C | 1.0 | -2.0 |
| B | Clockwise | A | 0.4 | -1.0 |
| B | Clockwise | C | 0.6 | 2.0 |
| B | Counterclockwise | A | 0.6 | 2.0 |
| B | Counterclockwise | C | 0.4 | -1.0 |
| C | Clockwise | A | 0.6 | 2.0 |
| C | Clockwise | B | 0.4 | 2.0 |
| C | Counterclockwise | A | 0.4 | 2.0 |
| C | Counterclockwise | B | 0.6 | 0.0 |

Q1.2. Suppose that policy evaluation converges to the following value function, V. Provide the values of Q(A, clockwise) and Q(A, counterclockwise). What is the updated action for A?

| V(A) | V(B) | V(C) |
|------|------|------|
| -0.203 | -1.114 | -1.266 |
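One way to check the arithmetic for Q1.2 is a one-step lookahead from state A, which is the policy improvement step of policy iteration. The following is a minimal sketch, not an official solution: it uses only the two transitions out of A and the value function given in the question. The dictionary layout and the names `TRANSITIONS` and `q_value` are illustrative assumptions.

```python
# One-step lookahead (policy improvement) for state A.
# Q(s, a) = sum over s' of T(s, a, s') * (R(s, a, s') + GAMMA * V(s'))

GAMMA = 0.5  # discount factor from the question

# Value function that policy evaluation is said to converge to.
V = {"A": -0.203, "B": -1.114, "C": -1.266}

# (state, action) -> list of (next_state, T, R).
# Only the rows for state A are needed for this part of the question.
TRANSITIONS = {
    ("A", "clockwise"): [("B", 1.0, 0.0)],
    ("A", "counterclockwise"): [("C", 1.0, -2.0)],
}


def q_value(state, action):
    """Expected reward plus discounted value of the successor state."""
    return sum(
        t * (r + GAMMA * V[next_state])
        for next_state, t, r in TRANSITIONS[(state, action)]
    )


q_cw = q_value("A", "clockwise")
q_ccw = q_value("A", "counterclockwise")

# The updated action is the one with the larger Q-value.
best_action = max(["clockwise", "counterclockwise"],
                  key=lambda a: q_value("A", a))

print(f"Q(A, clockwise)        = {q_cw:.3f}")
print(f"Q(A, counterclockwise) = {q_ccw:.3f}")
print(f"Updated action for A   = {best_action}")
```

With these numbers, Q(A, clockwise) = 0.0 + 0.5 × (-1.114) = -0.557 and Q(A, counterclockwise) = -2.0 + 0.5 × (-1.266) = -2.633, so the updated action for A is clockwise.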
