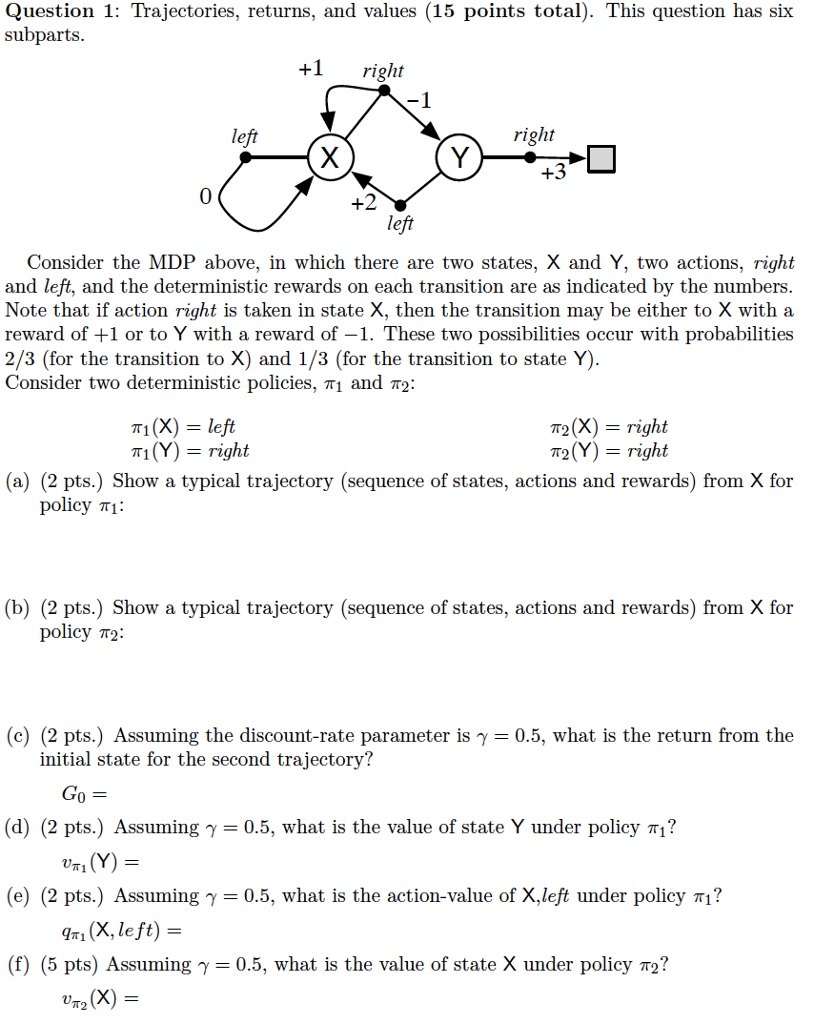


Question 1: Trajectories, returns, and values (15 points total). This question has six subparts.

Consider the MDP above, in which there are two states, X and Y, two actions, right and left, and the deterministic rewards on each transition are as indicated by the numbers. Note that if action right is taken in state X, then the transition may be either to X with a reward of +1 or to Y with a reward of -1. These two possibilities occur with probabilities 2/3 (for the transition to X) and 1/3 (for the transition to state Y).

Consider two deterministic policies, $\pi_1$ and $\pi_2$:

$\pi_1(X) = \text{left}$, $\pi_1(Y) = \text{right}$

$\pi_2(X) = \text{right}$, $\pi_2(Y) = \text{right}$

(a) (2 pts.) Show a typical trajectory (sequence of states, actions and rewards) from X for policy $\pi_1$.

(b) (2 pts.) Show a typical trajectory (sequence of states, actions and rewards) from X for policy $\pi_2$.

(c) (2 pts.) Assuming the discount-rate parameter is $\gamma = 0.5$, what is the return from the initial state for the second trajectory? $G_0 =$

(d) (2 pts.) Assuming $\gamma = 0.5$, what is the value of state Y under policy $\pi_1$? $v_{\pi_1}(Y) =$

(e) (2 pts.) Assuming $\gamma = 0.5$, what is the action-value of X, left under policy $\pi_1$? $q_{\pi_1}(X, \text{left}) =$

(f) (5 pts.) Assuming $\gamma = 0.5$, what is the value of state X under policy $\pi_2$? $v_{\pi_2}(X) =$
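For part (c), the return from the initial state is the discounted sum of the rewards along the trajectory, $G_0 = R_1 + \gamma R_2 + \gamma^2 R_3 + \dots$. The following is a minimal Python sketch of that sum, assuming $\gamma = 0.5$ as in the question. The function name `discounted_return` and the three-step reward prefix are illustrative only. The prefix uses just the rewards stated in the text for action right in state X (+1 when staying in X, -1 when moving to Y); rewards on the other transitions come from the figure and are not assumed here, so the result is a truncated return, not the full answer.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Return sum_k gamma**k * rewards[k] for a finite reward sequence.

    Hypothetical helper for illustration; it works backwards through the
    sequence using the recursion G_t = R_{t+1} + gamma * G_{t+1}.
    """
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Illustrative prefix under policy pi_2 starting in X (an assumption):
# X, right, +1, X, right, +1, X, right, -1, Y, ...
prefix_rewards = [1.0, 1.0, -1.0]
print(discounted_return(prefix_rewards, gamma=0.5))  # 1 + 0.5 - 0.25 = 1.25
```

To obtain the full return for a particular trajectory, the remaining rewards read off the figure would be appended to the list; with $\gamma = 0.5$ each later reward contributes half as much as the one before it.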
