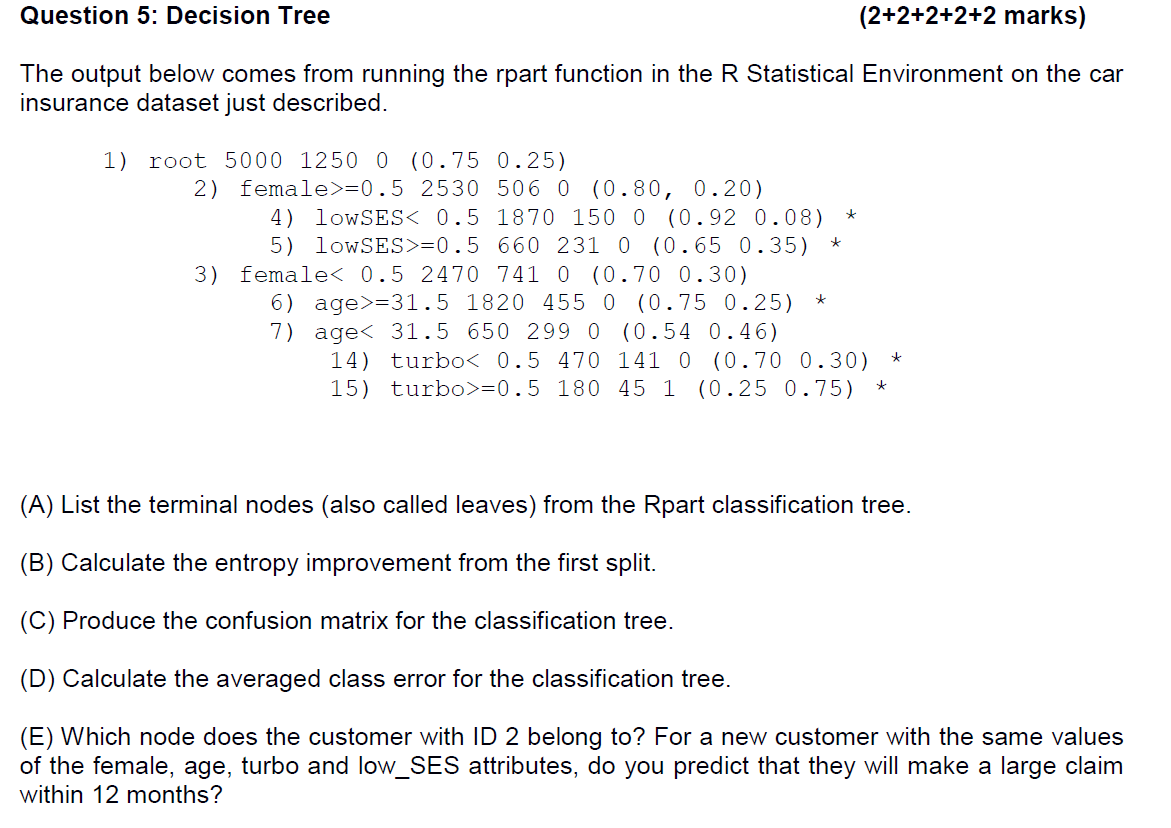

Question 5: Decision Tree (2+2+2+2+2 marks)

The output below comes from running the rpart function in the R Statistical Environment on the car insurance dataset just described.

    1) root 5000 1250 0 (0.75 0.25)
    2) female>=0.5 2530 506 0 (0.80 0.20)
    4) lowSES=0.5 660 231 0 (0.65 0.35) *
    3) female=31.5 1820 455 0 (0.75 0.25) *
    7) age=0.5 180 45 1 (0.25 0.75) *

(A) List the terminal nodes (also called leaves) from the rpart classification tree.

(B) Calculate the entropy improvement from the first split.

(C) Produce the confusion matrix for the classification tree.

(D) Calculate the averaged class error for the classification tree.

(E) Which node does the customer with ID 2 belong to? For a new customer with the same values of the female, age, turbo and low_SES attributes, do you predict that they will make a large claim within 12 months?
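Part (B) can be checked numerically. The sketch below is one possible way to compute the entropy improvement of the first split, and it rests on a few assumptions that are not stated in the question: entropy is measured in bits (base-2 logarithm), the third column of each rpart row is the number of class-1 cases in that node (true here because both nodes predict class 0), and the sibling of node 2 holds every root observation that is not in node 2. The variable and function names are illustrative only.

```python
import math


def entropy(probs):
    """Shannon entropy in bits; zero-probability classes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def node_entropy(n, pos):
    """Entropy of a two-class node with n observations, pos of them in class 1."""
    p = pos / n
    return entropy([1 - p, p])


# Counts read from the rpart output: (observations, class-1 cases).
root_n, root_pos = 5000, 1250   # 1) root 5000 1250 0 (0.75 0.25)
left_n, left_pos = 2530, 506    # 2) female>=0.5 2530 506 0 (0.80 0.20)

# Assumption: the other child of the root is the complement of node 2.
right_n = root_n - left_n
right_pos = root_pos - left_pos

h_root = node_entropy(root_n, root_pos)
h_left = node_entropy(left_n, left_pos)
h_right = node_entropy(right_n, right_pos)

# Weighted average entropy of the two children after the split.
h_children = (left_n / root_n) * h_left + (right_n / root_n) * h_right

# Entropy improvement (information gain) of the first split.
improvement = h_root - h_children

print(f"H(root)      = {h_root:.4f} bits")
print(f"H(children)  = {h_children:.4f} bits")
print(f"improvement  = {improvement:.4f} bits")
```

If the course defines entropy with the natural logarithm instead, swapping `math.log2` for `math.log` rescales every value by the same factor and leaves the comparison between splits unchanged.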
