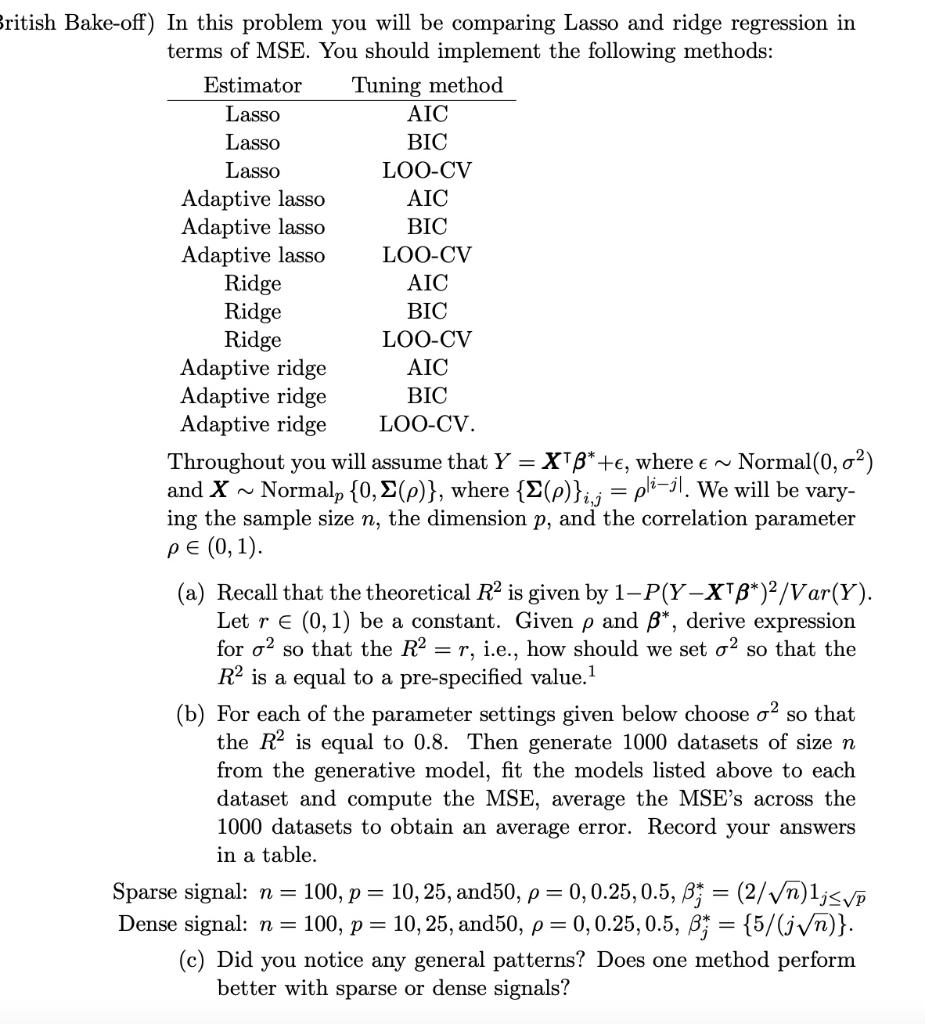

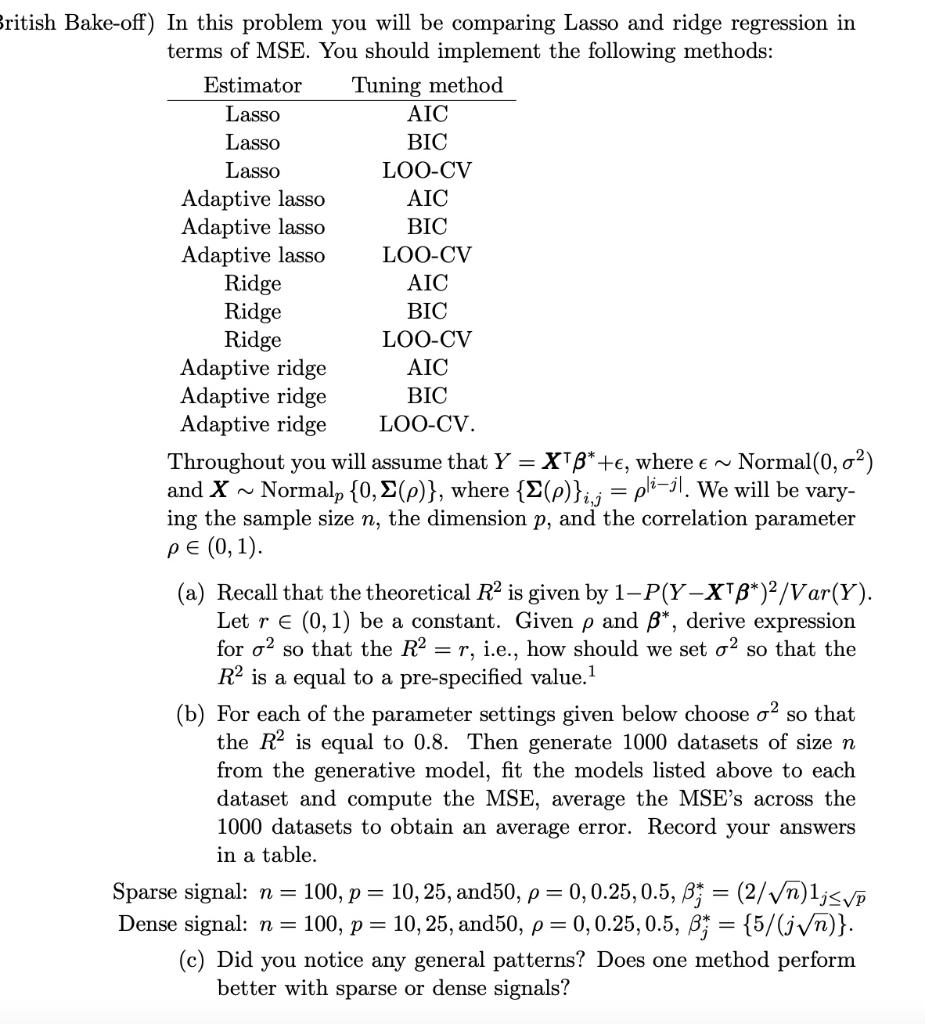

Question: ritish Bake-off) In this problem you will be comparing Lasso and ridge regression in terms of MSE. You should implement the following methods: Estimator Tuning

ritish Bake-off) In this problem you will be comparing Lasso and ridge regression in terms of MSE. You should implement the following methods: Estimator Tuning method Lasso AIC Lasso BIC Lasso LOO-CV Adaptive lasso AIC Adaptive lasso BIC Adaptive lasso LOO-CV Ridge AIC Ridge BIC Ridge LOO-CV Adaptive ridge AIC Adaptive ridge BIC Adaptive ridge LOO-CV. Throughout you will assume that Y = XTB*+, where e ~ Normal(0,0%) and X ~ Normalp {0, $(p)}, where {E()}ij = pli-il. We will be vary- ing the sample size n, the dimension p, and the correlation parameter pe (0,1). (a) Recall that the theoretical R2 is given by 1-P(Y-XTB*)/Var(Y). Let r (0,1) be a constant. Given p and 8*, derive expression for o2 so that the R2 = r, i.e., how should we set o2 so that the R2 is a equal to a pre-specified value.1 (b) For each of the parameter settings given below choose op so that the R is equal to 0.8. Then generate 1000 datasets of size n from the generative model, fit the models listed above to each dataset and compute the MSE, average the MSE's across the 1000 datasets to obtain an average error. Record your answers in a table. Sparse signal: n = 100, p = 10,25, and50, p = 0,0.25, 0.5, B = (2/Vn)1jsve Dense signal: n = 100, p = 10, 25, and50, p = 0,0.25, 0.5, B = {5/(jVn)}. (c) Did you notice any general patterns? Does one method perform better with sparse or dense signals? ritish Bake-off) In this problem you will be comparing Lasso and ridge regression in terms of MSE. You should implement the following methods: Estimator Tuning method Lasso AIC Lasso BIC Lasso LOO-CV Adaptive lasso AIC Adaptive lasso BIC Adaptive lasso LOO-CV Ridge AIC Ridge BIC Ridge LOO-CV Adaptive ridge AIC Adaptive ridge BIC Adaptive ridge LOO-CV. Throughout you will assume that Y = XTB*+, where e ~ Normal(0,0%) and X ~ Normalp {0, $(p)}, where {E()}ij = pli-il. We will be vary- ing the sample size n, the dimension p, and the correlation parameter pe (0,1). (a) Recall that the theoretical R2 is given by 1-P(Y-XTB*)/Var(Y). Let r (0,1) be a constant. Given p and 8*, derive expression for o2 so that the R2 = r, i.e., how should we set o2 so that the R2 is a equal to a pre-specified value.1 (b) For each of the parameter settings given below choose op so that the R is equal to 0.8. Then generate 1000 datasets of size n from the generative model, fit the models listed above to each dataset and compute the MSE, average the MSE's across the 1000 datasets to obtain an average error. Record your answers in a table. Sparse signal: n = 100, p = 10,25, and50, p = 0,0.25, 0.5, B = (2/Vn)1jsve Dense signal: n = 100, p = 10, 25, and50, p = 0,0.25, 0.5, B = {5/(jVn)}. (c) Did you notice any general patterns? Does one method perform better with sparse or dense signals